A Survey on PageRank

- 格式:pdf

- 大小:304.65 KB

- 文档页数:48

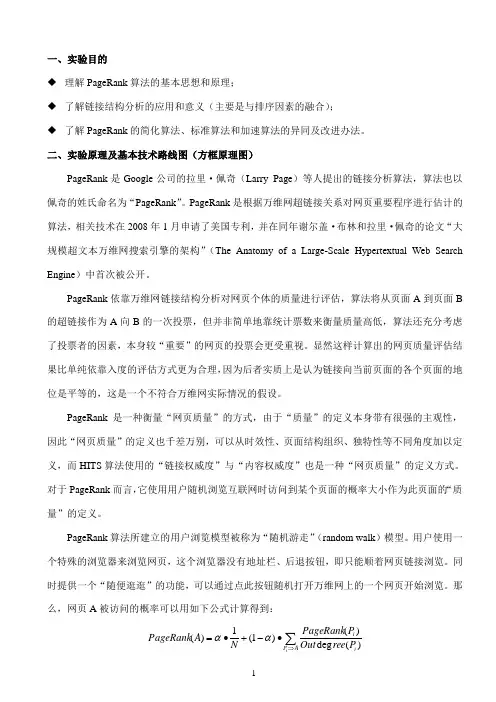

一、实验目的◆ 理解PageRank 算法的基本思想和原理;◆ 了解链接结构分析的应用和意义(主要是与排序因素的融合);◆ 了解PageRank 的简化算法、标准算法和加速算法的异同及改进办法。

二、实验原理及基本技术路线图(方框原理图)PageRank 是Google 公司的拉里·佩奇(Larry Page )等人提出的链接分析算法,算法也以佩奇的姓氏命名为“PageRank ”。

PageRank 是根据万维网超链接关系对网页重要程序进行估计的算法,相关技术在2008年1月申请了美国专利,并在同年谢尔盖·布林和拉里·佩奇的论文“大规模超文本万维网搜索引擎的架构”(The Anatomy of a Large-Scale Hypertextual Web Search Engine )中首次被公开。

PageRank 依靠万维网链接结构分析对网页个体的质量进行评估,算法将从页面A 到页面B 的超链接作为A 向B 的一次投票,但并非简单地靠统计票数来衡量质量高低,算法还充分考虑了投票者的因素,本身较“重要”的网页的投票会更受重视。

显然这样计算出的网页质量评估结果比单纯依靠入度的评估方式更为合理,因为后者实质上是认为链接向当前页面的各个页面的地位是平等的,这是一个不符合万维网实际情况的假设。

PageRank 是一种衡量“网页质量”的方式,由于“质量”的定义本身带有很强的主观性,因此“网页质量”的定义也千差万别,可以从时效性、页面结构组织、独特性等不同角度加以定义,而HITS 算法使用的“链接权威度”与“内容权威度”也是一种“网页质量”的定义方式。

对于PageRank 而言,它使用用户随机浏览互联网时访问到某个页面的概率大小作为此页面的“质量”的定义。

PageRank 算法所建立的用户浏览模型被称为“随机游走”(random walk )模型。

用户使用一个特殊的浏览器来浏览网页,这个浏览器没有地址栏、后退按钮,即只能顺着网页链接浏览。

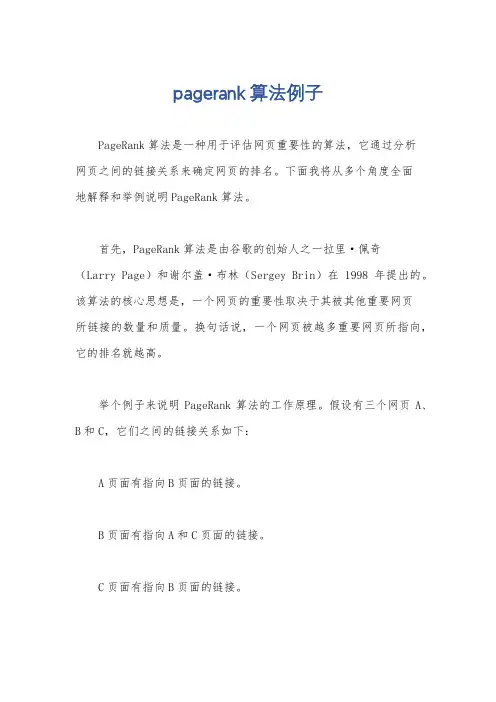

pagerank算法例子PageRank算法是一种用于评估网页重要性的算法,它通过分析网页之间的链接关系来确定网页的排名。

下面我将从多个角度全面地解释和举例说明PageRank算法。

首先,PageRank算法是由谷歌的创始人之一拉里·佩奇(Larry Page)和谢尔盖·布林(Sergey Brin)在1998年提出的。

该算法的核心思想是,一个网页的重要性取决于其被其他重要网页所链接的数量和质量。

换句话说,一个网页被越多重要网页所指向,它的排名就越高。

举个例子来说明PageRank算法的工作原理。

假设有三个网页A、B和C,它们之间的链接关系如下:A页面有指向B页面的链接。

B页面有指向A和C页面的链接。

C页面有指向B页面的链接。

根据PageRank算法,我们可以计算每个页面的初始排名。

假设初始排名为1,我们可以得到以下结果:A页面的初始排名为1。

B页面的初始排名为1。

C页面的初始排名为1。

接下来,我们根据链接关系来更新页面的排名。

根据PageRank 算法的计算公式,排名的更新是一个迭代过程。

在每一次迭代中,我们根据页面之间的链接关系来更新页面的排名。

在第一次迭代中,我们可以得到以下结果:A页面的排名更新为,1/2(来自B页面的链接)。

B页面的排名更新为,1/2(来自A页面的链接) + 1(来自C 页面的链接)。

C页面的排名更新为,1/2(来自B页面的链接)。

在第二次迭代中,我们再次根据链接关系来更新页面的排名。

根据公式,我们可以得到以下结果:A页面的排名更新为,1/2(来自B页面的链接) + 1/2(来自B页面的链接)。

B页面的排名更新为,1/2(来自A页面的链接) + 1(来自C 页面的链接)。

C页面的排名更新为,1/2(来自B页面的链接)。

通过多次迭代,我们最终可以得到每个页面的稳定排名。

在这个例子中,最终的排名结果可能是:A页面的排名为0.75。

B页面的排名为1.5。

C页面的排名为0.75。

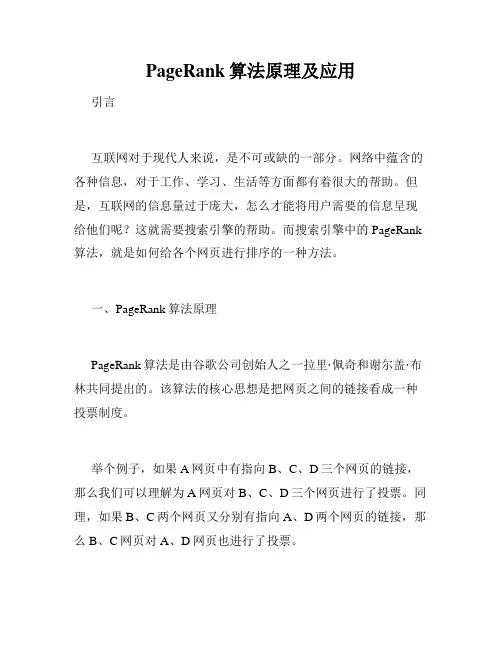

PageRank算法原理及应用引言互联网对于现代人来说,是不可或缺的一部分。

网络中蕴含的各种信息,对于工作、学习、生活等方面都有着很大的帮助。

但是,互联网的信息量过于庞大,怎么才能将用户需要的信息呈现给他们呢?这就需要搜索引擎的帮助。

而搜索引擎中的PageRank 算法,就是如何给各个网页进行排序的一种方法。

一、PageRank算法原理PageRank算法是由谷歌公司创始人之一拉里·佩奇和谢尔盖·布林共同提出的。

该算法的核心思想是把网页之间的链接看成一种投票制度。

举个例子,如果A网页中有指向B、C、D三个网页的链接,那么我们可以理解为A网页对B、C、D三个网页进行了投票。

同理,如果B、C两个网页又分别有指向A、D两个网页的链接,那么B、C网页对A、D网页也进行了投票。

但是,这个投票制度并不是完全平等的。

如果A网页的排名比B、C、D网页都要高,那么A网页对B、C、D网页的投票效果就要比B、C、D网页对A网页的投票效果更大。

又因为B、C网页同时又对A网页进行了投票,所以其对D网页的投票效果会比A网页的投票效果更大。

PageRank算法正是基于这种投票论证进行的,即如果一个网页被越多的其他网页链接的话,那么这个网页就越重要。

同时,如果链接这个网页的网页还有更高的权重,那么这个网页的权重就会更大。

Pagerank算法是一种迭代算法。

迭代中每个网页的PageRank 值逐渐逼近其真实值。

大致流程如下:1. 给每一个网页初始化PageRank值为12. 每个网页的PageRank值等于其他链接到这个网页的网页的PageRank值乘以这个网页投出去链接的数量除以被链接到的网页的总数再乘以一个0.85的系数,再加上一个概率0.153. 重复执行第二步,直到所有网页的PageRank值收敛二、PageRank算法应用PageRank算法的应用主要体现在搜索引擎排序上。

因为搜索引擎返回的结果一般都是以网页链接的形式呈现的,PageRank算法可以依据链接来判断网页的重要性并进行排序。

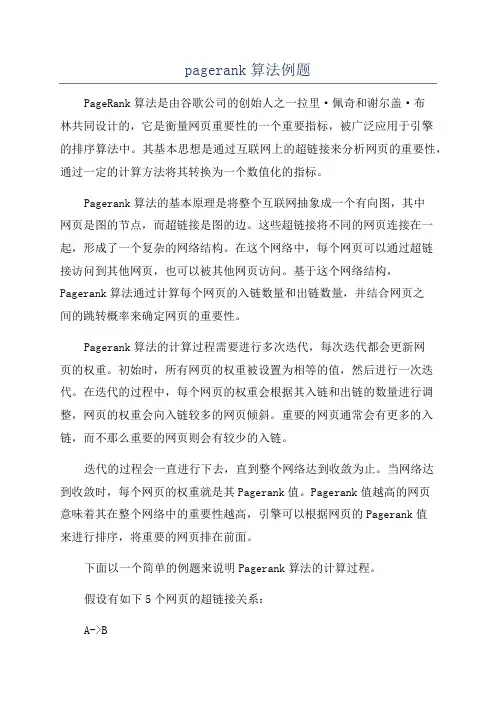

pagerank算法例题PageRank算法是由谷歌公司的创始人之一拉里·佩奇和谢尔盖·布林共同设计的,它是衡量网页重要性的一个重要指标,被广泛应用于引擎的排序算法中。

其基本思想是通过互联网上的超链接来分析网页的重要性,通过一定的计算方法将其转换为一个数值化的指标。

Pagerank算法的基本原理是将整个互联网抽象成一个有向图,其中网页是图的节点,而超链接是图的边。

这些超链接将不同的网页连接在一起,形成了一个复杂的网络结构。

在这个网络中,每个网页可以通过超链接访问到其他网页,也可以被其他网页访问。

基于这个网络结构,Pagerank算法通过计算每个网页的入链数量和出链数量,并结合网页之间的跳转概率来确定网页的重要性。

Pagerank算法的计算过程需要进行多次迭代,每次迭代都会更新网页的权重。

初始时,所有网页的权重被设置为相等的值,然后进行一次迭代。

在迭代的过程中,每个网页的权重会根据其入链和出链的数量进行调整,网页的权重会向入链较多的网页倾斜。

重要的网页通常会有更多的入链,而不那么重要的网页则会有较少的入链。

迭代的过程会一直进行下去,直到整个网络达到收敛为止。

当网络达到收敛时,每个网页的权重就是其Pagerank值。

Pagerank值越高的网页意味着其在整个网络中的重要性越高,引擎可以根据网页的Pagerank值来进行排序,将重要的网页排在前面。

下面以一个简单的例题来说明Pagerank算法的计算过程。

假设有如下5个网页的超链接关系:A->BA->CB->CC->AD->A其中关系“->”表示一个网页通过超链接指向另一个网页。

初始化时,所有网页的权重都设置为1/5,即:A:1/5B:1/5C:1/5D:1/5E:1/5进行第一次迭代时,根据网页之间的超链接关系,更新所有网页的排名。

A:(1-0.2)/5+0.2*(1/3+1/4)=0.34B:(1-0.2)/5=0.16C:(1-0.2)/5+0.2*(1/4+1/4+1/4)=0.32D:(1-0.2)/5=0.16E:(1-0.2)/5=0.16进行第二次迭代时,再次根据网页之间的超链接关系,更新所有网页的排名。

pagerank算法公式

PageRank是一种衡量网页重要性的算法,其基本思想是:对于一个网页,其“重要性”或者“权威性”主要取决于其引用的网页质量和数量。

PageRank的计算公式如下:

v’=Mv

其中,v是一个n维向量,每个分量代表对应节点的PageRank值的估计值,称作概率分布向量。

M是一个n×n矩阵,表示万维网的网页构成的图。

节

点A、B、C、D代表网页,有向边代表起点页面包含终点页面的链接。

PageRank还有一个简化模型:一个网页的影响力等于所有入链集合的页面的加权影响力之和,公式表示为:PR(u)=∑v∈BuPR(v)L(v)PR(u)=\sum_{v \in B_{u}} \frac{P R(v)}{L(v)}PR(u)=v∈Bu∑L(v)PR(v)u为待评估的页面,Bu为页面u的入链集合。

针对入链集合中的任意页面v,它能给u带来的

影响力是其自身的影响力PR(v)除以v页面的出链数量,统计所有能给u带来链接的页面v,得到的总和就是网页u的影响力,即为PR(u)。

请注意,这只是PageRank算法的简化模型,实际应用中PageRank算法会更复杂。

如需了解更多关于PageRank算法的信息,建议咨询计算机领域专业人士或查阅相关书籍。

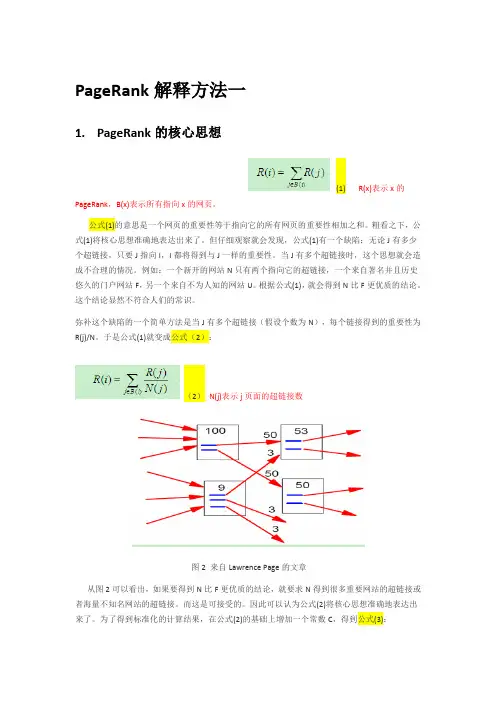

PageRank解释方法一1.PageRank的核心思想(1) R(x)表示x的PageRank,B(x)表示所有指向x的网页。

公式(1)的意思是一个网页的重要性等于指向它的所有网页的重要性相加之和。

粗看之下,公式(1)将核心思想准确地表达出来了。

但仔细观察就会发现,公式(1)有一个缺陷:无论J有多少个超链接,只要J指向I,I都将得到与J一样的重要性。

当J有多个超链接时,这个思想就会造成不合理的情况。

例如:一个新开的网站N只有两个指向它的超链接,一个来自著名并且历史悠久的门户网站F,另一个来自不为人知的网站U。

根据公式(1),就会得到N比F更优质的结论。

这个结论显然不符合人们的常识。

弥补这个缺陷的一个简单方法是当J有多个超链接(假设个数为N),每个链接得到的重要性为R(j)/N。

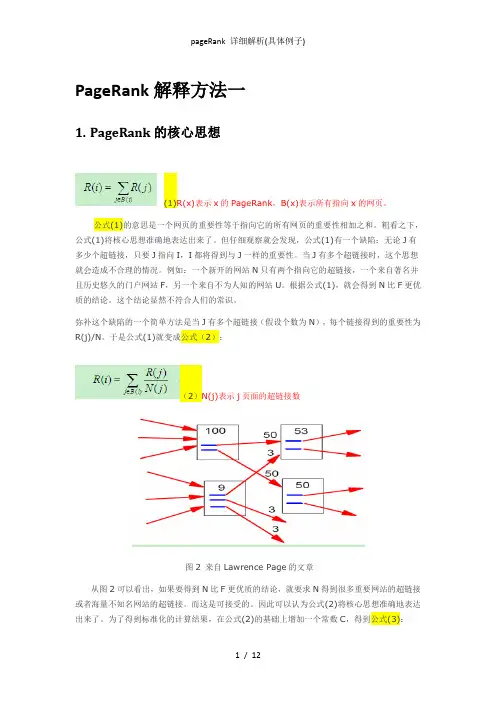

于是公式(1)就变成公式(2):(2)N(j)表示j页面的超链接数图2 来自Lawrence Page的文章从图2可以看出,如果要得到N比F更优质的结论,就要求N得到很多重要网站的超链接或者海量不知名网站的超链接。

而这是可接受的。

因此可以认为公式(2)将核心思想准确地表达出来了。

为了得到标准化的计算结果,在公式(2)的基础上增加一个常数C,得到公式(3):(3)2.计算,实例由公式(3)可知,PageRank是递归定义的。

换句话就是要得到一个页面的PageRank,就要先知道另一些页面的PageRank。

因此需要设置合理的PageRank初始值。

不过,如果有办法得到合理的PageRank初始值,还需要这个算法吗或者说,这个严重依赖于初始值的算法有什么意义吗依赖于合理初始值的PageRank算法是没意义的,那么不依赖于初始值的PageRank算法就是有意义的了。

也就是说,如果存在一种计算方法,使得无论怎样设置初始值,最后都会收敛到同一个值就行了。

要做到这样,就要换一个角度看问题,从线性代数的角度看问题。

将页面看作节点,超链接看作有向边,整个互联网就变成一个有向图了。

第46卷 第5期V o.l 46 N o.5山 东 大 学 学 报 (理 学 版)Journal of Shandong U niversity(N atural Science)2011年5月M ay 2011收稿日期:2011 01 10;网络出版时间:2011 05 0412 02网络出版地址:http ://k.i net/k c m s/detail/37.1389.N .20110504.1202.001.h t m l基金项目:国家自然科学基金资助项目(60736044,60903107);高等学校博士学科点专项科研基金项目(20090002120005);国家重点基础研究(973)项目(2004CB318108);国家高技术研究发展计划(863计划)项目(2006AA01Z141)作者简介:李智超(1985-),男,博士研究生,研究方向为反作弊技术,观点挖掘.Ema i :l liz h ichaoxyz @s ohu.co m文章编号:1671 9352(2011)05 0001 08网页作弊与反作弊技术综述李智超,余慧佳,刘奕群,马少平(清华大学智能技术与系统国家重点实验室,北京100084)摘要:随着网络信息爆炸式的增长,搜索引擎成为人们首选的获取信息的主要途径。

能否在搜索引擎的排名中占有比较靠前的位置,将在一定程度上决定网页的访问量。

一些网站并不是通过提高网页质量来提高其在搜索引擎中的排名,而是根据搜索引擎自身的特点,采用欺骗手段来提高排名,这就是网页作弊。

网页作弊是搜索引擎面临的重大挑战之一。

本文将结合常见的网页作弊的方法,阐述当前已经存在的比较有效的反作弊技术。

关键词:网页作弊;反作弊;搜索引擎中图分类号:TP391 3 文献标志码:AA s urvey of web spa m and anti spa m techni quesL I Zhi chao ,YU H u i jia ,L IU Y i qun ,M A Shao p i n g(S t a te K ey L ab o f In telligent T echno l o gy and Sy ste m s ,T si nghua U n i v ersity ,B eiji ng 100084,C hina)Ab stract :W ith the inc rease o fW eb i nform ati o n ,search eng i nes hav e becom e the pr i nci pa l approach to i nfo r m a tion re triev a.l T he acce ssi ng o f a pag e is basicall y dec i ded by its ranki ng in search eng i ne s .Som e site s boo st t he ir page rank i ng w it hout i m pro v i ng the qua lit y o f the pages ,but deceive the search eng i nes acco rd i ng to its charac teristi c ,w hich is ca lled W eb Spa m.W eb spam is one o f t he cha llenge s o f search eng i nes .V ali d an ti spa m techniques are presented w ith an i n tro ducti on o f comm on W eb spam.K ey w ords :w eb spam;an ti s pa m;sea rch eng i ne0 引言互联网在最近的十几年间得到了飞速的发展,网络上的信息也成爆炸式的增长,我国域名总数量已经超过了1121万个,截至2010年6月,域名注册者在我国境内的网站数目为279万[1],由于动态网页的广泛使用以及W eb2 0的普及,真实的网页数目更是难以估算。

pagerank算法相关的概念,

Pagerank算法是一种用于计算网页排名的算法,它是由谷歌公司的创始人拉里·佩奇和谢尔盖·布林在1998年开发出来的。

Pagerank

算法主要基于网络链接分析理论,它能够通过分析页面之间的链接关系,识别出页面的重要性和影响力,从而对网页进行排序。

Pagerank算法的基本思想是:对于一个具有链接关系的网页集合,权重高的链接指向的页面的排名就越高。

这意味着,一个网页的排名

不仅取决于自身的内容质量,还取决于链接到它的网页的权重。

此外,Pagerank算法还考虑了链接的数量和质量,以及链接页面的主题等因素。

Pagerank算法的核心公式为:

PR(A) = (1-d) + d (PR(T1)/C(T1) + ... + PR(Tn)/C(Tn))

其中,PR(A)表示网页A的排名值,d为阻尼系数,通常被定义为0.85,T1-Tn表示所有链接到页面A的网页,C(T1)-C(Tn)表示对应网

页的链接数,PR(T1)-PR(Tn)表示对应网页的排名值。

Pagerank算法的实现是以迭代的方式进行的,即从初始状态开始,对每个网页进行计算,然后根据当前的排名值重新计算所有网页的排

名值,并不断迭代直到达到一定的收敛精度。

在实现过程中,需要考

虑到计算量的问题,因为对于大规模的网页集合,计算复杂度会极大

地增加。

Pagerank算法已经成为衡量网页重要性的重要指标之一,不少搜索引擎和网站都采用了这种算法来进行排序。

此外,Pagerank算法还

具有其他应用方面,例如社交网络分析、反垃圾邮件等领域,它为我

们提供了一种全新的思考角度和解决问题的思路。

PageRank解释方法一1.PageRank的核心思想(1)R(x)表示x的PageRank,B(x)表示所有指向x的网页。

公式(1)的意思是一个网页的重要性等于指向它的所有网页的重要性相加之和。

粗看之下,公式(1)将核心思想准确地表达出来了。

但仔细观察就会发现,公式(1)有一个缺陷:无论J有多少个超链接,只要J指向I,I都将得到与J一样的重要性。

当J有多个超链接时,这个思想就会造成不合理的情况。

例如:一个新开的网站N只有两个指向它的超链接,一个来自著名并且历史悠久的门户网站F,另一个来自不为人知的网站U。

根据公式(1),就会得到N比F更优质的结论。

这个结论显然不符合人们的常识。

弥补这个缺陷的一个简单方法是当J有多个超链接(假设个数为N),每个链接得到的重要性为R(j)/N。

于是公式(1)就变成公式(2):(2)N(j)表示j页面的超链接数图2 来自Lawrence Page的文章从图2可以看出,如果要得到N比F更优质的结论,就要求N得到很多重要网站的超链接或者海量不知名网站的超链接。

而这是可接受的。

因此可以认为公式(2)将核心思想准确地表达出来了。

为了得到标准化的计算结果,在公式(2)的基础上增加一个常数C,得到公式(3):(3)2.计算,实例由公式(3)可知,PageRank是递归定义的。

换句话就是要得到一个页面的PageRank,就要先知道另一些页面的PageRank。

因此需要设置合理的PageRank初始值。

不过,如果有办法得到合理的PageRank初始值,还需要这个算法吗?或者说,这个严重依赖于初始值的算法有什么意义吗?依赖于合理初始值的PageRank算法是没意义的,那么不依赖于初始值的PageRank算法就是有意义的了。

也就是说,如果存在一种计算方法,使得无论怎样设置初始值,最后都会收敛到同一个值就行了。

要做到这样,就要换一个角度看问题,从线性代数的角度看问题。

将页面看作节点,超链接看作有向边,整个互联网就变成一个有向图了。

pagerank算法Pagerank算法介绍Pagerank算法,也称为网页排名算法,是Google搜索引擎的核心算法之一。

这个算法最早由Google创始人之一、斯坦福大学教授拉里·佩奇(Larry Page)提出,并以他的姓氏命名。

Pagerank 算法用于评估网页的重要性和排名顺序,可以帮助用户快速找到相关性更高的网页。

Pagerank算法的核心思想是根据互联网中网页之间的链接结构来评估网页的质量。

它基于一个假设:如果一个网页被很多其他网页链接到,那么它就是一个重要的网页。

而如果这些链接是来自其他重要的网页,那么这个网页的重要性就更高。

Pagerank算法通过计算网页之间的链接关系,为每个网页赋予一个Pagerank值,用于衡量其重要性。

Pagerank算法的计算过程可以简单概括为以下几个步骤:1. 初始化:给每个网页一个初始的Pagerank值,一般可以设置为相等的数值,例如1/N,其中N是互联网中的总网页数。

2. 迭代计算:通过多次迭代计算,逐步更新每个网页的Pagerank 值。

每一次迭代中,对于每个网页,计算其Pagerank值,该值与其被其他网页链接所传递的Pagerank值相关。

具体计算公式为:PR(A) = (1-d) + d * (PR(T1)/C(T1) + PR(T2)/C(T2) + ... + PR(Tn)/C(Tn))其中PR(A)表示网页A的Pagerank值,d是一个称为阻尼因子的常数(一般取值为0.85),T1至Tn表示链接到网页A的其他网页,C(T1)至C(Tn)则表示T1至Tn这些网页的出链数量。

3. 迭代终止:当每个网页的Pagerank值不再发生显著变化时,迭代可以停止,得到最终的Pagerank值。

Pagerank算法的核心在于通过网络中的链接关系来评估网页的重要性,而不仅仅是根据关键词等其他因素进行评估。

这种链接分析的方式使得Pagerank算法具有很强的可靠性和稳定性,因为网页的链接结构并不容易被人为操控。

PageRank算法PageRank是⽹页重要程度计算⽅法,可推⼴到有向图结点的重要程度的计算。

基本思想是在有向图上定义随机游⾛模型,在⼀定条件下,极限情况访问每个结点的概率收敛到平稳分布。

给定有n个结点强连通且⾮周期性的有向图,在其基础上定义随机游⾛模型。

假设转移矩阵M,在时刻0,1,2,…,t,…访问各个结点概率为则其极限存在,那么极限向量R表⽰马尔可夫链的平稳分布,满⾜平稳分布R称为这个图的PageRank。

R的各个分量为各个结点的PageRank值,这是PageRank的基本定义,但有时有向图并未能满⾜强连通且⾮周期性的条件,没有其对应的平稳分布。

然⽽可以在基本定义上导⼊平滑项,这样平稳分布向量R就由下⾯的公式决定其中,1是所有分量为1 的n维向量;d阻尼因⼦,⼀般由经验决定因此这也称为PageRank的⼀般定义,其随机游⾛模型的转移矩阵由两部分的线性组合组成,⼀部分是有向图的基本转移矩阵M,另⼀部分是完全随机的转移矩阵。

PageRank的计算⽅法包括幂法、迭代计算法、代数算法。

幂法是其常⽤的⽅法,通常计算矩阵的主特征值和主特征向量求求得有向图的⼀般PageRank。

计算流程:输⼊:有n个结点的有向图,其转移矩阵M,阻尼因⼦d,初始向量x0和计算精度ε输出:有向图的PageRank平稳向量R1. 令t=0,选择初始向量x02. 计算有向图的⼀般转移矩阵A3. 迭代并规范化结果向量4. 当时,令,停⽌迭代。

5. 否则,令t=t+1,执⾏步骤(3)6. 对R进⾏规范化处理,使其表⽰概率分布例,给定以下有向图,d=0.85,ε=0.005,求其⼀般的PageRank图1 有向图有向图的转移矩阵7. 令t=0,8. 根据公式计算该图的⼀般转移矩阵A9. 迭代并规范化通过多次迭代,在t=21,22时,得到向量,停⽌迭代,取将R规范化,即使得其各个分量的和为1,迭代计算算法的流程:输⼊:有n个结点的有向图,其转移矩阵M,阻尼因⼦d,初始向量R0输出:有向图的PageRank平稳向量R(1)令t=0,(2)计算(3)当时充分接近,令,停⽌迭代。

PageRank算法PageRank,即网页排名,又称网页级别、Google左侧排名或佩奇排名。

是Google创始人拉里·佩奇和谢尔盖·布林于1997年构建早期的搜索系统原型时提出的链接分析算法,自从Google在商业上获得空前的成功后,该算法也成为其他搜索引擎和学术界十分关注的计算模型。

目前很多重要的链接分析算法都是在PageRank算法基础上衍生出来的。

PageRank是Google用于用来标识网页的等级/重要性的一种方法,是Google 用来衡量一个网站的好坏的唯一标准。

在揉合了诸如Title标识和Keywords标识等所有其它因素之后,Google通过PageRank来调整结果,使那些更具“等级/重要性”的网页在搜索结果中另网站排名获得提升,从而提高搜索结果的相关性和质量。

其级别从0到10级,10级为满分。

PR值越高说明该网页越受欢迎(越重要)。

例如:一个PR值为1的网站表明这个网站不太具有流行度,而PR值为7到10则表明这个网站非常受欢迎(或者说极其重要)。

一般PR值达到4,就算是一个不错的网站了。

Google把自己的网站的PR值定到10,这说明Google这个网站是非常受欢迎的,也可以说这个网站非常重要。

在PageRank提出之前,已经有研究者提出利用网页的入链数量来进行链接分析计算,这种入链方法假设一个网页的入链越多,则该网页越重要。

早期的很多搜索引擎也采纳了入链数量作为链接分析方法,对于搜索引擎效果提升也有较明显的效果。

PageRank除了考虑到入链数量的影响,还参考了网页质量因素,两者相结合获得了更好的网页重要性评价标准。

对于某个互联网网页A来说,该网页PageRank的计算基于以下两个基本假设:λ数量假设:在Web图模型中,如果一个页面节点接收到的其他网页指向的入链数量越多,那么这个页面越重要。

λ质量假设:指向页面A的入链质量不同,质量高的页面会通过链接向其他页面传递更多的权重。

[编辑本段]什么是pagerankPageRank(网页级别),2001年9月被授予美国专利,专利人是Google创始人之一拉里·佩奇(Larry Page)。

因此,PageRank里的page不是指网页,而是指佩奇,即这个等级方法是以佩奇来命名的。

它是Google排名运算法则(排名公式)的一部分,是Google用于用来标识网页的等级/重要性的一种方法,是Google用来衡量一个网站的好坏的唯一标准。

在揉合了诸如Title标识和Keywords标识等所有其它因素之后,Google通过PageRank来调整结果,使那些更具“等级/重要性”的网页在搜索结果中另网站排名获得提升,从而提高搜索结果的相关性和质量。

其级别从1到10级,10级为满分。

PR值越高说明该网页越受欢迎(越重要)。

例如:一个P R值为1的网站表明这个网站不太具有流行度,而PR值为7到10则表明这个网站非常受欢迎(或者说极其重要)。

一般PR值达到4,就算是一个不错的网站了。

Go ogle把自己的网站的PR值定到10,这说明Google这个网站是非常受欢迎的,也可以说这个网站非常重要。

Google的PageRank根据网站的外部链接和内部链接的数量和质量俩衡量网站的价值。

PageRank背后的概念是,每个到页面的链接都是对该页面的一次投票,被链接的越多,就意味着被其他网站投票越多。

这个就是所谓的“链接流行度”——衡量多少人愿意将他们的网站和你的网站挂钩。

PageRank这个概念引自学术中一篇论文的被引述的频度——即被别人引述的次数越多,一般判断这篇论文的权威性就越高。

Google有一套自动化方法来计算这些投票。

Google的PageRank分值从0到1 0;PageRank为10表示最佳,但非常少见,类似里氏震级(Richter scale),Pag eRank级别也不是线性的,而是按照一种指数刻度。

这是一种奇特的数学术语,意思是PageRank4不是比PageRank3好一级——而可能会好6到7倍。

pagerank方法PageRank方法是一种用于评估网页重要性的算法,它是由谷歌公司的创始人拉里·佩奇和谢尔盖·布林在1996年提出的。

该算法基于网页之间的链接关系,通过计算每个网页的入链数量和入链质量来确定其重要性。

在PageRank算法中,每个网页都被视为一个节点,并且网页之间的链接被视为有向边。

当一个网页A链接到另一个网页B时,被链接的网页B会获得一部分A 的PageRank值。

而被链接的网页的重要性也会影响链接出去的网页的重要性。

通过这种方式,PageRank算法形成了一个网页链接的评估系统。

PageRank算法的核心思想是基于概率模型。

它假设用户在互联网上随机浏览网页,并且以一定概率点击链接跳转到其他网页。

在这个模型中,一个重要的网页应该具有更多的入链,这意味着更多的网页会链接到该网页,从而提高用户访问该网页的概率。

为了计算每个网页的PageRank值,PageRank算法采用了迭代的方法。

初始时,每个网页被赋予相等的PageRank值。

然后,在每一次迭代中,PageRank值会被重新计算,直到达到收敛。

在计算过程中,每个网页的PageRank值会根据其入链的数量和质量进行调整。

PageRank算法的应用不仅限于评估网页重要性,还可以用于搜索引擎结果排序、网络社区发现和推荐系统等领域。

通过PageRank算法,搜索引擎可以提供更准确、有用的搜索结果,帮助用户找到他们想要的信息。

总而言之,PageRank方法是一种用于评估网页重要性的算法,它通过考虑网页之间的链接关系来确定每个网页的重要性。

它是谷歌公司在建立其搜索引擎算法中的重要一环,也是互联网发展中的一项重要技术。

一、介绍Pagerank算法Pagerank算法是由谷歌公司的联合创始人拉里·佩奇(Larry Page)和谢尔盖·布林(Sergey Brin)在1996年提出的,该算法被用于谷歌搜索引擎中用于确定网页排名的算法。

Pagerank算法基于网页之间的信息关系,通过对网页的信息数量和质量进行分析,计算出每个网页的权重,从而决定网页在搜索结果中的排名。

二、Pagerank算法的原理Pagerank算法基于下面的公式计算网页的排名:PR(A) = (1-d) + d (PR(T1)/C(T1) + ... + PR(Tn)/C(Tn))在公式中,PR(A)代表网页A的排名,d代表阻尼因子,通常取值为0.85,PR(Ti)代表信息到网页A的其他网页的排名,C(Ti)代表其他网页T1到Tn的出链数量。

Pagerank算法的原理是通过不断迭代计算,直到收敛为止,得到每个网页的排名。

三、实际应用案例1. 谷歌搜索引擎Pagerank算法最为著名的应用就是谷歌搜索引擎。

谷歌搜索引擎利用Pagerank算法来确定网页的排名,进而为用户提供最相关的搜索结果。

Pagerank算法的优势在于可以有效地识别网页的权威性和可信度,提高搜索结果的质量。

2. 网络推荐系统在网络推荐系统中,Pagerank算法也被大量应用。

在社交网络中,通过分析用户之间的关系,利用Pagerank算法可以计算出每个用户的影响力,并基于此为用户推荐合适的内容或信息。

3. 网页排行榜许多全球信息站和社区都利用Pagerank算法来确定网页排行榜,例如新闻全球信息站、论坛和博客评台等。

通过Pagerank算法,可以准确地评估网页的受欢迎程度和影响力,为用户提供最有价值的内容。

4. 互联网广告投放在互联网广告投放领域,Pagerank算法也被广泛应用。

通过分析网页的信息关系和排名,可以有效地确定广告投放的位置,使广告主能够更精准地找到目标受众,提高广告的点击率和转化率。

Internet Mathematics Vol.2,No.1:73-120A Survey on PageRankComputingPavel BerkhinAbstract.This survey reviews the research related to PageRank po-nents of a PageRank vector serve as authority weights for web pages independent of their textual content,solely based on the hyperlink structure of the web.PageRank is typically used as a web search ranking component.This defines the importance of the model and the data structures that underly PageRank puting even a single PageRank is a difficult computational puting many PageRanks is a much more complex challenge.Recently,significant effort has been invested in building sets of personalized PageRank vectors.PageRank is also used in many diverse applications other than ranking.We are interested in the theoretical foundations of the PageRank formulation,in the acceleration of PageRank computing,in the effects of particular aspects of web graph structure on the optimal organization of computations,and in PageRank stability.We also review alternative models that lead to authority indices similar to PageRank and the role of such indices in applications other than web search.We also discuss link-based search personalization and outline some aspects of PageRank infrastructure from associated measures of convergence to link preprocessing.1.IntroductionThe tremendous popularity of Internet search engines presents a striking example of a successful fusion of many developments in different areas of computer science and relatedfields from statistics to information retrieval to natural language processing.One of the most difficult problems in web search is the ranking of the results recalled in response to a user query.Since contemporary web crawls ©A K Peters,Ltd.1542-7951/05$0.50per page7374Internet Mathematics discover billions of pages,broad topic queries may result in recalling hundreds of thousand of pages containing the query terms.Only a few dozens of the recalled pages are actually presented to the user.Moreover,these results are presented in order of relevance.A variety of ranking features are used by Internet search engines to come up with a good order.Some of the query-independent features are based on page content.Some utilize the hyperlink structure of the web. The ascent of Google to prominence is to a certain degree associated with the concept of a new relevance feature of the second kind,PageRank.PageRank as-signs authority weights to each page of a web graph based on the web hyperlink structure.It is based on an assumption that authoritative pages usually contain links to good pages,therefore endorsing them in a degree inversely proportional to the number of their out-links.This fundamental assumption is not always true in practice partly because good pages may erroneously link to bad ones,partly because they have a natural tendency to emphasize pages within their own sites, and partly because of the consistent efforts of malicious sites containing spam. While this affects its usefulness,PageRank remains an important relevance fea-ture when combined with other ranking components.Many issues are related to PageRank computing:fromfiltering,weighting,and compressing the link data to the most advantageous modification of the underlying model tofinding fast methods of computing its solution.PageRank is based on a random surfer model[Page et al.98]and may be viewed as a stationary distribution of a Markov chain.The PageRank solution is a principal eigenvector of a linear system that can be found via the power method.We discuss this model and some technical modifications(teleportation mechanism)needed to safeguard the existence of a solution and the convergence of an iterative process in Section2.The sheer size of the World Wide Web presents a challenge to PageRank com-puting.Different developments to speed-up the power method include extrapola-tion,adaptive,and block structure methods.PageRank can also be reformulated in terms of a linear system.Linear systems have a rich arsenal of fast iterative methods.The effective solution of PageRank depends on a special enumeration of its nodes corresponding to a DAG structure.We discuss effective procedures for PageRank computing and its stability with respect to changes in the web graph and in the teleportation coefficient in Section3.Simultaneously with the random surfer model,a different but closely related approach,the HITS algorithm[Kleinberg99],was invented.This algorithm results in two authority vectors.PageRank results in one.More importantly, HITS is designed to work at query time,while PageRank is precomputed in advance of its utilization in a search.HITS,its modifications,and other later models leading to a concept of authority weights defined on a directed graphBerkhin:A Survey on PageRank Computing 75such as,for example,SALSA,as well as the use of such weights in applications other than web search relevance ranking are surveyed in Section 4.The PageRank vector serves as only one of the many relevance features for ranking web search results.Generic PageRank corresponds to a uniform tele-portation.In principle,PageRanks with different teleportations can be used.A nonuniform teleportation makes sense,since it leads to a topical or user-specific personalized PageRank [Page et al.98,Haveliwala 02b].Computing many such PageRanks is difficult,but in some cases it may be significantly optimized.Re-cent results in personalized PageRank are presented in Section 5.Remarkable efforts have been made to facilitate the PageRank computing in-frastructure.They range from special means to estimate PageRank convergence,to web graph analysis,to special data compression techniques.We present them in Section 6.2.Definitions and Fundamentals In this section we formally define PageRank and consider the theoretical foun-dations for its existence and for the convergence of the power iteration.2.1.Definitions The PageRank vector (PageRank citation ranking weigh t)was introduced in [Page et al.98]and [Brin and Page 98].It assigns to pages authority scores that are higher for pages with many in-links from authoritative pages with relatively few out-links .Let W (G,L )be a directed graph with vertices (or nodes)G that are web HTML pages and directed edges L that are hyperlinks.The edges L can be associated with a n ×n sparse adjacency matrix that we denote by the same symbol L ,where n =|G |is the number of web pages.An element L ij is equal to 1if a page i has a link i →j to a page j and is equal to 0otherwise.The out-degree of a node i is the number of outgoing links,deg (i )= j L ij .All the definitions can be generalized to a weighted directed graph,in which case L ij ≥0.A transition matrix is defined as P ij =L ij /deg (i )when deg (i )>0.For such i it is row-stochastic ,meaning that i -row elements sum to one.We set P ij =0when deg (i )=0.Consider the following random surfer model .A surfer travels along the directed graph.If at a step k he is located at a page i ,then at the next step k +1he moves uniformly at random to any out-neighbor j of i .Obviously,pages can be considered as n states of a Markov chain with a transition matrix P .Given a distribution p (k )= p (k )i of probabilities for a surfer to be at a page i at a step76Internet Mathematics k ,the probability for being at a page j on the next step is proportional to p (k +1)j = i →j p (k )i /deg (i )= i P ij p (k )i or p (k +1)=P T p (k ).(2.1)(If all pages have out-links,p (k +1)j is indeed a probability distribution.)An equi-librium state of transformation (2.1)correctly reflects our modeling objectives.The more incoming links a page has,the higher its importance.The importance of a page j is also proportional to the importance of its in-neighbors and in-versely proportional to the neighbor’s out-degrees.This motivates the following definition.Definition 2.1.A PageRank vector is a stationary point of the transformation (2.1)with nonnegative components (a stationary distribution for a Markov chain)p =Ap,A =P T .(2.2)Since we are interested in a probability distribution,the sum of the p -components is assumed to be one.We use an L 1norm x = |x i |as our standard norm.This definition has a problem.Matrix P has zero rows for dangling pages (pages that have no outgoing links),since for them deg (i )=0,while a Markov chain transition matrix has to be row-stochastic.Dangling pages serve as sinks or attractors :some p -volume comes to them but then cannot leave.Different remedies have been explored.One approach [Page et al.98,Section 2.7]suggests getting rid of dangling pages.The rationale is that these pages do not influence any other pages.Notice that deleting dangling pages generates new dangling pages.Another alternative considered in that paper is to renormalize P T p (k +1)so that it remains a probability distribution to compensate for sinks.The authors of [Kamvar et al.03b]consider adding deleted dangling pages on final iterations.Still another option advocated by [Jeh and Widom 02b]is to add a self-link to each dangling page and,after computation,to adjust the constructed PageRank.Another approach [Bianchini et al.03]suggests introducing an ideal page (sink)with a self-link to which each dangling page links.Along a similar line,see [Eiron et al.04].The most widely used device [Page et al.98,Haveliwala et al.03b,Kamvar et al.03c,Langville and Meyer 05]modifies the matrix P by adding artificial links that uniformly connect dangling pages to all G pages.Let v be a distribution,for example,v =e,e =e n =(1/n,...,1/n ),and let d be a dangling page indicator,d i =δ(deg (i ),0).Here,as usual,δ(a,b )=1when a =b and is zero otherwise.Consider the matrix P =P +d ·v T .(2.3)Berkhin:A Survey on PageRank Computing77 Rows corresponding to dangling pages are changed to v.The trick is popular since,as we will see shortly,it is also useful in a general situation of nondangling pages and is easy to compute.Aside from a particular approach,the decisive fact is that there are many dangling pages to deal with.(In reality,on a Yahoo!web graph from data collected in August,2004,and consisting of billions of pages, more than60%of pages have not been crawled,and therefore,these pages are dangling for sure.Around14%of actually crawled pages are also dangling.In a corresponding host graph62%of the pages are dangling pages.)When does the stationary point in(2.2)exist and when does the process in (2.1),called the power method or power iteration,converge to the solution?For a nonnegative row-stochastic matrix P independent of the initial distribution p(0) (elements are nonnegative and sum to one),the power method converges to a principal eigenvector p of A,p has positive elements,and the principal eigenvalue λ1=1is simple as long as two conditions are satisfied:1.The graph W(G,L)is strongly connected,meaning that there is a directedpath from each node to any other node.2.The graph W(G,L)is aperiodic,meaning that for any pages i and j thereare paths from i to j(with whatever repetitions)of any length l except for afinite set of lengths.While Condition2is guaranteed for the web,Condition1is routinely violated. For example,there are many pages without in-links.To satisfy both conditions, the trick used for dangling pages is repeated:the matrix is modified toP =cP +(1−c)E,E=(1,...,1)·v T,0<c<1.(2.4) Here all rows of E coincide with v.The transformation(2.4)conserves the stochastic property(it transforms a probability distribution into another proba-bility distribution).According to(2.4),from a nondangling page a surfer follows one of the local out-links with probability c and jumps to some j∈G with probability(1−c)v j.For this reason,v is known as a teleportation vector.A uniform v=e is usually employed,resulting in a uniform PageRank.However, in a personalization context nonuniform jumps are used.Consequently,v is also known as a personalization vector.Different recipes for choosing c range from 0.85[Page et al.98,Kamvar et al.03b]to0.99[Haveliwala et al.03b,Kamvar et al.03c].Values0.85–0.9are used in practice.The original P is sparse.We still can benefit from this fortunate fact after the transformations(2.3)and(2.4),since the multiplication with matrix P can be carried out[Kamvar et al.03c]in terms of the original P:the vector78Internet Mathematicsy=Ax=P T x can be computed by the following code:y=cP T x,γ= x − y ,y=y+γv.(2.5)General information related to PageRank can be found in a survey article [Langville and Meyer04a].It contains some explanatory examples corresponding to small graphs.Henzinger[Henzinger00]also reviews related issues.2.2.Related TheoryThe Perron-Frobenius theorem for positive matrices is proved in[Axelsson94, Chapter4].Under Condition1of strong connectivity,it guarantees thatλ1is simple and that the corresponding eigenvector has positive components.The fact that the eigenvalueλ1=1is a consequence of stochasticity.Condition2 of aperiodicity relates not to the existence of a solution but to the convergence of(2.1),which is not guaranteed in its absence.For example,for a strongly connected two-node graph a↔b that does not satisfy the aperiodicity condition (since all paths from a to b have odd lengths),the iterations(2.1)for p(0)= (q,1−q)oscillate without convergence between p(0)and p(1)=(1−q,q).A matrix is reducible if after a permutation of coordinates into two nonempty groups,first of size d and second of size n−d,it has a block triangular formP=P11P120P22,(2.6)where dim(P11)=d×d,dim(P22)=(n−d)×(n−d).It is called irreducible otherwise[Axelsson94,Definition4.2,Theorem4.2].An adjacency matrix and a transition matrix of a directed graph are irreducible if and only if the associated graph is strongly connected[Axelsson94,Theorem4.3].If the graph W is not strongly connected,then factorization of its strongly connected components (SCC)leads to a directed acyclic graph(DAG),discussed in Section3.4.Every DAG’sfinal node(i.e.,having no out-links)corresponds to a SCC that serves as an attractor in a corresponding Markov chain.In plain language all the p-volumes gradually get sucked into such states.Condition1of strong connectivity is necessary for the existence of a unique positive solution of(2.2).If P is reducible,then the system(2.2)either has multiple positive solutions,if P12=0 (combinations of solutions for two blocks),or has no positive solution(since p1=P T11p1and P11is not stochastic if P12=0).So far,we only knowλ1.A less recognized fact is that eigenvalues of P and P are interrelated:λ1(P )=λ1(P )=1,λj(P )=cλj(P )for j>1[LangvilleBerkhin:A Survey on PageRank Computing79 and Meyer05].In particular,the second eigenvalueλ2(P )≤c.In reality,for a web graphλ2(P )=c.This amazing fact,first proven by[Haveliwala and Kamvar03],is important for extrapolation.It has the astonishing consequence that a teleportation mechanism introduced to secure strong connectivity also determines the convergence rate.The authors also prove that ifA=(cP +(1−c)E)T,E=(1,...,1)·v T,(2.7)where P is row stochastic and has at least two irreducible closed subsets,then A has a second eigenvalueλ2=c.The web graph W(G,E)has many strongly connected components[Kumar et al.00].A proof can also be found in[Langville and Meyer05].Further information about random walks on directed graphs can be found in [Motwani and Raghavan95,Ahuja et al.93,Lov´a sz96].Not all of the theory is relevant to PageRank.The Markov model defined by afinite graph with a moderate number of outgoing links has its own intrinsic features.It has been observed[Page et al.98]that the web graph is expanding,meaning that the number of vertices reachable from a(not too large)subset exceeds by a factor αthe cardinality of the initial set.A graph has a high expansion factor if and only if its principal eigenvalue is sufficiently larger than the second eigenvalue (“eigenvalue gap”).A random walk on a graph is rapidly-mixing if iterations converge in log(|G|)time,which in turn depends on the principal eigenvalue separation.As we explained,pages G can be viewed as Markov chain nodes with a transi-tion matrix P.The theory of Markov chains includes the important concept of ergodic states.Let N(i,k)be the number of times a surfer visited page i during thefirst k steps.The solution of Equation(2.2)is related to N.When strong connectivity and aperiodicity are in place,the Ergodic theorem[Motwani and Raghavan95,Theorem6.2]says that each state is ergodic:N(i,k)/k=p i.limk→∞The transition matrix P is related to an adjacency matrix L by the formula P=D−1·L,where a diagonal matrix D={deg(i)}contains out-degrees. Generalizations of this formulation are discussed in[Ding et al.01].Brandes and Cornelson[Brandes and Cornelsen03]present a general view of other similar constructions for directed graphs;independently of PageRank that induces an order over G,they also describe a method of spectral graph layout resulting in another ordering of undirected graphs.The method relates to the important concepts of the Laplacian matrix and the minimum energy state Fiedler vector.80Internet Mathematics PageRank can be formulated in a form alternative to a spectral problem. Since(1,..,1)T p= p =1,the PageRank vector,in addition to an eigensystem p=Ap,A=P T,also satisfies the equationp=cP T p+(1−c)v=cP T p+(1−c+cs)v,(2.8) where s=s(p)=d T·p is a“dangling sink.”The actual value of s affects the solution only through rescaling it.Therefore,any s,for example,s=0(that results in the spectral eigensystem solution in the absence of dangling pages), can be used.This fact has important implications.We elaborate on this topic in Section3.5.Finally,modifying P with teleportation can be avoided[Langville and Meyer05,Tomlin03]if in(2.7)G is augmented with an additional ideal sink node n+1:˜p=˜P˜p,˜P=cP (1−c)(1, (1)v T0,˜p=p(1−c).This construction is also useful in handling dangling pages[Eiron et al.04,Lee et al.03].ageThe traditional application of PageRank is ranking web search results.The ap-pealing quality of PageRank is that it is available in query time.Since PageRank only uses web graph connectivity and is query-independent,it has to be blended with other features,including query-dependent features.Along with PageR-ank,another similar algorithm reviewed below and called HITS[Kleinberg99] works in query time.The benefits of PageRank are best for under-specified (broad topic)queries,for example“University”[Page et al.98].In other words, it is best for queries with very high recall based on a straightforward all-term inclusion procedure.From an application standpoint,PageRank has some prob-lems.For example,an artificially high rank is assigned to copyright warnings, disclaimers,and highly interlinked mailing archives.Another problem is a po-tential topic drift.Different remedies were suggested,starting with an insightful article[Bharat and Henzinger98](which we get back to in the context of HITS). The major issue is how to merge connectivity and content data.Internet search engines blend PageRank with other relevance features using proprietary ranking formulas and other techniques,and any statistics reflecting its significance in this regard are not public.There is no certainty about the importance of per-sonalized PageRank either,except for the fact that no other obvious link-based personalization feature exists.HostRank,PageRank constructed over the host graph,is another useful relevance feature.Berkhin:A Survey on PageRank Computing81Algorithm1.(Poor man’s PageRank algorithm.)Input:Given a transition matrix P,a teleportation vector v,and a coefficient cOutput:Compute PageRank pbeginInitialize p(0)=v,k=0repeatp(k+1)=cP T p(k)γ= p(k) − p(k+1)p(k+1)=p(k+1)+γvδ= p(k+1)−p(k)k=k+1untilδ<endreturn p(k)Before moving further we would like to present the reader with the“Poor man’s PageRank algorithm”implementation of the power iteration that takes (2.5)into account;see Algorithm1.3.Acceleration of ConvergenceAs we explained,our goal is tofind a stationary point p for an eigensystemp=Ap,A=P T.(3.1) We start with some distribution p(0)(e.g.,p(0)=e)and use the power iterationp(k+1)=Ap(k)(3.2) to compute the eigenvector associated with the principal eigenvalueλ1=1. According to(2.5),A-multiplication successfully exploits the sparse nature of the transition matrix P(on average,there are between7and20out-links per page depending on the pruning used)and thus,a major iteration has O(n) computational complexity.This is good news;however,the graph size n is very large.We use a simple L1stopping criterion for transparency,but we will return to this issue in Section6.1.Before we investigate more complex algorithms,what is the convergence rate of the power iteration?We already know thatλ1(P)=1,λ2(P)=c.It is easy to see that a convergence rate of the power iteration process for any P82Internet Mathematics isλ2(P)/λ1(P)or c in our case.We would like to provide somewhat simpler reasoning specifically related to PageRank that leads to a slightly weaker result. Following[Bianchini et al.03],if we subtract from Equation(2.8),p=cP T p+ (1−c)v,its iterative version(3.2),we getp−p(k+1)=cP T(p−p(k))=c kP Tk(p−p(0)),and hence,since P T ≤1,the rate of convergence is bounded by c.The number of iterations k≈log( )/log(c)needed to achieve -accuracy is independent of the graph.Next we review different algorithmic methods to accelerate the power method (3.2)and other methods of computing PageRank.In Section6we talk about infrastructural means to achieve computational efficiently.Since a web graph is volatile,there is a natural limit on how accurate we would like to be.Also,the whole adventure with computing PageRank makes sense only if the ideal object is stable.This is also one of our concerns in this section.3.1.Extrapolation MethodsExtrapolation methods are based on the expansion of iterates p(k)in a seriesp(k)=u1+λk2u2+···+λk m u m+···,(3.3) where terms u m are(not orthonormal)eigenvectors of A(we utilize thatλ1=1). These methods try to improve approximation of the ideal solution u1by p(k) through clever suppression of the higher terms.More specifically,while com-puting power iterations,from time to time,we construct a linear combination of several iterates that exactly eliminates one or more harmonics after the veryfirst term and regard the remaining tail as approximately relatively small.In doing so,we assume that1<λ2≤λ3≤...≤λm...and carefully analyze coefficients of thefirst m terms in series(3.3).The constructed combination is used as a closer starting point for further iterations.Kamvar,Schlosser,and Garcia-Molina[Kamvar et al.03c]present a variety of extrapolation methods.The simplest Aitken method aims at eliminating the second sub-principal term.Setting m=2and truncating the tail(denoted by “...”),we getp(k−2)=u1+u2+...p(k−1)=u1+λ2u2+...p(k)=u1+λ22u2+....(3.4)These are three equations with three unknowns,and we can eliminate two of them,u2andλ2.This is what the Aitken method does.After an initialBerkhin:A Survey on PageRank Computing83 speedup,the acceleration is not significant.They also suggest a straightfor-ward generalization—a quadratic extrapolation that is based on a similar set of equations for m=3,p(k−3)=u1+u2+u3+...p(k−2)=u1+λ2u2+λ3u3+...p(k−1)=u1+λ22u2+λ23u3+...p(k)=u1+λ32u2+λ33u3+....(3.5)Based on some matrix algebra,the authors developed formulas to eliminate un-knowns corresponding to the second and third terms.Both the Aitken method and quadratic extrapolation are applied periodically within(3.2).Reported ex-perimental speedup for quadratic extrapolation is59%.The presented extrapolation techniques were immediately superseded by an elegant discovery:λ2(P )=c[Haveliwala and Kamvar03](see Section2.2).The rate of convergence p(k+1)−p(k) / p(k)−p(k−1) →|λ2/λ1|=c is confirmed by experiments.In the Markov chain context,|λ1|−|λ2|=1−c is known as stability.The authors also relate eigenvectors corresponding toλ2=c to spam detection.In extrapolation methods,knowingλ2has a profound consequence: we do not need to bother computing it in Equations(3.4)and(3.5). Extrapolation methods developed in[Haveliwala et al.03b]utilize this dis-covery.A d extrapolation starts with a representation similar to that in Equa-tion(3.5)under the assumption that m=d+1andλj for j=2:d+1form a system of d-roots of c d.This means,in particular,thatλd j=c d.For example, for d=2,λ2=c andλ3=−c.We see now thatp(k−d)=u1+u2+···+u d+1+...p(k)=u1+λd2u2+···+λd d+1u d+...(3.6)and,hence,the equation p new=u1=p(k)−c d p(k−d)1−c d becomes a viable extrap-olation.It is suggested to be used periodically in conjunction with the power method(3.2).For c=0.85the best speedups correspond to d=4(25.8%)and d=6(30%).In our experience this extrapolation works as predicted with large graphs.3.2.Adaptive MethodThere is no need to compute PageRank components exactly.How much accuracy is actually required is an intriguing question that we revisit in Section6.1.What-ever the required accuracy is,there is a disparity in the magnitudes of PageRank components:authorities of pages differ dramatically,and most values are small84Internet Mathematics (the lower bound (1−c )/n is achieved on pages without in-links).A less trans-parent observation is that small components converge much faster even when measured on a relative scale i (k )= p (k +1)i −p (k )i /p (k )i [Kamvar et al.03a].For example,the authors show that,on a graph of 280,000nodes (3M links)for c =0.85,around 80%of PageRank components converged ( i (k )< =10−3)in less than 15iterations and their average magnitude was 0.37of the average magnitude of the remaining components.The proposed acceleration,adaptive method ,simply stops iterating on already converged components.Assume that p =(p N ,p C )is a split of p into m not yet converged and (n −m )already converged components.Let A N and A C be the corresponding m ×n and (n −m )×n submatrices of A .Then,we have p (k +1)N p (k +1)C = A N A C · p (k )N p (k )C or p (k +1)N =A N p (k )p (k +1)C =p (k )C ,(3.7)where p (k )C ≈A C p (k )and we only need to iterate over the nonconverged p N .Here,dim(A N )=m ×n and dim(A C )=(n −m )×n .When m becomes smaller,the size of A N becomes smaller as well,and many computations are avoided.The modified algorithm presented in the paper actually deletes the links corresponding to converged pages from a sparse matrix A .This means decomposition A N =[A NN ,A NC ].The explicit reordering of A is important,and a smart strategy is used to enable housekeeping.The savings are about 20%in overall time.Little hope for the reordering of a several-billion-node web graph currently exists.This restricts the application of this method to smaller graphs such as,for example,a host graph.The adaptive method requires slightly more major iterations k to achieve convergence.3.3.Block Structure Method The web has a particular structure.On the very primitive level,there are many more links within the same domains (hosts)than between pages of different do-mains (hosts).Kamvar et al.brilliantly exploit this idea [Kamvar et al.03b].The intuition behind the idea is that blocks roughly represent the whole graph,but there are many fewer blocks than pages and they are more interconnected.Therefore,computing “aggregate”PageRank is easy.If we are also able to ap-proximate PageRank within blocks that is again a series of smaller problems,then we may find a good starting point from which to ignite a full graph algo-rithm.The authors compute PageRank via the algorithm blockRank in several steps.A slightly updated version of this development is presented in Algorithm 2.。