A proposed DAQ system for a calorimeter at the International Linear Collider

- Format: pdf

- Size: 275.81 KB

- Pages: 14

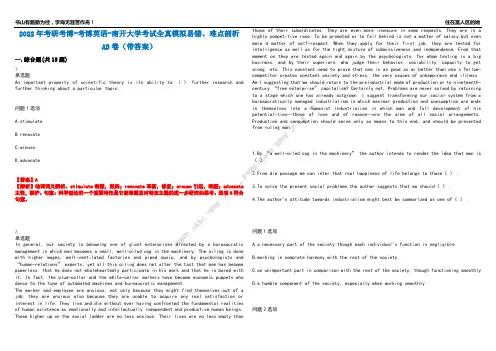

National Self-Study Examination, April 2018: Selected Readings in English for Science and Technology (course code: 00836)

PART A: VOCABULARY

I. Directions: Add the affix to each word according to the given Chinese, making changes when necessary. (8%)

1. artificial 人工制品 1. __________________
2. fiction 虚构的 2. __________________
3. coincide 巧合 3. __________________
4. organic 无机的 4. __________________
5. sphere 半球 5. __________________
6. technology 生物技术 6. __________________
7. formid 可怕的 7. __________________
8. harmony 和谐的 8. __________________

II. Directions: Fill in the blanks, each using one of the given words or phrases below in its proper form. (12%)

stand for    exposure to    at work    on the edge of    short of
end up    focus on    a host of    give off    a sense of
in memory of    comply with

9. We were on a hill, right _________ the town.
10. UNESCO _________ United Nations Educational, Scientific and Cultural Organization.
11. I am a bit _________ cash right now, so I can’t lend you anything.
12. The milk must be bad, it’s _________ a nasty smell.
13. The traveler took the wrong train and _________ at a country village.
14. The material will corrode after prolonged _________ acidic gases.
15. _________ problems may delay the opening of the conference.
16. The congress opened with a minute’s silence _________ those who died in the struggle for the independence of their country.
17. Tonight’s TV program _________ homelessness.
18. He promised to _________ my request.
19. Farmers are _________ in the fields planting.
20. She doesn’t sleep enough, so she always has _________ of fatigue.

III. Directions: Fill in each blank with a suitable word given below. (10%)

birth    to    unmarried    had    premature    among    were    between    such    past

The more miscarriages or abortions a woman has, the greater are her chances of giving birth to a child that is underweight or premature in the future, the research shows. Low birthweight (under 2500g) and premature birth (less than 37 weeks) are two of the major contributors to deaths (21) newborn babies and infants. Rates of low birthweight and (22) birth were highest among mothers who (23) black, young or old, poorly educated, and (24). But there was a strong association (25) miscarriage and abortion and an early or underweight (26), even after adjusting for other influential factors, (27) as smoking, high blood pressure and heavy drinking. Women who had (28) one, two, or three or more miscarriages or abortions in the (29) were almost three, five, and nine times as likely to give birth (30) an underweight child as those without previous miscarriages or abortions.

21. _________ 22. _________ 23. _________ 24. _________ 25. _________
26. _________ 27. _________ 28. _________ 29. _________ 30. _________

PART B: TRANSLATION

IV. Directions: Translate the following sentences into English, each using one of the given words or phrases below. (10%)

precede    replete with    specialize in    incompatible with    suffice for

31. 上甜食前,每个用餐者都已吃得很饱了。

High school entrance exam English: in-depth reading comprehension on classic scientific experiments and theories, 20 questions

1

<Background passage>

Isaac Newton is one of the most famous scientists in history. He is known for his discovery of the law of universal gravitation. Newton was sitting under an apple tree when an apple fell on his head. This event led him to think about why objects fall to the ground. He began to wonder if there was a force that acted on all objects.

Newton spent many years studying and thinking about this problem. He realized that the force that causes apples to fall to the ground is the same force that keeps the moon in orbit around the earth. He called this force gravity.

The discovery of the law of universal gravitation had a huge impact on science. It helped explain many phenomena that had previously been mysteries. For example, it explained why planets orbit the sun and why objects fall to the ground.

1. Newton was sitting under a(n) ___ tree when he had the idea of gravity.
A. orange
B. apple
C. pear
D. banana

Answer: B.

English vocabulary related to the digestive system

The digestive system is a complex network of organs and tissues responsible for breaking down food into nutrients that the body can absorb and use. It involves a series of physical and chemical processes that occur in the mouth, esophagus, stomach, small intestine, large intestine, and accessory organs such as the liver, pancreas, and gallbladder.

Here are some key English vocabulary terms related to the digestive system:

**1. Mouth and Teeth:**

* **Mouth:** The entry point for food intake.
* **Teeth:** Hard, calcified structures used for chewing and grinding food.
* **Tongue:** A muscular organ that moves food around in the mouth and helps in swallowing.
* **Saliva:** A watery secretion that moistens food, begins the chemical breakdown of carbohydrates, and helps in swallowing.

**2. Esophagus:**

* **Esophagus:** A muscular tube that carries food from the mouth to the stomach.
* **Peristalsis:** The rhythmic muscular contractions that propel food through the esophagus.

**3. Stomach:**

* **Stomach:** A hollow, muscular organ that stores food, secretes gastric juices, and mixes food with these juices to form a semisolid mass called chyme.
* **Gastric Juice:** A mixture of hydrochloric acid, enzymes, and mucus secreted by the stomach.
* **Hydrochloric Acid:** A strong acid that helps in the digestion of protein and creates an acidic environment that kills bacteria.
* **Enzyme:** A biological catalyst that speeds up chemical reactions in the body, including the breakdown of food into nutrients.
* **Mucus:** A slippery, viscous substance that coats the lining of the stomach, protecting it from the corrosive effects of gastric juice.

**4. Small Intestine:**

* **Small Intestine:** A long, coiled tube that continues from the stomach and is the primary site of digestion and absorption of nutrients.
* **Duodenum:** The first part of the small intestine, closest to the stomach.
* **Jejunum:** The middle part of the small intestine.
* **Ileum:** The final part of the small intestine, leading to the large intestine.
* **Villi:** Tiny, finger-like projections on the inner lining of the small intestine that increase its surface area for absorption.
* **Microvilli:** Minute projections on the surface of the villi that further enhance the absorption capacity of the small intestine.

**5. Large Intestine:**

* **Large Intestine:** A wider, shorter tube that absorbs water and forms feces.
* **Colon:** The major part of the large intestine.
* **Rectum:** The final, straight section of the large intestine, leading to the anus.
* **Feces:** Solid waste product formed in the large intestine and expelled from the body through the anus.

**6. Accessory Organs:**

* **Liver:** A large organ that produces bile, metabolizes fats, stores vitamins and minerals, and detoxifies the blood.
* **Bile:** A yellowish fluid produced by the liver and stored in the gallbladder. It helps in the digestion of fats.
* **Gallbladder:** A small, pear-shaped sac that stores bile until it is needed for digestion.
* **Pancreas:** A gland that produces enzymes that break down carbohydrates, fats, and proteins, as well as hormones that regulate blood sugar levels.

**7. Digestive Processes and Functions:**

* **Digestion:** The process of breaking down food into smaller molecules that can be absorbed by the body.
* **Absorption:** The process of nutrients passing through the walls of the small intestine into the bloodstream.
* **Metabolism:** The set of chemical reactions that occur in the body to convert food into energy and building blocks for cells and tissues.

These are just a few of the many terms related to the digestive system. The digestive system is a highly complex and interconnected network of organs and processes, and its efficient functioning is crucial for maintaining overall health and well-being. Disorders of the digestive system can lead to a range of symptoms and health issues, making it important to maintain a healthy diet and lifestyle to promote optimal digestive health.

AN INTRODUCTION TO THE AMERICAN LEGAL SYSTEM

The American legal system is complex and multifaceted, spanning a wide range of laws and regulations. It is crucial to understand the different aspects of the legal system in order to navigate it effectively, whether you are a legal professional or an ordinary citizen. In this guide, we will provide an overview of the American legal system and some practice questions to help you understand the key concepts.

Overview of the American Legal System

The American legal system is based on the principle of federalism, which means that the federal government shares power with individual state governments. As a result, laws can vary from state to state, which can sometimes lead to confusion and inconsistency.

The Constitution

The Constitution is the supreme law of the land in the United States. It outlines the structure of the federal government and protects the rights of individuals. The Constitution is made up of seven articles and 27 amendments.

The Legislative Branch

The legislative branch is responsible for creating the laws that govern the country. It is made up of two parts: the Senate and the House of Representatives. The Senate has 100 members, two from each state, and the House of Representatives has 435 members, with the number of representatives from each state determined by its population.

The Executive Branch

The executive branch is responsible for enforcing the laws that the legislative branch creates. It is headed by the President of the United States and also includes the Vice President, the Cabinet, and various government agencies.

The Judicial Branch

The judicial branch is responsible for interpreting the laws and deciding cases that arise from them. It is made up of a system of federal and state courts. At the federal level, the Supreme Court is the highest court in the land and has the final say on questions of federal law, including the Constitution.

Practice Questions

1. What is federalism and how does it impact the American legal system?
2. Name the three branches of government and briefly describe their roles.
3. What is the Supreme Court and what is its role in the American legal system?
4. What is the Constitution and why is it important to the legal system?
5. How are laws created in the American legal system?

Final Concept Paper

Q11: Q&As on Selection and Justification of Starting Materials for the Manufacture of Drug Substances

Focus on chemical entity drug substances

dated 22 October 2014

Endorsed by the ICH Steering Committee on 10 November 2014

Type of Harmonisation Action Proposed

An Implementation Working Group (IWG) is proposed to prepare a Questions and Answers (Q&A) document for ICH’s Development and Manufacture of Drug Substances (Q11) Guideline to provide clarification on what information about the selection and justification of starting materials should be provided in marketing authorisation applications and/or Master Files.

The IWG will provide clarification of the existing principles and will not re-open ICH Q11. As appropriate, references will be made to existing ICH Guidelines, e.g., ICH Q7, ICH Q9, ICH Q10, ICH Q11 and ICH M7, to ensure continuity across all ICH Quality Guidelines. The focus of the Q&A document will be on chemical entity drug substances as that is where most of the differences of opinion have been experienced.

Statement of the Perceived Problem

Evaluation of information related to the manufacturing process and controls for drug substances is an important part of marketing authorisations. Decisions made about the proposed starting material(s) determine what expectations apply to the Quality-related information for both pre-market assessment and for post-market changes. The acceptability of the applicant's proposed starting material also has implications for Good Manufacturing Practices (GMPs), process validation requirements, and inspection-related activities (as outlined in ICH Q7). While it is recognised that ICH Q11 provided good scientific guidance when published in 2012, differences in the interpretation of that guidance are causing problems for industry and regulators.

Issues to be Resolved

Examples of issues that a Q&A document might help resolve include, but are not limited to the following:

∙ Significant regional differences between regulatory authorities in terms of:
    o Which aspects contribute to the potential unsuitability of starting materials (e.g., number of distinct chemical steps separating starting material(s) from final drug substance, potentially mutagenic impurities, stereochemistry);
    o The amount of regulatory attention given to steps prior to the proposed starting material (e.g., how much of the synthesis of the proposed starting material should be disclosed as part of the justification for the starting material);
    o What information is necessary to support the justification of the starting material.
∙ Significant resources are frequently used to resolve differences of opinions (regulatory and industry);
∙ The information provided by industry can be inadequate for regulators to evaluate whether the proposed starting material, manufacturing process, and control strategy provide sufficient assurance of the quality of the drug substance (especially if the proposed starting material occurs late in the manufacturing process);
∙ Additional burden on industry associated with conservative approaches to defining starting material can include, for example:
    o Validating early steps before the proposed starting material;
    o Evaluating every step of the process for known and potential impurities with the same intensity as the final few steps;
    o Expecting steps prior to proposed starting material to be manufactured under GMP conditions.

Background to the Proposal and Issues

Q11 provided guiding principles to be considered in the selection and justification of starting materials for the manufacture of drug substances. It has become apparent, based on public workshops, symposia, and industry experience with global submissions, that differences of opinion can arise between regulators and industry about how those principles should be applied in specific situations. While it is recognised that each dossier needs to be judged on its own merit, further clarification of the principles of Q11 through a Q&A document (including perhaps, case studies) could help address differences in understanding and interpretation.

The Q&A should provide several benefits for industry, regulators, and patients:

∙ Improvement in global harmonisation regarding the selection and justification of starting materials used in the manufacture of drug substances for new and generic applications;
∙ Clarification regarding the relationship between the selection of appropriate starting material and GMP considerations, control strategy, length of synthetic process, and impact of manufacturing steps on DS quality. Clarification: ICH Q7 / GMP is not in scope and not for this IWG;
∙ Clarification on the type of information that industry should provide in submissions to justify starting material selection;
∙ Clarification of expectations for lifecycle management of starting material.

Type of Expert Working Group and Resources

The proposed Q&A document will provide clarification to complement ICH Quality Guidelines for chemical entity drug substances. In general, biotechnological/biological drug substances will not be within scope; however, the Q&A may clarify special cases. The working group should include representatives from the ICH official members (EU, EFPIA, FDA, PhRMA, MHLW, JPMA, Health Canada and Swissmedic). One member can also be nominated by WHO Observer, EDQM, WSMI, IGPA, and API industry as well as RHIs, DRAs/DoH (if requested).

The primary mechanism for advancing the work of the IWG will be through teleconferences. However, one face-to-face meeting of the IWG may be requested to meet the tight timeline proposed. Given the time zone challenges for scheduling within business hours, the complex nature of this topic, and the anticipated challenges in reaching harmonisation, it will be difficult to complete the Q&A document within the compressed timeline using only teleconferencing and email. A single face-to-face meeting at an ICH meeting would approximately double the amount of time available for discussions between the full IWG. Additionally, face-to-face discussions are more effective than teleconferences especially for members who must participate using a second language.

Timing

Approval of Topic/Rapporteur & IWG Defined: November 10, 2014
First IWG Meeting (teleconference): November 2014
Step 2a/b document to present to SC: November 2015
Step 4 document sign-off: TBD

International Conference on Harmonisation of Technical Requirements for Registration of Pharmaceuticals for Human Use
ICH Secretariat, Chemin Louis-Dunant 15, P.O. Box 195, 1211 Geneva 20, Switzerland

The problem is the heart of mathematics. (Halmos)

He who seeks for methods without having a definite problem in mind seeks for the most part in vain. (Hilbert)

The problem solver may do creative work even if he does not succeed in solving his own problem; his effort may lead him to means applicable to other problems. Then the problem solver may be creative indirectly by leaving a good unsolved problem which eventually leads others to discovering fertile means. (Pólya)

One of the virtues of a good problem is that it generates other good problems. (Pólya)

Each problem that I solved became a rule which served afterwards to solve other problems.

ORIGINAL PAPER - PRODUCTION ENGINEERING

Effect of viscosity and interfacial tension of surfactant–polymer flooding on oil recovery in high-temperature and high-salinity reservoirs

Zhiwei Wu • Xiang’an Yue • Tao Cheng • Jie Yu • Heng Yang

Received: 22 April 2013 / Accepted: 28 August 2013 / Published online: 8 September 2013
© The Author(s) 2013. This article is published with open access at

Abstract This study aims to analyze the influence of viscosity and interfacial tension (IFT) on oil displacement efficiency in heterogeneous reservoirs. Measurement of changes in polymer viscosity and IFT indicates that viscosity is influenced by brine salinity and shearing in the pore media and that IFT is influenced by salinity and the interaction between the polymer and surfactant. High concentrations (2,500 and 3,000 mg/L) of polymer GLP-85 are utilized to reduce the effect of salinity and maintain high viscosity (24 mPa·s) in formation water. After shearing in the pore media, polymer viscosity is still high (17 mPa·s). The same polymer viscosity (17 mPa·s) is utilized to displace oil, whose viscosity is 68 mPa·s, at high temperature and high pressure. The IFTs between 0.2% DWS surfactant solutions in reservoir water of different salinities and crude oil droplets are all below 10⁻² mN/m, with only a slight difference. Surfactant DWS exhibits good salt tolerance. In the surfactant–polymer (SP) system, the polymer solution prolongs the time to reach ultra-low IFT. However, the surfactant only has a slight effect on the viscosity of the SP system. SP slugs are injected after water flooding in the heterogeneous core flooding experiments. Recovery is improved by 4.93–21.02% of the original oil in place. Furthermore, the core flooding experiments show that the role of lowering the mobility ratio is more significant than that of decreasing the IFT of the displacing agent; both of them must be optimized by considering the injectivity of the polymer molecules, emulsification of oil, and the economic cost. This study provides technical support in selecting
and optimizing SP systems for chemical flooding.

Keywords Chemical flooding · Viscosity · Interfacial tension · Oil displacement efficiency · Salinity

Abbreviations
IFT Interfacial tension, mN/m
GLP-85 The polymer, modified polyacrylamides, whose relative molecular mass is 1.75 × 10⁷
OOIP Original oil in place
EOR Enhanced oil recovery
SP Surfactant–polymer
ASP Alkali–surfactant–polymer
DWS The surfactant, an anionic sulfate, whose average relative molecular weight is 560 to 600
PV Injection pore volume
CMC Critical micelle concentration

This Project (2011ZX05009-004) was supported by National Natural Science Foundation of China.

Z. Wu (✉) · X. Yue · J. Yu · H. Yang
MOE Key Laboratory of Petroleum Engineering in China University of Petroleum, Beijing 102249, China
e-mail: wuzhiwei1987@

Z. Wu · X. Yue · J. Yu · H. Yang
Petroleum Engineering Faculty, China University of Petroleum, Beijing 102249, China

T. Cheng
Shell China Exploration and Production Co. Ltd, Beijing 100000, China

J Petrol Explor Prod Technol (2014) 4:9–16
DOI 10.1007/s13202-013-0078-6

Introduction

Polymer flooding has been employed successfully in Daqing Oilfield in China for decades; it has contributed an incremental oil recovery of more than 10% of original oil in place (OOIP) after water flooding (Wang et al. 2009). Alkaline–surfactant–polymer (ASP) flooding can effectively reduce residual oil saturation by reducing interfacial tension (IFT) and the mobility ratio between the water phase and oil phase (Clark et al. 1988; Meyers et al. 1992; Vargo et al. 1999). Alkali is added in ASP flooding to reduce the amount of surfactant required: it competes with the surfactant for adsorption and reacts with petroleum acids in the crude oil to generate a new surfactant (Pope 2007; Rivas et al. 1997). However, the use of alkali has introduced problems in the injection of the ASP solution. These problems include the deposition of alkali scales in the reservoir and bottom hole (Hou et al. 2005; Bataweel and Nasr-El-Din 2011; Jing et al. 2013), difficulty of treating the produced water (Deng et al. 2002), and
reduction of the viscosity of the combined ASP slug (Wang et al. 2006; Nasr-El-Din et al. 1992). Many methods were introduced to solve these problems. Elraies (2012) proposed a new polymeric surfactant and conducted a series of experiments to evaluate this surfactant in the absence and presence of alkali. Some studies (Maolei and Yunhong 2012; Flaaten et al. 2008; Berger and Lee 2006) replaced strong alkalis with weak alkalis, such as sodium carbonate, sodium metaborate, and organic alkaline, to reduce their effect on the viscosity of the ASP slug. Alkali-free SP flooding avoids the drawbacks associated with alkali. Surfactants with concentrations higher than the critical micelle concentration (CMC) can achieve ultra-low IFT. However, such surfactants are expensive. The use of a hydrophilic surfactant mixed with a relatively lipophilic surfactant or a new surfactant was also investigated (Rosen et al. 2005; Aoudia et al. 2006; Cui et al. 2012). However, studies on SP flooding only focused on the screening and evaluation of the polymer and surfactant and their interaction. Reduction in mobility ratio and IFT is influenced by reservoir brine salinity, reservoir temperature, concentration of chemical ingredients and oil components, and other factors (Gaonkar 1992; Ferdous et al. 2012; Liu et al. 2008; Gong et al. 2009; Cao et al. 2012; Zhang et al. 2012). Displacement performance is affected by the interaction of the physical properties of the reservoir and those of the fluid. The primary influencing factors must be identified. SP flooding can enhance recovery because of its capability to control viscous fingering and reduce IFT. In formulas involving the capillary number, ultra-low IFT between the binary system and oil drop in a homogeneous core yields the lowest residual oil saturation and the highest oil recovery.
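The link between IFT and the capillary number can be made concrete with a small calculation. The sketch below uses the common definition N_c = μv/σ (displacing-phase viscosity times Darcy velocity, divided by IFT), which the article mentions but does not write out. The velocity and the brine/oil IFT before surfactant are illustrative assumptions, not values from the paper; only the 17 mPa·s viscosity and the 10⁻² mN/m ultra-low threshold are taken from the text.

```python
def capillary_number(viscosity_pa_s: float, velocity_m_s: float, ift_n_m: float) -> float:
    """Return the dimensionless capillary number N_c = mu * v / sigma."""
    if ift_n_m <= 0:
        raise ValueError("interfacial tension must be positive")
    return viscosity_pa_s * velocity_m_s / ift_n_m


# Inputs in SI units.
MU = 17e-3         # Pa·s: 17 mPa·s, the sheared polymer viscosity quoted in the abstract
V = 3.5e-6         # m/s: ASSUMED Darcy velocity (roughly 1 ft/day), not from the paper
IFT_WATER = 30e-3  # N/m: ASSUMED brine/oil IFT without surfactant, not from the paper
IFT_SP = 1e-5      # N/m: 10^-2 mN/m, the ultra-low IFT threshold named in the text

nc_water = capillary_number(MU, V, IFT_WATER)
nc_sp = capillary_number(MU, V, IFT_SP)

print(f"N_c without surfactant: {nc_water:.2e}")
print(f"N_c at ultra-low IFT:   {nc_sp:.2e}")
print(f"increase factor:        {nc_sp / nc_water:.0f}")
```

Because velocity and viscosity are held fixed here, the increase in N_c is simply the ratio of the two IFT values; in a real flood the viscosity and velocity terms change as well, which is why the article treats mobility control and IFT reduction as separate effects.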
In a heterogeneous core with high permeability, sweep efficiency has a larger influence on oil recovery than displacement efficiency. Highest oil recovery can be achieved under optimum IFT and not under the lowest IFT of the binary system. However, this concept (Wang et al. 2010) is based on light oil reservoirs with high permeability and low temperature. Dagang Oilfield is a reservoir with medium–low permeability characterized by high temperature, significant heterogeneity, and high brine salinity. These harsh conditions pose a significant challenge for SP flooding and demand different IFTs and viscosities of the SP system.

Based on the reservoir condition of Dagang Oilfield, static experiments were conducted to study the parameters that degrade the viscosity and IFT of the SP system. Combined with core flooding, the respective effects of viscosity and IFT in the binary system on displacement efficiency were investigated. The results of this study provide insights into chemical screening, slug optimization, and injection methods in the field.

Equipment and materials

Equipment

The main equipment for the experimental flow is shown in Fig. 1. The heterogeneous core holder is 30 cm long. The core flooding model is 30 cm long, 4.5 cm wide, and 4.5 cm thick. Each layer of the model is 1.5 cm thick. Other equipment includes a HAAKE RheoStress 6000 rheometer, a Brookfield DV-II+ viscometer, several high-pressure intermediate containers, an automatic measuring cylinder, a thermostat oven, a pressure collection system, and a constant-flow pump. Water was pumped into the high-pressure intermediate containers at a set rate, and formation water and crude oil were forced into the core under the resulting pressure difference. The 30 cm long core holder held the core under a confining pressure 1–2 MPa above the inlet pressure. The pressure was recorded by the pressure collection system. An oven was utilized to maintain stable experimental temperature.
The product was gathered and measured by a product acquisition system.

Fig. 1 Main experimental setup

Materials

The brine (experimental water) was composed of simulated pure water, formation water, and simulated formation water. The ion concentrations of these components are listed in Table 1. A three-layer artificial heterogeneous sandstone core was created. The core has an average permeability ranging from 55.38 × 10⁻³ to 106.00 × 10⁻³ μm² and a porosity of 24.2%. All other parameters of the core are shown in Table 2.

Table 1 Ion concentration of simulated injection water and formation water (mg/L)

| Water type | Na⁺ + K⁺ | Ca²⁺ | Mg²⁺ | Cl⁻ | HCO₃⁻ | Total salinity |
| --- | --- | --- | --- | --- | --- | --- |
| Simulation injection water | 38185553285 (ion values run together in the source) | | | | | 452 |
| Formation water | 9,423 | 40 | 430 | 9,485 | 623 | 20,001 |
| Simulated formation water | 10,993 | 52 | 563 | 17,739 | 605 | 29,952 |

Table 2 Core parameters and oil displacement efficiency of chemical flooding

| Core number | Porosity/% | Permeability/10⁻³ μm² | 0.3 PV chemical system | Reduction in water cut/% | Water drive/%OOIP | Increase in recovery/%OOIP | Total recovery/%OOIP |
| --- | --- | --- | --- | --- | --- | --- | --- |
| DG-F4 | 18.51 | 55.38 | 0.2% DWS | 4.41 | 47.76 | 4.93 | 52.69 |
| DG-F15 | 27.42 | 67.77 | 2,000 mg/L GLP-85 + 0.08% DWS | 29.58 | 49.63 | 10.48 | 60.12 |
| DG-F13 | 27.26 | 65.24 | 2,000 mg/L GLP-85 + 0.2% DWS | 19.67 | 48.04 | 18.69 | 66.72 |
| DG-F14 | 26.78 | 81.07 | 2,000 mg/L GLP-85 + 0.3% DWS | 39.18 | 54.31 | 14.71 | 69.01 |
| DG-F11 | 25.86 | 77.25 | 2,500 mg/L GLP-85 + 0.2% DWS | 58 | 49.81 | 21.02 | 70.83 |
| DG-F16 | 27.82 | 96.11 | 2,500 mg/L GLP-85 + 0.2% DWS after shearing | 4.78 | 50.21 | 3.57 | 53.78 |

When the water cut was 98%, water flooding was ceased and the SP system was injected. The increase in recovery was observed in the stage of injecting the SP system and subsequent water flooding. Total recovery includes the recovery of water flooding and the increase in recovery.

Modified polyacrylamide GLP-85 was utilized as the polymer. This polymer, whose relative molecular mass is 1.75 × 10⁷, has a high tolerance for salinity. The viscosity of the polymer was measured with a HAAKE Rotational Rheometer-6000 at 78 °C.

The main active material of surfactant DWS is an anionic sulfonate component, of which 50 wt% is active content, 16.8 wt% is unsulfonated oil, 31.2 wt% is volatile content, and 2.0 wt% is inorganic salt. The average relative molecular weight ranges from 560 to 600.

The polymer (2,000 and 2,500 mg/L) and the surfactant (0.08–0.3 wt%) were mixed with formation water to form the SP system (binary system).

Dehydrated, degassed surface crude oil and kerosene were mixed at a volume ratio of 5:1 so that the viscosity of the simulated oil matched that of the crude oil in the reservoir. The viscosity of the oil is 68 mPa·s at 78 °C. A constant reservoir temperature of 78 °C was maintained throughout the experiment. Table 3 shows the reservoir condition and the basic characteristics of the pore fluid.

Viscosity and IFT measurement

The viscosities of the SP solutions were determined at a shear rate of 7.34 s⁻¹ with a HAAKE Rotational Rheometer-6000 at 78 °C. The IFTs between the surfactant solutions and oil were measured at 78 °C with a spinning drop tensiometer (Model Texas-500). The spinning oil droplet was stretched in the chemical agent solution until the oil/water phase reached equilibrium at a rotation speed of 6,000 r/min. The images were stored at regular intervals. When the ratio of the length to the height of the oil drop in the images was more than 4, only the height of the spinning oil drop was measured to calculate the IFT. When the ratio was between 1 and 4, both the length and the height had to be measured. The IFTs at the different surfactant concentrations were obtained with the abovementioned surfactants or their mixtures with a polymer.

Core flooding experiments

1. The heterogeneous core was vacuumized and saturated with formation water. Pore volume was then measured.
2. The model was saturated with crude oil at an injection rate of 0.2 mL/min. Original oil saturation and irreducible water saturation were then calculated.
3. Formation water was injected at a rate of 1.2 mL/min until the water cut reached 98%. The produced oil and water and the pressure change at the inlet were monitored.
4. An SP system solution of 0.3 PV
was injected at a rate of 1.2 mL/min. Water flooding was performed at the same rate until the water cut reached 98%. Ultimate recovery was then calculated.

Results and discussion

Influencing factors of binary system’s performance

Polymer

In chemical flooding, polymer solutions were generally prepared with pure water, thereby reducing the influence of salinity on the polymer mother solution. The solution was then diluted with reservoir water to guarantee that the chemical system matched the high-salinity formation water. Polymer viscosity was measured at high salinity under constant temperature because salinity affects the viscosity and IFT of the binary system. The viscosity of the polymer solution must be sufficient to displace the high-viscosity crude oil. Therefore, polymer solutions of high concentration were utilized. Polymer solutions of different concentrations were prepared with formation brines of different salinities at 78 °C. The resulting viscosity changes are shown in Table 4.

As shown in Table 4, at a fixed polymer concentration the viscosity of the polymer solution decreased sharply with the increase in salinity. When the polymer concentration was 1,500 mg/L, the viscosity of the polymer solution decreased from 29 to 8 mPa·s, and the viscosity retention rate was 27.59%. However, when the polymer concentration was 3,000 mg/L, the viscosity of the polymer solution decreased from 172 to 25 mPa·s, and the viscosity retention rate was 14.53%. The viscosity retention rate decreased and the viscosity loss of the polymer solution increased with the increase in polymer concentration. With the increase in salinity, the polymer molecular chains became so compressed that they could not entangle with other polymer chains. In addition, small molecular groups were formed. The viscous force among the polymer molecules was reduced after these groups were formed, resulting in the loss of viscosity of the polymer solution. However, viscosity increased in each type of formation water with the
increase in polymer concentration. A high concentration of the polymer solution was necessary to maintain high viscosity. Thus, 2,500 mg/L was selected based on the polymer’s injectivity, the economic cost, and the viscosity required.

The polymer solution had to flow through pumps, pipes, valves, perforated holes, and so on at a high speed before it was injected. To simulate the effect of mechanical shearing on viscosity, polymer solutions of 2,500 and 3,000 mg/L were prepared with formation water and simulated formation water and sheared in a Waring device at a speed of 3,000 r/min for 20 s. The viscosities were measured before and after shearing at 78 °C. The results are shown in Table 5.

As shown in Table 5, the viscosity retention rates of the 2,500 and 3,000 mg/L polymer solutions after shearing were 70.83 and 66.67% in formation water, respectively, and 86.67 and 76% in simulated formation water, respectively. Therefore, this type of polymer solution prepared with high-salinity brine has a strong ability to resist shearing. This finding indicates that the solution can be applied in the reservoir.

Table 3 Reservoir condition and crude oil properties

| Item | Permeability/10⁻³ μm² | Porosity/% | Variation coefficient of permeability | Reservoir temperature/°C |
| --- | --- | --- | --- | --- |
| Reservoir condition | 55.38–106.00 | 24.2 | 0.6 | 78 |

| Item | Reservoir depth (m) | Formation water type | Crude oil viscosity/(mPa·s) | Crude oil density/(g/cm³) |
| --- | --- | --- | --- | --- |
| Reservoir condition | 2,100–2,300 | MgCl₂ | 68 (at 78 °C) | 0.922–0.968 (on the ground) |

Surfactant

The mixture of surfactant and polymer solution injected into the formation is affected by many factors, such as temperature, salinity, shearing, retention, adsorption, and dilution by formation brine. Therefore, surfactant DWS was utilized to create solutions of different concentrations at reservoir temperature. The IFTs were measured, and the results are shown in Fig. 2.

As shown in Fig. 2, IFT between the oil droplet and the solution decreased gradually when the surfactant concentration increased from 0.05 to 0.4%. IFT reached an ultra-low level when the
concentration was 0.3%. With the increase in surfactant concentration, the surfactant molecules were adsorbed onto the oil/water interface constantly, with the hydrophilic group in the water phase and the lipophilic group in the oil phase. When the concentration was more than 0.3%, the adsorption on the oil/water interface reached saturation, and IFT remained stable. Thus, the concentration of 0.3% was the CMC. A surfactant concentration of 0.2–0.3% should be selected because of its economic cost and loss in the pore media.

The process of dissolving the surfactant with pure water and diluting it with formation water would seriously influence the activity of the surfactant. Given that the salinity of the injected water was lower than that of the original formation water, the salinity of the areas washed by long-term waterflooding was reduced, whereas that of the unwashed areas remained high. In SP system flooding, the mobility control of the polymer solution causes the chemical system to flow toward the area unwashed with water. As a result, the chemicals are placed in contact with the original formation water and are affected by salinity. Therefore, studying the influence of salinity on IFT is essential. Figure 3 shows the influence of different salinities on IFT between the DWS of 0.2% and the crude oil droplet. With increasing salinity, the IFTs of all types of brine can reach an ultra-low level. However, the prolonged time of reaching ultra-low IFT would affect the time of chemical flooding in the marine oilfield. With constant time, the increase in salinity can increase IFT. The reason is that the surfactant molecules adsorbed on the oil/water interface desorbed constantly and entered into the oil phase with the increase in salinity, especially from several hundred to 30,000 mg/L. However, ultra-low IFTs were reached with different salinities, indicating that 0.2% surfactant can adapt to the reservoir with different salinities.

The compatibility between the polymer and surfactant in the SP system had an
interaction problem. We analyzed the interaction by studying how the addition of surfactant DWS influences the viscosity of the polymer and how the addition of a polymer solution affects the IFT of the surfactant. Table 6 shows the effect of the addition of surfactant on polymer viscosity. Table 7 shows the effect of the addition of polymer solution on the IFT of the surfactant. Tables 6 and 7 show that ultra-low IFT can be reached by 0.2% DWS surfactant with the increase in the concentration of the polymer solution. However, a longer time was required. The velocity at which the surfactant molecules migrated to the oil/water interface decreased because of the long organic chains of the polymer molecules. Therefore, more migration time was required. The SP system can reach ultra-low IFT with longer interfacial contacting time, which matches the SP system flooding. The flowing velocity of the SP system in the reservoir was much slower because the mobility of the SP system was smaller than that of a single surfactant solution. Therefore, contact time with crude oil was longer, thereby reducing oil–water IFT and enhancing oil displacement efficiency.

Table 4 Viscosity change in polymer GLP-85 with different salinities of water
Polymer concentration/(mg/L)   Viscosity/(mPa·s)
                               Simulation pure water      Formation water             Simulated formation water
                               (salinity of 452 mg/L)     (salinity of 20,000 mg/L)   (salinity of 29,952 mg/L)
4,000                          –                          –                           73
3,500                          –                          –                           50
3,000                          172                        48                          25
2,500                          79                         24                          15
2,000                          50                         17                          13
1,500                          29                         9                           8

Table 5 Viscosity change in polymer GLP-85 before and after shearing
Polymer concentration/(mg/L)   Viscosity/(mPa·s)
                               Formation water (salinity of 20,000 mg/L)   Simulated formation water (salinity of 29,952 mg/L)
                               Before shearing    After shearing           Before shearing    After shearing
2,500                          24                 17                       15                 13
3,000                          48                 32                       25                 19

However, the surfactant did not significantly affect the viscosity of the SP system; it merely affected dilution. Therefore, the SP solution has the same tackifying property as that of the polymer solution at the same concentration. It also allowed for the reduction of IFT
with prolonged contact time. Surfactant concentration should be increased and polymer concentration should be decreased to reduce IFT instantly and achieve instant emulsification, given that a certain relationship exists between emulsification and IFT reduction. However, such procedures are expensive and lead to weaker tackifying and a poorer ability of the SP system to control the mobility ratio. The surfactant and polymer can be mixed to prolong contact time with the crude oil; this would be a significant contribution to the study of injection patterns in chemical flooding after waterflooding.

Effect of viscosity on oil displacement

Table 2 shows the results of core displacement of various chemical systems. Compared with the oil displacement results of DG-F4, DG-F13, and DG-F11, the viscosity of the SP system increased gradually and the recovery of flooding was enhanced on the condition of similar oil recovery of waterflooding at a certain surfactant concentration and with increasing polymer concentration. Based on the change in pressure curve and water cut curve, the increase in the system's viscosity increased the flowing resistance of the water phase in the high-permeable layer. As a result, the pressure on the entry side increased gradually. The SP system flowed into the middle- and low-permeable layers where residual oil was abundant, and the water cut significantly decreased. When the system viscosity increased from 1 to 15 mPa·s, oil recovery increased by 13.76%. When the viscosity increased from 15 to 22.5 mPa·s, enhanced recovery increased only by 2.33%. However, the pressure gradient on the entry side increased from 11.05 to 15.23 MPa/m, indicating that viscosity contributed 73.62% to the increase in oil recovery and that the proportion declined with the increase in viscosity.
Thus, oil recovery did not increase when viscosity increased (Fig. 4).

The SP system (2,500 mg/L GLP-85 + 0.2% DWS) was sheared in the Waring device and then utilized to displace residual oil in heterogeneous cores. Figure 5 shows the dynamic change in recovery before and after shearing. The displacement results of cores DG-F11 and DG-F16 showed that viscosity changed greatly after shearing and that recovery declined sharply correspondingly. Recovery after shearing was 53.78% OOIP and only increased by 3.57% OOIP after waterflooding. The water cut was reduced only by 4.78%. However, recovery before shearing was 70.83% OOIP and increased by 21.02% OOIP after waterflooding. The water cut was reduced by 58% before shearing. The role of lowering the mobility ratio was obvious in the heterogeneous cores.

Table 6 Changes in SP system viscosity with surfactant concentration
Polymer concentration/(mg/L)   Viscosity/(mPa·s)
                               0% DWS + GLP-85   0.1% DWS + GLP-85   0.2% DWS + GLP-85   0.3% DWS + GLP-85
2,000                          17                16.5                15                  14
2,500                          24                23                  22.5                21

Table 7 IFT of the SP system changes with polymer concentration
Surfactant concentration/%   DWS                      2,000 mg/L GLP-85 + DWS   2,500 mg/L GLP-85 + DWS
                             t/min   σ/(10⁻³ mN/m)    t/min   σ/(10⁻³ mN/m)     t/min   σ/(10⁻³ mN/m)
0.2                          3       5.31             6.5     9.23              12      12.15

Influence of IFT on oil recovery

Surfactants of different concentrations were added to the polymer with a concentration of 2,000 mg/L. Core displacement experiments were conducted with the mixtures. A comparison of cores DG-F13, DG-F14, and DG-F15 shows that IFT decreased from 5.6 × 10⁻² to 1.5 × 10⁻³ mN/m when the surfactant concentration increased from 0.08 to 0.3% under constant system viscosity (Table 2). Recovery increased from 10.4 to 14.71% OOIP after waterflooding.
Errors in the oil displacement experiment of core DG-F14 might have caused the different results. However, we can still consider the contribution of IFT to the recovery of heterogeneous cores, ranging from 4 to 8% OOIP, which only accounts for approximately 30%; this percentage is less than the role of lowering the mobility ratio, which is nearly 70%. Therefore, control of mobility between oil and water in the heterogeneous cores and increase in the displacement resistance of high-permeable layers should be considered first. The increase in recovery from reducing IFT was much less than that from increasing viscosity. Figure 6 shows the relationship between IFT and recovery as well as that between IFT and the pressure gradient. With the decrease in IFT, recovery initially increased and then decreased. Therefore, mechanisms other than the decrease in IFT could have increased recovery. By changing pressure, oil–water emulsification was strengthened because of the decrease in IFT from 5.6 × 10⁻² to 9.23 × 10⁻³ mN/m. Moreover, the emulsified oil exhibited coalescence, which increased the displacement resistance, formed an oil block, and significantly increased the pressure gradient. The low IFT of 1.5 × 10⁻³ mN/m made the oil-in-water emulsion stable. Thus, the oil block was not formed easily.

Conclusions

Polymer viscosity was seriously affected by salinity. The effect of shearing on polymer viscosity and oil recovery was significant. Thus, a high concentration of polymer was utilized to maintain high viscosity. The CMC of DWS was 0.3%; this CMC value was employed to maintain low IFT. The IFTs with the brine at all salinity levels could be ultra low, indicating that salinity only had a slight effect on the activity of 0.2% DWS. The time of reaching ultra-low IFT between the oil droplet and SP system was longer than that of a single surfactant because of the polymer's existence.
The injection pattern of the surfactant and polymer mixture was used to maintain low IFT in the binary system.

In the core whose permeability contrast was 4 and average permeability ranged from 55.38 × 10⁻³ to 106.00 × 10⁻³ μm², viscosity and IFT contributed approximately 70 and 30% to the increase in oil recovery, respectively. In the heterogeneous, heavy oil reservoirs whose permeability contrast was 4 and temperature was 78 °C, increasing displacement resistance in the high-permeable layers and displacing the residual oil caused by microheterogeneity are important to improve oil recovery. When screening the properties of agents in chemical flooding, viscoelasticity is the first thing that should be considered. The second is how to reach ultra-low IFT between oil and water.

Viscosity and IFT must be optimized to maximize oil recovery in the heterogeneous cores on the condition that the injectivity and emulsification of the SP system are considered. When viscosity is high, injectivity becomes a problem. When IFT reaches an ultra-low level, oil-in-water emulsion remains stable, and the coalescence of emulsified oil droplet would not easily occur. Finally, an oil block would be formed.
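The viscosity retention rates quoted in the discussion of Tables 4 and 5 can be recomputed from the tabulated viscosities. The following is a minimal sketch, assuming the retention rate is the ratio of the viscosity under the degrading condition (higher salinity or after shearing) to the reference viscosity, expressed in percent; this definition is not stated explicitly in the text but reproduces the reported figures. The function and variable names are hypothetical and chosen only for illustration.

```python
def retention_rate(reference: float, degraded: float) -> float:
    """Viscosity retention rate in percent (assumed definition: degraded / reference * 100)."""
    if reference <= 0:
        raise ValueError("reference viscosity must be positive")
    return degraded / reference * 100.0


# Table 4, viscosities in mPa·s: (simulation pure water, simulated formation water),
# keyed by polymer concentration in mg/L.
SALINITY_CASES = {
    1500: (29.0, 8.0),
    3000: (172.0, 25.0),
}

# Table 5, viscosities in mPa·s: (before shearing, after shearing),
# keyed by (water type, polymer concentration in mg/L).
SHEAR_CASES = {
    ("formation water", 2500): (24.0, 17.0),
    ("formation water", 3000): (48.0, 32.0),
    ("simulated formation water", 2500): (15.0, 13.0),
    ("simulated formation water", 3000): (25.0, 19.0),
}


def retention_table(cases: dict) -> dict:
    """Map each case key to its retention rate, rounded to two decimals."""
    return {
        key: round(retention_rate(reference, degraded), 2)
        for key, (reference, degraded) in cases.items()
    }


if __name__ == "__main__":
    for key, rate in retention_table(SALINITY_CASES).items():
        print(f"salinity, {key} mg/L: {rate}%")
    for key, rate in retention_table(SHEAR_CASES).items():
        print(f"shearing, {key}: {rate}%")
```

Under this assumed definition the sketch gives 27.59% and 14.53% for the salinity cases and 70.83%, 66.67%, 86.67%, and 76.0% for the shearing cases, in line with the values reported in the text.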
Acknowledgments The authors would like to express their appreciation for the financial support received from National Natural Science Foundation of China (2011ZX05009-004) and China University of Petroleum, for permission to publish this paper.

Open Access This article is distributed under the terms of the Creative Commons Attribution License which permits any use, distribution, and reproduction in any medium, provided the original author(s) and the source are credited.

The evolution of emergency management and the advancement towards a profession in the United States and Florida

Jennifer Wilson a,*, Arthur Oyola-Yemaiel b

a Florida Division of Emergency Management, 2555 Shumard Oak Blvd., Tallahassee, FL 32399, USA
b Department of Sociology and Anthropology, Florida International University, Miami, FL 33199, USA

Safety Science 39 (2001) 117–131
/locate/ssci
0925-7535/01/$ - see front matter © 2001 Elsevier Science Ltd. All rights reserved.
PII: S0925-7535(01)00031-5
* Corresponding author. E-mail addresses: jennifer.wilson@dca.state.fl.us (J. Wilson), omaielson@ (A. Oyola-Yemaiel).

Abstract

The occupation of "emergency management" is the official organizational structure established by governments (federal, state, county, and city) to manage the social repercussions of natural and/or technological emergencies. This field has evolved quite extensively from its Cold War civil defense origins, and some have stated that the field is "professionalizing". We examine the process of professionalization in the United States and Florida. Our research explores how emergency management organizations are changing in order to develop the capacity to prepare for, respond to, recover from, and mitigate against disaster events more effectively. © 2001 Elsevier Science Ltd. All rights reserved.

Keywords: Emergency management; Professionalization; Accreditation; Certification; Florida

1. Introduction

Throughout time societies have dealt with natural and/or man-made disastrous events and calamities. For example, the Greco–Roman cities of Pompeii, Herculaneum and Stabiae were covered with and instantly preserved by volcanic ash up to 65 feet deep that resulted from the eruption of Mount Vesuvius in AD 79 (Maiuri, 1970; Parslow, 1995). The great fire of London in 1666 destroyed a large part of the city over 4 days, including most of the civic buildings, a cathedral, 87 parish churches and about 13,000 homes (Bell, 1971). The Indonesian island of Krakatoa was an underwater volcano that erupted and exploded in 1883 to completely destroy the island, cover 300,000 square miles with ash and pumice, and cause great tsunamis, which took 36,000 lives (Thornton, 1996). These and other events caused natural disruption as well as social and human losses. Doubtlessly, few would dispute that these disruptions are disasters.

2. Defining disaster

But what exactly is a disaster? Is disaster the disruption of natural ecosystems? Is it the destruction of property? Is it the loss of human life? Is it the loss of vital human resources? Or a combination of these things? Furthermore, is disaster a societal phenomenon or is it a local phenomenon?

Although there is no argument that catastrophic events occur to individuals and to small social groups such as the family, we classify disaster in this study as a catastrophic event that affects a large portion of a community. We concur with Fritz (1961), who defines disaster as:

an event, concentrated in time and space, in which a society, or a relatively self-sufficient subdivision of a society, undergoes severe danger and incurs such losses to its members and physical appurtenances that the social structure is disrupted and the fulfillment of all or some of the essential functions of the society is prevented.

Fritz's (1961) definition encompasses the human responses and adaptations to events that have been defined as disasters. It also illustrates how this behavior differs from that occurring in the crises of everyday life and in ordinary accidents such as traffic accidents. This distinguishes disasters from somewhat more routine public issues like crime, unemployment, and poverty.

Although a consensus on the proper academic definition of "disaster" has not been reached, it is certain that disasters affect whole communities in many significant ways. Indeed, the potential for highly destructive events is increasing as the world's population increases, as certain potentially dangerous technologies become more widespread, and as populations become more
concentrated in urban areas (Hoetmer, 1991, p. xxii).

3. Defining emergency management

In view of the social calamity that past disasters have produced and in order to reduce catastrophic events, societies in the modern era have established structures to attempt to "manage" natural and technological hazards and their impacts on life and property. The delineation of "emergency management" as a separate and specific body of knowledge is a fairly recent innovation, codifying the discipline of managing disasters and emergencies (Marshall, 1987). Emergency management is the discipline and profession of applying science, technology, planning, and management to deal with extreme events that can injure or kill large numbers of people, do extensive damage to property, and disrupt community life (Hoetmer, 1991, p. xvii). In other words, emergency management is the management of risk so that societies can live with environmental and technological hazards and deal with the disasters that they cause (Waugh, 2000).

In the United States, emergency management generally has been conceptualized as a problem and legal responsibility of government: local, state, and federal (Lindell and Perry, 1992). Indeed, elected officials have an inherent or a statutory duty to protect lives and property with co-ordinated response to disasters (Daines, 1991, p.
161). Although, historically, emergency management was considered only a function of law enforcement and fire departments (Petak, 1985), today the function of emergency management requires a permanent, full-time program to co-ordinate a variety of resources, techniques, and skills to reduce the probability and impact of extreme events and, should a disaster occur, to bring about a quick restoration of routine.

Throughout this century, each United States presidential administration has been concerned for its citizens' safety from calamity, natural or man-made. Since Franklin D. Roosevelt's first administration, permanent government agencies concerned with domestic and defense emergencies have been in place (Lindell and Perry, 1992). For example, the drought and wind erosion that caused the Dust Bowl in the 1930s were gradually halted with federal aid in which windbreaks were planted and grassland was restored from overgrazing (Hurt, 1981). The Flood Control Act of 1936 provided for a wide variety of projects, many of which were completed under the authority granted to the US Army Corps of Engineers, under which hundreds of dams, dikes, and levees were erected to reduce vulnerability to floods (Drabek, 1991, p. 7).

Thus, because the land was considered tamed and vulnerability to natural hazards seemingly reduced, by the 1950s the primary threat of disaster to the general population was considered by the federal government to be from an outside source in the manifestation of nuclear attack. Growing concerns about potential uses of nuclear weapons resulted in the creation of an independent federal agency through the enactment of the Federal Civil Defense Act of 1950 (Drabek, 1987). Throughout the 1950s and 1960s especially, and until the conclusion of the Cold War, this country's federal emergency management program focused upon civil defense.

But continued population growth in high-risk areas such as barrier islands, along fault lines and in flood plains fueled growing vulnerability to natural disasters in the United
States. With diminishing threat of nuclear attack at the closing stages of the Cold War and ever-increasing impacts of major natural hazards such as hurricanes, earthquakes, and floods, federal emergency management began to split its focus between both types of threat: war and natural disasters. By the early 1970s, specific emphasis was placed on peacetime as well as wartime emergencies (Drabek, 1987).

The Carter administration created the Federal Emergency Management Agency (FEMA) in 1979 in response to administrative and structural difficulties, as well as to concern that the scope of the functions performed as part of emergency management were too narrow and that too many resources were devoted to "after-the-fact" disaster response and too few to the issues of prevention and control (Lindell and Perry, 1992). This reorganization pulled together programs and personnel scattered throughout the federal bureaucracy and gave increasing legitimacy to comprehensive emergency management (Drabek, 1991, p. 17–18).

In line with the comprehensive emergency management concept, FEMA has emphasized an all-hazards approach since the mid-1980s. The basis of this approach acknowledges that for many disaster management needs and problems, the consequences are often the same regardless of the particular type of disaster (natural or technological), i.e. displaced people need to be fed and sheltered, damaged infrastructure needs to be repaired, etc. (Kreps, 1991, p. 37). According to FEMA, if one looks across the range of threats we face, from fire, to hurricanes, to tornados, to earthquakes, to war, one will find there are common preparedness measures that we deal with in trying to prepare for those threats. These common preparedness elements include evacuation, shelter, communications, direction and control, continuity of government, resource management, and law and order (FEMA, 1983). It is the establishment of common preparedness measures that then becomes a foundation
for all threats in addition to the unique preparedness aspects relevant to each individual threat (Thomas, 1982, p. 8 as cited in Vale, 1987, p. 84). Thus, many emergency management functions are appropriate to a range of hazards. Operationally, emergency management capabilities are now based on these functions, i.e. warning, shelter, public safety, evacuation, and so forth, that are required for all hazards (Hoetmer, 1991).

4. Emergency management levels

Since the comprehensive consolidation effort of federal emergency management programs into FEMA there has been subsequent development of state and local emergency management programs along similar lines. While some emergency responsibilities and functions are common to all three levels of government, each also has its own unique responsibilities (Table 1).

The basic role of the state emergency management office is to support local government in all aspects of disaster mitigation, preparedness, response, and recovery (Durham and Suiter, 1991, p. 111). States directly engage in emergency management of hazards with scopes of impact that may encompass multiple localities. Some threats require states to co-ordinate the emergency management actions of local jurisdictions as well as commit their own resources (Lindell and Perry, 1992, p. 8).
In addition, state government serves as the pivot in the intergovernmental system between the local and federal levels. The state emergency management office has the responsibility for administering federal funds (primarily from FEMA) to assist local government in developing and maintaining an all-hazard emergency management system. In its pivotal role, the state is in a position to determine the emergency management needs and capabilities of its political subdivisions and to channel state and federal resources to local government, including training and technical assistance, as well as operational support in an emergency (Durham and Suiter, 1991, p. 101).

Table 1 State agency emergency management role in support of local government (adapted from Drabek and Hoetmer, 1991, p. 109)
Emergency management role                            Principal state agencies
Direction and control in emergency                   State emergency management (through governor)
Warning and notification                             State emergency management
Communications                                       State emergency management; Public safety
Public information                                   Governor's office; State emergency management
Shelter and mass care                                American Red Cross; Human services
Evacuation                                           State emergency management; National Guard
Law enforcement                                      Public safety; National Guard
Damage assessment
  Public buildings                                   General services
  Electric power                                     Public service commission
  Unemployment                                       Employment security
  Housing                                            Human services
  Farms                                              Agriculture
  Water supply                                       Environmental protection
  Roads and bridges                                  Transportation
  State agency coordination for damage assessment    State emergency management
Hazardous materials
  Identification and assessment                      Environmental protection
  Emergency response                                 State emergency management
  Cleanup                                            Environmental protection
Radiological monitoring                              Environmental protection

In this capacity, the state office has a unique relationship with local government governed by two related objectives: (1) to ensure that federal dollars are used in a manner consistent with federal policy, and (2) to
provide direct support to local governments as they develop emergency management capability (Durham and Suiter, 1991, p. 107).

It has been argued that disasters, whether natural or technological, are local events. It is the local community that experiences the impact of disasters and it is incumbent on the locality to undertake some positive action (Lindell and Perry, 1992). Local government has traditionally had the first line of official public responsibility for first response to a disaster (Clary, 1985). Therefore, local governments have to develop and maintain a program of emergency management to meet their responsibilities to provide for the protection and safety of the public (McLoughlin, 1985).

County and municipal offices tend to get much less exposure and have much less availability of resources compared with the federal and state levels. Although the locality is the component closest to the disaster, it is the one with the smallest resource base and with the least access to resources through its constituency.
Financial resources and technical capacity can be provided by state and federal agencies to augment local capacities, but local officials typically are required to manage disasters until help arrives. What is done during those first hours or days may well determine the success or failure of the response and the costs of recovery (Waugh, 1994).

For state and local emergency managers, disaster response and mitigation responsibilities have increased dramatically over the past decade. There have been a number of catastrophic natural disasters since 1989 that have raised the consciousness of average citizens and public administrators. Hurricane Hugo in the Carolinas and Virgin Islands and the Loma Prieta (San Francisco Bay area) earthquake, both in 1989, Hurricane Andrew in 1992, and the Midwest floods of 1993 were catastrophes that focused public attention not only on disaster, but on the adequacy of the response to each event and on the need for better disaster mitigation and recovery (Grant, 1996).

In spite of the fact that tremendous effort has been placed on reduction of risk to natural and technological hazards, it is evident that the overall social consequences of disasters have increased over the past few decades (Blaikie et al., 1994; Peacock et al., 1997; Mileti, 1999). There is now more pressure for organizations and civil society to cope with disasters. Consequently, major shifts in the practice of emergency management have taken place in the United States. Better emergency response systems and functional co-ordination between federal, state, and local levels of government have evolved into a greater degree of expertise in the field of emergency management.

5. Professionalization of emergency management

Indeed, there has been acceptance of the notion among researchers and practitioners that the occupation of emergency management is professionalizing (e.g.
Drabek, 1987, 1989, 1994; Drabek and Hoetmer, 1991; Lindell and Perry, 1992; Sylves and Waugh, 1996). Drabek (1994) maintains that the entire nation has experienced a major redirection in disaster preparedness since the 1960s that reflects the rapid emergence of a new profession. Increasingly, local government officials have recognized the need for improved co-ordination within the emergency response system. Increasingly this function has been explicitly assigned to an agency directed by a professional with specialized training and job title. For Drabek (1994), the new era of emergency management is indicated by four major areas of change: (1) developments in preparedness theory, (2) new training opportunities, (3) technological innovations, and (4) increased linkages between research and practitioner communities.

But are these areas of change real indicators of occupational (emergency management) professionalization? First, defining exactly what is a "profession" is not simple. The word "profession" can be used in quite different ways in everyday language (Selander, 1990). Sociological theory identifies professions as occupations enjoying, or seeking to enjoy, a unique position in the labor force of industrialized countries (Collins, 1979; Rothman, 1987). In this context, professions are occupations that have been able to establish exclusive jurisdiction over certain kinds of services and to negotiate freedom from external intervention and control over the conditions and content of their work (Freidson, 1977). In other words, the core characteristics of a profession are autonomy and monopoly (Rothman, 1987). Professionalization then is the movement of an occupation towards the acquisition of autonomy and monopoly.

One substantive indicator that emergency management as a field is indeed professionalizing results from the institution of the Federal Response Plan in 1992. The importance of the Federal Response Plan with its Emergency Support Functions to
emergency management professionalization is that it implicitly lays the foundation for standardization of emergency management knowledge, skills, and abilities. A wide number of federal agencies and the American Red Cross must work together incorporating an assumption of co-ordination and communication. Thus, the Federal Response Plan recognizes that many agencies and organizations are part of a disaster response (as opposed to only first responders such as police and firefighters), highlighting the need for more trained and educated specialists in a variety of fields. According to Petak (1985, p. 6), emergency managers must have the conceptual skill to understand: (1) the total system, (2) the uses to which the products of the efforts of various professionals will be put, (3) the potential linkages between the activities of various professional specialists, and (4) the specifications for output formats and language which are compatible with the needs and understandings of others within the total system.

Professionalization occurs through sponsoring, development, and execution of training by organizations in order to certify individuals as professional emergency managers. The increasing availability of specialized degree programs in disaster management and the certification program for professionals in the field are indicative of monopolization of emergency management. A system of professional licensing or certification ensures that practitioners can perform their duties with a certain degree of expertise (Barnhart, 1997; Green, 1999). Through certification, offered by the International Association of Emergency Managers (IAEM), the field is able to ensure that future entrants have passed through an appropriate system of selection, training, and socialization, and turned out in a standardized professional mold (MacDonald, 1995).

The basis of emergency management certification is training. FEMA’s Emergency Management Institute (EMI) provides extensive training that is also available through states. Training
is an attempt to build a specialized body of knowledge leading to authority of expertise (Haskell, 1984; Beckman, 1990). Emergency managers are endeavoring to control the educational input in order to develop, define, and monopolize professional knowledge and ensure that practitioners pass through an appropriate system of training and socialization (Larson, 1978).

Moreover, a national effort of state emergency management directors [National Emergency Management Association (NEMA)], with support from FEMA, is developing a process by which states can receive professional accreditation for their emergency management programs. Accreditation is basically a rigorous, comprehensive evaluation process to assess an agency or a program against a set of standards (National Committee for Quality Assurance, 1999). Accreditation is a form of self-regulation, which implies less regulation from outsiders and thus greater autonomy for the emergency management practitioners. This process includes complying with a standard of performance where the state must meet certain requirements in the practice of emergency management such as debris management, sheltering and feeding, and damage assessment. NEMA’s (1998, p. 29) report defines state emergency management accreditation in the following way.

Emergency management accreditation is a voluntary national program of excellence dedicated to ensuring disaster-resistant communities through national standards, demonstrated emergency management capabilities and performance, partnerships, and continuous self-improvement.

Emergency managers at the federal, state, and local levels of government have not always shared the same idea of what a “professional emergency manager” or even the “emergency management profession” entails. But this has been changing and may continue to change rapidly with increased co-ordination among all levels of government through the accreditation and certification processes. If so, the emergency
management profession will have a greater degree of intergovernmental consistency.

6. The process of emergency management professionalization: the case of Florida

Florida is an important and interesting state within which to study the changing field of emergency management. First, Florida is the state geographically and historically most vulnerable to hurricanes. Between 1900 and 1997 Florida has been directly hit by a little more than one-third of the hurricanes that struck the United States (57 of 159). This is far more than that experienced by any other state (Lecomte and Gahagan, 1998; Williams and Duedall, 1997). Table 2 displays a comparison of hurricane landfall between United States coastal states during most of the twentieth century. Fig. 1 illustrates the tropical storms and hurricanes that have crossed Florida between 1992 and 1999.

Second, Florida is one of the fastest-growing states in terms of population. Throughout the twentieth century Florida’s population has grown at a rate considerably faster than the rate of growth for the nation as a whole, usually two, three, or four times as rapidly. In 1998, the state’s population reached 15 million, growing 15.9% since the 1990 census of 12.9 million, which is more rapid than the nation as a whole (8.3%). The current population of Florida is approximately 14,650,000, the 4th most populous in the nation (United States Bureau of the Census, 1998). By 2025, the United States Bureau of the Census (1995) projects Florida will surpass New York to become the third most populous state in the nation with 20.7 million people. Table 3 represents Florida’s tremendous growth rate.
Table 2. United States mainland hurricane strikes by state, 1900–1996 (National Hurricane Center, 1997)

| Area | Category 1 | Category 2 | Category 3 | Category 4 | Category 5 | All (1, 2, 3, 4, 5) | Major (3, 4, 5) |
|---|---|---|---|---|---|---|---|
| United States (Texas to Maine) | 58 | 36 | 47 | 15 | 2 | 158 | 64 |
| Texas | 12 | 9 | 9 | 6 | 0 | 36 | 15 |
| Louisiana | 8 | 5 | 8 | 3 | 1 | 25 | 12 |
| Mississippi | 1 | 1 | 5 | 0 | 1 | 8 | 6 |
| Alabama | 4 | 1 | 5 | 0 | 0 | 10 | 5 |
| Florida | 17 | 16 | 17 | 6 | 1 | 57 | 24 |
| Georgia | 1 | 4 | 0 | 0 | 0 | 5 | 0 |
| South Carolina | 6 | 4 | 2 | 2 | 0 | 14 | 4 |
| North Carolina | 10 | 4 | 10 | 1ᵃ | 0 | 25 | 11 |
| Virginia | 2 | 1 | 1ᵃ | 0 | 0 | 4 | 1ᵃ |
| Maryland | 0 | 1ᵃ | 0 | 0 | 0 | 1ᵃ | 0 |
| Delaware | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| New Jersey | 1ᵃ | 0 | 0 | 0 | 0 | 1ᵃ | 0 |
| New York | 3 | 1ᵃ | 5ᵃ | 0 | 0 | 9 | 5ᵃ |
| Connecticut | 2 | 3ᵃ | 3ᵃ | 0 | 0 | 8 | 3ᵃ |
| Rhode Island | 0 | 2ᵃ | 3ᵃ | 0 | 0 | 5ᵃ | 3ᵃ |
| Massachusetts | 2 | 2ᵃ | 2ᵃ | 0 | 0 | 6 | 2ᵃ |
| New Hampshire | 1ᵃ | 1ᵃ | 0 | 0 | 0 | 2ᵃ | 0 |
| Maine | 5ᵃ | 0 | 0 | 0 | 0 | 5ᵃ | 0 |

ᵃ Indicates all hurricanes in this group were moving faster than 30 mph. State totals will not necessarily equal United States totals, and Texas or Florida totals will not necessarily equal sum of sectional totals.

Fig. 1. Landfalling storms in Florida, 1992–1999 (provided by Florida Department of Community Affairs, 1999).

Moreover, Florida’s coastal population (Fig. 2) has grown from just under 7.7 million in 1980 to over 10.5 million by the mid-1990s, an increase of 37% (Lecomte and Gahagan, 1998). According to Florida Department of Community Affairs (1999), more than 9 million Floridians live within 10 miles of the coast in 1998, more than 62% of the state’s total population. Due to such high coastal population there are more people at risk in Florida from hurricanes than in any other state in the nation.

With people comes property; Florida also has the most coastal property exposed to wind storms. From 1980 to the mid-1990s, the value of insured residential property increased by 135% from $178 billion to $418 billion and insured commercial property increased by 192% from $155 billion to $453 billion (IIPLR and IRC, 1995). Table 4 portrays the increase in the value of insured residential and commercial property in Florida. According to the Insurance Services Office (2000), Florida had the most insured losses in the country in the period from 1990 to 1999 with $19.3 billion. California was second with $17.5 billion and Texas
was third with $6.6 billion in insured losses.

Table 3. Population growth in Florida (in millions)

Fig. 2. Florida’s coastal population (provided by Florida Department of Community Affairs, 1999).

Throughout the 1980s and early 1990s, several legislative attempts to overhaul the emergency management system in Florida were made with little success. But Hurricane Andrew demonstrated decidedly that the state lacked sufficient expertise and resources to co-ordinate an operation to handle a major disaster (FEMA, 1993). Florida’s response was uncoordinated, confused, and often inadequate. In response, Governor Lawton Chiles issued an executive order (92–242) establishing the Governor’s Disaster Planning and Response Review Committee to evaluate current state and local statutes, plans and programs for natural and man-made disasters, and to make recommendations to the Governor and the State Legislature before the 1993 legislative session.

The 1993 Florida legislature acted on most of the committee’s recommendations, including its call for the creation of the Emergency Management Preparedness and Assistance Trust Fund (EMPATF). House Bill 911 created the EMPATF through a $2 surcharge levied on all private insurance policies, and a $4 surcharge on commercial policies (Koutnik, 1996).

Florida Governor Chiles set up the Division of Emergency Management under the Department of Community Affairs, and appointed Joseph Myers to lead the emergency program, as per FEMA guidelines (Kory, 1998). The specific powers and authorities of the emergency management division are set forth in Chapter 252, part 1 of the Florida Statutes, entitled the “State Emergency Management Act” (Mittler, 1997). This act amended the Florida Statutes in 1995 to provide broad powers for the governor to order evacuations and to demand mutual aid agreements between counties, municipalities, and the state. Each local government must also prepare an emergency operations plan. Compliance, co-operation, and
co-ordination are mandated by the state (Kory, 1998).

Thus, the state of Florida is committed to acquiring the needed proficiency to reduce disaster impacts, and therefore Florida Division of Emergency Management (DEM) is seeking to become accredited through the NEMA accreditation process. But, in order for the state to meet this standard of performance each county must also match this standard since the state only assists counties in responding to, recovering from, preparing for and mitigating against local disasters within the state. The DEM is currently conducting focus groups with local emergency managers in order to determine the tasks that local emergency management programs should be able to perform. The determination of these tasks will then be used by the state as part of its standards of performance.

Table 4. Increase in value of insured residential and commercial property in Florida (in millions of dollars)

Therefore, the state’s attempt to