Detection of Nine M8.0-L0.5 Binaries The Very Low Mass Binary Population and its Implicatio


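Commonly Used Software for Multi-Object Tracking with Computer Vision

Multi-object tracking is an active research topic in computer vision. Its goal is to identify and track multiple targets in images or video accurately and in real time.

In complex scenes, multi-object tracking is applied in areas such as video surveillance, intelligent transportation, autonomous driving, and human-computer interaction.

Several widely used software packages can help implement efficient multi-object tracking.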

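1. OpenCV (Open Source Computer Vision Library)

OpenCV is a widely used computer vision library that provides many functions and tools useful for multi-object tracking.

It supports several programming languages, including C++, Python, and Java, and it is cross-platform, running on Windows, Linux, and macOS.

OpenCV offers a range of algorithms and techniques for multi-object tracking.

Among them, color-space-based background subtraction, Kalman filters, and correlation filters are widely used to track targets.

In addition, OpenCV ships ready-made detectors and trackers, such as Haar cascade classifiers, HOG (Histogram of Oriented Gradients) detectors, and the CSRT (Channel and Spatial Reliability Tracking) tracker.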
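To make the Kalman filter mentioned above more concrete, here is a minimal sketch of a constant-velocity filter for a single coordinate of a tracked target. It is written in plain Python and does not call OpenCV; the class name `Kalman1D`, the noise parameters `q` and `r`, and the diagonal process-noise model are illustrative assumptions, not something taken from the text or from the library.

```python
class Kalman1D:
    """Constant-velocity Kalman filter for one coordinate (hypothetical helper)."""

    def __init__(self, pos, vel=0.0, p=1.0, q=1e-2, r=1.0):
        self.x = [pos, vel]            # state: position and velocity
        self.P = [[p, 0.0], [0.0, p]]  # state covariance
        self.q = q                     # assumed process noise, added to each diagonal term
        self.r = r                     # assumed measurement noise variance

    def predict(self, dt=1.0):
        # x <- F x with F = [[1, dt], [0, 1]]
        self.x = [self.x[0] + dt * self.x[1], self.x[1]]
        # P <- F P F^T + q * I
        (a, b), (c, d) = self.P
        self.P = [
            [a + dt * (b + c) + dt * dt * d + self.q, b + dt * d],
            [c + dt * d, d + self.q],
        ]
        return self.x[0]

    def update(self, z):
        # Only the position is measured: H = [1, 0]
        y = z - self.x[0]          # innovation
        s = self.P[0][0] + self.r  # innovation variance
        k0 = self.P[0][0] / s      # Kalman gain for position
        k1 = self.P[1][0] / s      # Kalman gain for velocity
        self.x = [self.x[0] + k0 * y, self.x[1] + k1 * y]
        # P <- (I - K H) P
        (a, b), (c, d) = self.P
        self.P = [
            [(1 - k0) * a, (1 - k0) * b],
            [c - k1 * a, d - k1 * b],
        ]
        return self.x[0]
```

In a tracker, one such filter per coordinate (or a joint filter over the box centre) would call `predict()` once per frame and `update()` whenever a detection is matched to the track.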

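2. TensorFlow Object Detection API

The TensorFlow Object Detection API is an open-source project released by Google that aims to simplify the development of object detection and tracking applications.

The API provides a set of pre-trained deep learning models, such as Faster R-CNN and SSD (Single Shot MultiBox Detector), which can be used for object detection and as the detection stage of a multi-object tracker.

The TensorFlow Object Detection API supports a choice of architectures and models.

Users can pick the model that fits their needs and then tune and optimize it.

The API also provides tools for data preprocessing, model training, and inference, which make multi-object tracking more convenient and efficient to implement.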
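A detector only produces boxes for each frame; a tracker still has to decide which box belongs to which existing track. The following minimal sketch shows one common way to do that, greedy matching by intersection over union (IoU). It is plain Python and independent of both libraries above; the function names, the `(x1, y1, x2, y2)` box layout, and the `min_iou` threshold are assumptions made for illustration.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def associate(tracks, detections, min_iou=0.3):
    """Greedily match track boxes to detection boxes by descending IoU.

    tracks: dict mapping track id -> box; detections: list of boxes.
    Returns (matches, unmatched), where matches maps track id -> detection
    index and unmatched lists detection indices that started no match.
    """
    pairs = sorted(
        ((iou(box, det), tid, j)
         for tid, box in tracks.items()
         for j, det in enumerate(detections)),
        key=lambda p: p[0],
        reverse=True,
    )
    matches, used = {}, set()
    for score, tid, j in pairs:
        if score < min_iou:
            break  # remaining pairs overlap even less
        if tid in matches or j in used:
            continue
        matches[tid] = j
        used.add(j)
    unmatched = [j for j in range(len(detections)) if j not in used]
    return matches, unmatched
```

Unmatched detections would typically start new tracks. Greedy matching is chosen here only for brevity; an optimal assignment method could be substituted without changing the interface.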

PETS 2009 Benchmark Data

Keywords: tracking, event detection, crowd, person count, crowd density, PETS 2009

Data format: TEXT

Overview

The datasets are multisensor sequences containing different crowd activities. Please e-mail ********************* if you require assistance obtaining these datasets for the workshop.

Aims and Objectives

The aim of this workshop is to employ existing or new systems for the detection of one or more of 3 types of crowd surveillance characteristics/events within a real-world environment. The scenarios are filmed from multiple cameras and involve up to approximately forty actors. More specifically, the challenge includes estimation of crowd person count and density, tracking of individual(s) within a crowd, and detection of flow and crowd events.

News

- 06 March 2009: The PETS2009 crowd dataset is released.
- 01 April 2009: The PETS2009 submission details are released. Please see Author Instructions.

Preliminaries

Please read the following information carefully before processing the dataset, as the details are essential to the understanding of when notification of events should be generated by your system. Please check regularly for updates.
Summary of the Dataset Structure

The dataset is organised as follows:

- Calibration Data
- S0: Training Data (contains sets Background, City center, Regular flow)
- S1: Person Count and Density Estimation (contains sets L1, L2, L3)
- S2: People Tracking (contains sets L1, L2, L3)
- S3: Flow Analysis and Event Recognition (contains sets Event Recognition and Multiple Flow)

Each subset contains several sequences and each sequence contains different views (4 up to 8). The original page illustrates this structure with a diagram.

Calibration Data

The calibration data (one file for each of the 8 cameras) is linked from the original page. The ground plane is assumed to be the Z=0 plane. C++ code, also linked from the original page, is provided to allow you to load and use the calibration parameters in your program (courtesy of project ETISEO). The provided calibration parameters were obtained using the freely available Tsai Camera Calibration Software by Reg Willson. All spatial measurements are in metres.

The cameras used to film the datasets are:

| View | Model | Resolution | Frame rate | Comments |
|------|-------|------------|------------|----------|
| 001 | Axis 223M | 768x576 | ~7 | Progressive scan |
| 002 | Axis 223M | 768x576 | ~7 | Progressive scan |
| 003 | PTZ Axis 233D | 768x576 | ~7 | Progressive scan |
| 004 | PTZ Axis 233D | 768x576 | ~7 | Progressive scan |
| 005 | Sony DCR-PC1000E 3xCMOS | 720x576 | ~7 | ffmpeg de-interlaced |
| 006 | Sony DCR-PC1000E 3xCMOS | 720x576 | ~7 | ffmpeg de-interlaced |
| 007 | Canon MV-1 1xCCD w | 720x576 | ~7 | Progressive scan |
| 008 | Canon MV-1 1xCCD w | 720x576 | ~7 | Progressive scan |

Frames are compressed as JPEG image sequences. All sequences (except one) contain Views 001-004. A few sequences also contain Views 005-008.
Please see below for more information.

Orientation

The cameras are installed to cover an approximate area of 100 m x 30 m; the original page shows their locations on a map (the scale of the map is 20 m).

The GPS coordinates of the centre of the recording are 51°26'18.5"N 000°56'40.00"W.

The original page also shows one sample frame for each of views 001 to 008.

Synchronisation

Please note that while effort has been made to make sure the frames from different views are synchronised, there might be slight delays and frame drops in some cases. In particular View 4 suffers from frame rate instability and we suggest it be used as a supplementary source of information. Please let us know if you encounter any problems or inconsistencies.

Dataset S0: Training Data

This dataset contains three sets of training sequences from different views, provided to help researchers obtain the following models from multiple views:

- Background: background model for all the cameras. Note that the scene can contain people or moving objects. Furthermore, the frames in this set are not necessarily synchronised. For Views 001-004, different sequences corresponding to the following time stamps are provided: 13-05, 13-06, 13-07, 13-19, 13-32, 13-38, 13-49. For Views 005-008 (DV cameras), 144 non-synchronised frames are provided.
- City center: includes random walking crowd flow. Sequence 9 with timestamp 12-34 using Views 001-008 and Sequence 10 with timestamp 14-55 using Views 001-004.
- Regular flow: includes regular walking pace crowd flow. Sequences 11-15 with timestamps 13-57, 13-59, 14-03, 14-06, 14-29, for Views 001-004.

Download sizes: Background set 1.8 GB, City center 1.8 GB, Regular flow 1.3 GB.

Dataset S1: Person Count and Density Estimation

Three regions, R0, R1 and R2, are defined in View 001 only (shown in the example image).
The coordinates of the top-left and bottom-right corners (in pixels) are given in the following table.

| Region | Top-left | Bottom-right |
|--------|----------|--------------|
| R0 | (10,10) | (750,550) |
| R1 | (290,160) | (710,430) |
| R2 | (30,130) | (230,290) |

Definition of crowd density (%): crowd density is based on a maximum occupancy (100%) of 40 people in 10 square metres on the ground. One person is assumed to occupy 0.25 square metres on the ground.

Scenario: S1.L1 walking

- Elements: medium density crowd, overcast
- Sequences: Sequence 1 with timestamp 13-57; Sequence 2 with timestamp 13-59. Sequences 1-2 use Views 001-004.
- Subjective Difficulty: Level 1
- Task: The task is to count the number of people in R0 for each frame of the sequence in View 1 only. As a secondary challenge the crowd density in regions R1 and R2 can also be reported (mapped to ground plane occupancy, possibly using multiple views).
- Download: 502 MB

Scenario: S1.L2 walking

- Elements: high density crowd, overcast
- Sequences: Sequence 1 with timestamp 14-06; Sequence 2 with timestamp 14-31. Sequences 1-2 use Views 001-004.
- Subjective Difficulty: Level 2
- Task: This scenario contains a densely grouped crowd who walk from one point to another. There are two sequences, corresponding to timestamps 14-06 and 14-31. The task related to timestamp 14-06 is to estimate the crowd density in Regions R1 and R2 at each frame of the sequence. The designated task for the sequence Time_14-31 is to determine both the total number of people entering through the brown line from the left side AND the total number of people exiting from the purple and red lines, shown in the accompanying figure, throughout the whole sequence. The coordinates of the entry and exit lines are given below for reference.
- Download: 367 MB

| Line | Start | End |
|------|-------|-----|
| Entry: brown | (730,250) | (730,530) |
| Exit1: red | (230,170) | (230,400) |
| Exit2: purple | (500,210) | (720,210) |

Scenario: S1.L3 running

- Elements: medium density crowd, bright sunshine and shadows
- Sequences: Sequence 1 with timestamp 14-17; Sequence 2 with timestamp 14-33.
Sequences 1-2 use Views 001-004.
- Subjective Difficulty: Level 3
- Task: This scenario contains a crowd of people who, on reaching a point in the scene, begin to run. The task is to measure the crowd density in Region R1 at each frame of the sequence.
- Download: 476 MB

Dataset S2: People Tracking

Scenario: S2.L1 walking

- Elements: sparse crowd
- Sequences: Sequence 1 with timestamp 12-34 using Views 001-008 except View_002 for cross validation (see below).
- Subjective Difficulty: L1
- Task: Track all of the individuals in the sequence. If you undertake monocular tracking only, report the 2D bounding box location for each individual in the view used; if two or more views are processed, report the 2D bounding box location for each individual as back projected into View_002 using the camera calibration parameters provided (this equates to a leave-one-out validation). Note the origin (0,0) of the image is assumed top-left. Validation will be performed using manually labelled ground truth.
- Download: 997 MB

Scenario: S2.L2 walking

- Elements: medium density crowd
- Sequences: Sequence 1 with timestamp 14-55 using Views 001-004.
- Subjective Difficulty: L2
- Task: Track the individuals marked A and B (see figure) in the sequence and provide 2D bounding box locations of the individuals in View_002, which will be validated using manually labelled ground truth. Note the origin (0,0) of the image is assumed top-left. Note that individual B exits the field of view and returns toward the end of the sequence.
- Download: 442 MB

Scenario: S2.L3 walking

- Elements: dense crowd
- Sequences: Sequence 1 with timestamp 14-41 using Views 001-004.
- Subjective Difficulty: L3
- Task: Track the individuals marked A and B in the sequence and provide 2D bounding box information in View_002 for each individual, which will be validated using manually labelled ground truth.
- Download: 259 MB

Dataset S3: Flow Analysis and Event Recognition

Scenario: S3.Multiple Flows

- Elements: dense crowd, running
- Sequences: Sequences 1-5 with timestamps 12-43 (using Views 1, 2, 5, 6, 7, 8), 14-13, 14-37, 14-46 and 14-52. Sequences 2-5 use Views 001-004.
- Subjective Difficulty: L2
- Task: Detect and estimate the multiple flows in the provided sequences, mapped onto the ground plane as an occupancy map flow. Further details of the exact task requirements are contained under Author Instructions. The results will be compared with ground truth optical flow of the major flows in the sequences on the ground plane.
- Download: 760 MB

Scenario: S3.Event Recognition

- Elements: dense crowd
- Sequences: Sequences 1-4 with timestamps 14-16, 14-27, 14-31 and 14-33. Sequences 1-4 use Views 001-004.
- Subjective Difficulty: L3
- Task: This dataset contains different crowd activities and the task is to provide a probabilistic estimation of each of the following events: walking, running, evacuation (rapid dispersion), local dispersion, crowd formation and splitting at different time instances. Furthermore, we are interested in systems that can identify the start and end of the events as well as transitions between them.
- Download: 1.2 GB

Additional Information

The scenarios can also be downloaded from ftp:///pub/PETS2009/ (use anonymous login). Warning: ftp:// is not listing files correctly on some ftp clients. If you experience problems you can connect to the http server at /PETS2009/.

Legal note: The video sequences are copyright University of Reading and permission is hereby granted for free download for the purposes of the PETS 2009 workshop and academic and industrial research. Where the data is disseminated (e.g. publications, presentations) the source should be acknowledged.
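The crowd-density definition given for Dataset S1 (100% corresponds to 40 people in 10 square metres, with 0.25 square metres per person) can be turned into a small calculation. The sketch below is an illustration only: the function name, the cap at 100%, and the idea that the caller supplies the region's ground-plane area in square metres (for example, obtained from the camera calibration) are assumptions, since the dataset text gives the regions in pixels only.

```python
PERSON_AREA_M2 = 0.25  # ground area one person is assumed to occupy (from the dataset text)


def crowd_density_percent(person_count, region_area_m2):
    """Crowd density as a percentage of maximum occupancy.

    region_area_m2 is the ground-plane area of the region in square metres;
    how it is obtained from the pixel coordinates is left to the caller.
    The result is capped at 100, which is an assumption of this sketch.
    """
    if region_area_m2 <= 0:
        raise ValueError("region area must be positive")
    occupied_m2 = person_count * PERSON_AREA_M2
    return min(100.0, 100.0 * occupied_m2 / region_area_m2)
```

For instance, 40 people in a 10 square metre region occupy 40 x 0.25 = 10 square metres, which is the stated maximum occupancy.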

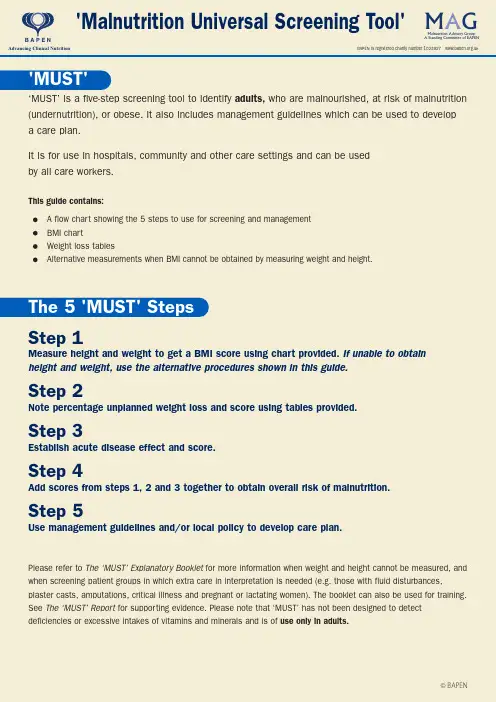

‘MUST’ is a five-step screening tool to identify adults who are malnourished, at risk of malnutrition (undernutrition), or obese. It also includes management guidelines which can be used to develop a care plan. It is for use in hospitals, community and other care settings and can be used by all care workers.

This guide contains:

- A flow chart showing the 5 steps to use for screening and management
- BMI chart
- Weight loss tables
- Alternative measurements when BMI cannot be obtained by measuring weight and height

Please refer to The ‘MUST’ Explanatory Booklet for more information when weight and height cannot be measured, and when screening patient groups in which extra care in interpretation is needed (e.g. those with fluid disturbances, plaster casts, amputations, critical illness and pregnant or lactating women). The booklet can also be used for training. See The ‘MUST’ Report for supporting evidence. Please note that ‘MUST’ has not been designed to detect deficiencies or excessive intakes of vitamins and minerals and is of use only in adults.

The 5 steps

- Step 1: Measure height and weight to get a BMI score using chart provided. If unable to obtain height and weight, use the alternative procedures shown in this guide.
- Step 2: Note percentage unplanned weight loss and score using tables provided.
- Step 3: Establish acute disease effect and score.
- Step 4: Add scores from steps 1, 2 and 3 together to obtain overall risk of malnutrition.
- Step 5: Use management guidelines and/or local policy to develop care plan.

‘Malnutrition Universal Screening Tool’. Malnutrition Advisory Group (MAG), a Standing Committee of BAPEN. BAPEN: Advancing Clinical Nutrition.

Step 1: BMI score (and BMI)

[Chart: BMI score and BMI by height (m; feet and inches) and weight (kg; stones and pounds).]

Note: The black lines denote the exact cut-off points (30, 20 and 18.5 kg/m²); figures on the chart have been rounded to the nearest whole number.

Flow chart

Step 1: BMI score

| BMI (kg/m²) | Score |
|-------------|-------|
| >20 (>30 Obese) | 0 |
| 18.5-20 | 1 |
| <18.5 | 2 |

Step 2: Weight loss score (unplanned weight loss in past 3-6 months)

| Weight loss (%) | Score |
|-----------------|-------|
| <5 | 0 |
| 5-10 | 1 |
| >10 | 2 |

Step 3: Acute disease effect score

If patient is acutely ill and there has been or is likely to be no nutritional intake for >5 days: Score 2.

If unable to obtain height and weight, see reverse for alternative measurements and use of subjective criteria. Acute disease effect is unlikely to apply outside hospital. See ‘MUST’ Explanatory Booklet for further information.

Step 4: Overall risk of malnutrition

Add scores together to calculate overall risk of malnutrition:

- Score 0: Low Risk
- Score 1: Medium Risk
- Score 2 or more: High Risk

Step 5: Management guidelines

0, Low Risk: Routine clinical care

- Repeat screening: Hospital, weekly; Care Homes, monthly; Community, annually for special groups, e.g. those >75 yrs

1, Medium Risk: Observe

- Document dietary intake for 3 days
- If adequate: little concern, and repeat screening (Hospital, weekly; Care Home, at least monthly; Community, at least every 2-3 months)
- If inadequate: clinical concern; follow local policy, set goals, improve and increase overall nutritional intake, monitor and review care plan regularly

2 or more, High Risk: Treat*

- Refer to dietitian, Nutritional Support Team or implement local policy
- Set goals, improve and increase overall nutritional intake
- Monitor and review care plan: Hospital, weekly; Care Home, monthly; Community, monthly

*Unless detrimental or no benefit is expected from nutritional support, e.g. imminent death.

All risk categories:

- Treat underlying condition and provide help and advice on food choices, eating and drinking when necessary.
- Record malnutrition risk category.
- Record need for special diets and follow local policy.

Obesity: Record presence of obesity. For those with underlying conditions, these are generally controlled before the treatment of obesity.

Re-assess subjects identified at risk as they move through care settings. See The ‘MUST’ Explanatory Booklet for further details and The ‘MUST’ Report for supporting evidence.

Alternative measurements and considerations

If height cannot be measured

- Use recently documented or self-reported height (if reliable and realistic).
- If the subject does not know or is unable to report their height, use one of the alternative measurements to estimate height (ulna, knee height or demispan).

If recent weight loss cannot be calculated, use self-reported weight loss (if reliable and realistic).

Subjective criteria

If height, weight or BMI cannot be obtained, the following criteria which relate to them can assist your professional judgement of the subject’s nutritional risk category. Please note, these criteria should be used collectively, not separately, as alternatives to steps 1 and 2 of ‘MUST’ and are not designed to assign a score. Mid upper arm circumference (MUAC) may be used to estimate BMI category in order to support your overall impression of the subject’s nutritional risk.

1. BMI: Clinical impression (thin, acceptable weight, overweight). Obvious wasting (very thin) and obesity (very overweight) can also be noted.
2. Unplanned weight loss: Clothes and/or jewellery have become loose fitting (weight loss). History of decreased food intake, reduced appetite or swallowing problems over 3-6 months and underlying disease or psycho-social/physical disabilities likely to cause weight loss.
3. Acute disease effect: Acutely ill and no nutritional intake or likelihood of no intake for more than 5 days.

Further details on taking alternative measurements, special circumstances and subjective criteria can be found in The ‘MUST’ Explanatory Booklet. A copy can be downloaded at or purchased from the BAPEN office. The full evidence-base for ‘MUST’ is contained in The ‘MUST’ Report and is also available for purchase from the BAPEN office.

BAPEN Office, Secure Hold Business Centre, Studley Road, Redditch, Worcs, B98 7LG. Tel: 01527 457850. Fax: 01527 458718. bapen@

BAPEN is registered charity number

© BAPEN 2003. ISBN 1899467904. Price £2.00.

All rights reserved. This document may be photocopied for dissemination and training purposes as long as the source is credited and recognised. Copy may be reproduced for the purposes of publicity and promotion. Written permission must be sought from BAPEN if reproduction or adaptation is required. If used for commercial gain a licence fee may be required.

© BAPEN. First published May 2004 by MAG, the Malnutrition Advisory Group, a Standing Committee of BAPEN. Reviewed and reprinted with minor changes March 2008 and September 2010.

‘MUST’ is supported by the British Dietetic Association, the Royal College of Nursing and the Registered Nursing Home Association.

Alternative measurements: instructions and tables

If height cannot be obtained, use length of forearm (ulna) to calculate height using the table below. (See The ‘MUST’ Explanatory Booklet for details of other alternative measurements (knee height and demispan) that can also be used to estimate height.)

Measure between the point of the elbow (olecranon process) and the midpoint of the prominent bone of the wrist (styloid process) (left side if possible).

| Ulna length (cm) | Height (m), men <65 years | Height (m), men >65 years | Height (m), women <65 years | Height (m), women >65 years |
|------|------|------|------|------|
| 32.0 | 1.94 | 1.87 | 1.84 | 1.84 |
| 31.5 | 1.93 | 1.86 | 1.83 | 1.83 |
| 31.0 | 1.91 | 1.84 | 1.81 | 1.81 |
| 30.5 | 1.89 | 1.82 | 1.80 | 1.79 |
| 30.0 | 1.87 | 1.81 | 1.79 | 1.78 |
| 29.5 | 1.85 | 1.79 | 1.77 | 1.76 |
| 29.0 | 1.84 | 1.78 | 1.76 | 1.75 |
| 28.5 | 1.82 | 1.76 | 1.75 | 1.73 |
| 28.0 | 1.80 | 1.75 | 1.73 | 1.71 |
| 27.5 | 1.78 | 1.73 | 1.72 | 1.70 |
| 27.0 | 1.76 | 1.71 | 1.70 | 1.68 |
| 26.5 | 1.75 | 1.70 | 1.69 | 1.66 |
| 26.0 | 1.73 | 1.68 | 1.68 | 1.65 |
| 25.5 | 1.71 | 1.67 | 1.66 | 1.63 |
| 25.0 | 1.69 | 1.65 | 1.65 | 1.61 |
| 24.5 | 1.67 | 1.63 | 1.63 | 1.60 |
| 24.0 | 1.66 | 1.62 | 1.62 | 1.58 |
| 23.5 | 1.64 | 1.60 | 1.61 | 1.56 |
| 23.0 | 1.62 | 1.59 | 1.59 | 1.55 |
| 22.5 | 1.60 | 1.57 | 1.58 | 1.53 |
| 22.0 | 1.58 | 1.56 | 1.56 | 1.52 |
| 21.5 | 1.57 | 1.54 | 1.55 | 1.50 |
| 21.0 | 1.55 | 1.52 | 1.54 | 1.48 |
| 20.5 | 1.53 | 1.51 | 1.52 | 1.47 |
| 20.0 | 1.51 | 1.49 | 1.51 | 1.45 |
| 19.5 | 1.49 | 1.48 | 1.50 | 1.44 |
| 19.0 | 1.48 | 1.46 | 1.48 | 1.42 |
| 18.5 | 1.46 | 1.45 | 1.47 | 1.40 |

Estimating BMI category from mid upper arm circumference (MUAC)

The subject’s left arm should be bent at the elbow at a 90 degree angle, with the upper arm held parallel to the side of the body. Measure the distance between the bony protrusion on the shoulder (acromion) and the point of the elbow (olecranon process). Mark the mid-point.

Ask the subject to let the arm hang loose and measure around the upper arm at the mid-point, making sure that the tape measure is snug but not tight.

- If MUAC is <23.5 cm, BMI is likely to be <20 kg/m².
- If MUAC is >32.0 cm, BMI is likely to be >30 kg/m².

The use of MUAC provides a general indication of BMI and is not designed to generate an actual score for use with ‘MUST’. For further information on use of MUAC please refer to The ‘MUST’ Explanatory Booklet.
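The scoring arithmetic of steps 1 to 4 can be sketched in a few lines. This is only an illustration of the cut-offs quoted in the flow chart (BMI >20, 18.5-20, <18.5; weight loss <5%, 5-10%, >10%; acute disease effect scores 2); the function names and the way the inputs are passed are assumptions, and the sketch leaves out the alternative measurements and the management guidelines.

```python
def bmi_score(bmi):
    """Step 1: score from BMI in kg/m^2 (>20 -> 0, 18.5-20 -> 1, <18.5 -> 2)."""
    if bmi > 20:
        return 0
    if bmi >= 18.5:
        return 1
    return 2


def weight_loss_score(percent):
    """Step 2: score from unplanned weight loss in the past 3-6 months, in percent."""
    if percent < 5:
        return 0
    if percent <= 10:
        return 1
    return 2


def must_risk(weight_kg, height_m, weight_loss_percent, acutely_ill_no_intake):
    """Steps 1-4: return (total score, risk category)."""
    bmi = weight_kg / height_m ** 2
    total = bmi_score(bmi) + weight_loss_score(weight_loss_percent)
    if acutely_ill_no_intake:  # Step 3: acute disease effect
        total += 2
    if total == 0:
        category = "low"
    elif total == 1:
        category = "medium"
    else:
        category = "high"
    return total, category
```

For example, `must_risk(60, 1.75, 6, False)` gives a BMI of about 19.6 (score 1) and a weight-loss score of 1, so the total of 2 falls in the high-risk category.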

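Correct Use of the Limit of Detection

The limit of detection (LOD) is an important concept in environmental science, biochemistry, physics, and many other fields, and it is used especially widely in analytical chemistry and biological research.

This article describes how to use the limit of detection correctly, to help readers understand and apply the concept.

I. Definition

The limit of detection is the smallest amount or value of the measured quantity whose signal can be reliably detected and distinguished from the background.

In practice, the LOD is used to assess the sensitivity of an experimental method, that is, the smallest change the method can distinguish.

Its value directly reflects how sensitive and reliable the method or detection technique is at low levels.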

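II. How to Use It Correctly

1. Define the purpose of the experiment: before working with a limit of detection, state clearly what substance is to be detected and what level of precision is required.

2. Choose a suitable detection method: select a method that fits the purpose, such as spectroscopic, chromatographic, or mass spectrometric analysis. Different detection methods have different limits of detection.

3. Prepare the sample: prepare or extract the sample as the detection method requires. Pay attention to the homogeneity, stability, and representativeness of the sample.

4. Set up instruments and reagents: provide the instruments and reagents the method calls for. Make sure the instruments work properly and the reagents are prepared accurately.

5. Carry out the experiment: follow the detection method, including sample injection, reaction, and detection. Make sure every operation is performed correctly and according to procedure.

6. Process and analyze the data: calculate the limit of detection from the experimental data. Statistical approaches are normally used, such as the signal-to-noise ratio (S/N) or the standard deviation (SD) of the signal.

7. Verify and confirm: verify the limit of detection at regular intervals during the work to make sure it remains accurate and reliable.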
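Step 6 above names the standard-deviation route without giving a formula. One widely used convention, assumed here rather than taken from the text, is LOD = 3.3·σ/S and LOQ = 10·σ/S, where σ is the standard deviation of repeated blank measurements and S is the slope of the calibration line. The sketch below applies that convention; the function names and the example numbers are hypothetical.

```python
from statistics import stdev


def calibration_slope(concentrations, responses):
    """Least-squares slope of instrument response versus concentration."""
    n = len(concentrations)
    mean_x = sum(concentrations) / n
    mean_y = sum(responses) / n
    sxx = sum((x - mean_x) ** 2 for x in concentrations)
    sxy = sum((x - mean_x) * (y - mean_y)
              for x, y in zip(concentrations, responses))
    return sxy / sxx


def detection_limits(blank_responses, slope):
    """Return (LOD, LOQ) using the assumed 3.3*sigma/S and 10*sigma/S convention."""
    sigma = stdev(blank_responses)  # sample standard deviation of the blanks
    return 3.3 * sigma / slope, 10.0 * sigma / slope


# Hypothetical calibration data: response = 2 * concentration + 1
slope = calibration_slope([0, 1, 2, 3], [1, 3, 5, 7])
lod, loq = detection_limits([1.0, 1.2, 0.8, 1.0], slope)
```

The results are in the same concentration units as the calibration standards. The signal-to-noise route mentioned in step 6 would instead look for the concentration at which the signal is a fixed multiple of the baseline noise.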

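III. Points to Note

1. The limit of detection is not the only figure of merit: other important indicators, such as the limit of quantitation (LOQ), accuracy, and precision, also deserve attention during the experiment.

2. Influence of experimental conditions: conditions such as temperature, humidity, pressure, and time affect the limit of detection, so they should be kept consistent throughout the experiment.

3. Repeatability and stability: these are important indicators for judging experimental results, so they should be monitored and the data recorded during the experiment.

4. Sources of error: when calculating the limit of detection, take the various sources of error into account, such as instrument error, reagent error, and operator error, to ensure an accurate result.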

In Proceedings of the 3rd International Conference on Embedded Software, Philadelphia, PA, pages 306–322, October 13–15, 2003. © Springer-Verlag.

Eliminating stack overflow by abstract interpretation

John Regehr, Alastair Reid, Kirk Webb
School of Computing, University of Utah

Abstract. An important correctness criterion for software running on embedded microcontrollers is stack safety: a guarantee that the call stack does not overflow. We address two aspects of the problem of creating stack-safe embedded software that also makes efficient use of memory: statically bounding worst-case stack depth, and automatically reducing stack memory requirements. Our first contribution is a method for statically guaranteeing stack safety by performing whole-program analysis, using an approach based on context-sensitive abstract interpretation of machine code. Abstract interpretation permits our analysis to accurately model when interrupts are enabled and disabled, which is essential for accurately bounding the stack depth of typical embedded systems. We have implemented a stack analysis tool that targets Atmel AVR microcontrollers, and tested it on embedded applications compiled from up to 30,000 lines of C. We experimentally validate the accuracy of the tool, which runs in a few seconds on the largest programs that we tested. The second contribution of this paper is a novel framework for automatically reducing stack memory requirements. We show that goal-directed global function inlining can be used to reduce the stack memory requirements of component-based embedded software, on average, to 40% of the requirement of a system compiled without inlining, and to 68% of the requirement of a system compiled with aggressive whole-program inlining that is not directed towards reducing stack usage.

1 Introduction

Inexpensive microcontrollers are used in a wide variety of embedded applications such as vehicle control, consumer electronics, medical automation, and sensor networks.
Static analysis of the behavior of software running on these processors is important for two main reasons:

- Embedded systems are often used in safety critical applications and can be hard to upgrade once deployed. Since undetected bugs can be very costly, it is useful to attempt to find software defects early.
- Severe constraints on cost, size, and power make it undesirable to overprovision resources as a hedge against unforeseen demand. Rather, worst-case resource requirements should be determined statically and accurately, even for resources like memory that are convenient to allocate in a dynamic style.

Fig. 1. Typical RAM layout for an embedded program with and without stack bounding (panels "Without stack bounding" and "With static stack bounding", RAM from 0 KB to 4 KB). Without a bound, developers must rely on guesswork to determine the amount of storage to allocate to the stack.

In this paper we describe the results of an experiment in applying static analysis techniques to binary programs in order to bound and reduce their stack memory requirements. We check embedded programs for stack safety: the property that they will not run out of stack memory at run time. Stack safety, which is not guaranteed by traditional type-safe languages like Java, is particularly important for embedded software because stack overflows can easily crash a system. The transparent dynamic stack expansion that is performed by general-purpose operating systems is infeasible on small embedded systems due to lack of virtual memory hardware and limited availability of physical memory. For example, 8-bit microcontrollers typically have between a few tens of bytes and a few tens of kilobytes of RAM. Bounds on stack depth can also be usefully incorporated into executable programs, for example to assign appropriate stack sizes to threads or to provide a heap allocator with as much storage as possible without compromising stack safety.

The alternative to static stack depth analysis that is currently used in industry is to ensure that memory
allocated to the stack exceeds the largest stack size ever observed during testing by some safety margin. A large safety margin would provide good insurance against stack overflow, but for embedded processors used in products such as sensor network nodes and consumer electronics, the degree of overprovisioning must be kept small in order to minimize per-unit product cost. Figure 1 illustrates the relationship between the testing-based and analysis-based approaches to allocating memory for the stack.

Testing-based approaches to software validation are inherently unreliable, and testing embedded software for maximum stack depth is particularly unreliable because its behavior is timing dependent: the worst observed stack depth depends on what code is executing when an interrupt is triggered and on whether further interrupts trigger before the first returns. For example, consider a hypothetical embedded system where the maximum stack depth occurs when the following events occur at almost the same time: 1) the main program summarizes data once a second, spending 100 microseconds at maximum stack depth; 2) a timer interrupt fires 100 times a second, spending 100 microseconds at maximum stack depth; and 3) a packet arrives on a network interface up to 10 times a second; the handler spends 100 microseconds at maximum stack depth. If these events occur independently of each other, then the worst case will occur roughly once every 10 years. This means that the worst case will probably not be discovered during testing, but will probably occur in the real world where there may be many instances of the embedded system. In practice, the events are not all independent and the timing of some events can be controlled by the test environment. However, we would expect a real system to spend less time at the worst-case stack depth and to involve more events.

Another drawback of the testing-based approach to determining stack depth is that it treats the system as a black box, providing developers with little or no feedback
about how to best optimize memory usage. Static stack analysis, on the other hand, identifies the critical path through the system and also the maximum stack consumption of each function; this usually exposes obvious candidates for optimization.

Using our method for statically bounding stack depth as a starting point, we have developed a novel way to automatically reduce the stack memory requirement of an embedded system. The optimization proceeds by evaluating the effect of a large number of potential program transformations in a feedback loop, applying only transformations that reduce the worst-case depth of the stack. Static analysis makes this kind of optimization feasible by rapidly providing accurate information about a program. Testing-based approaches to learning about system behavior, on the other hand, are slower and typically only explore a fraction of the possible state space.

Our work is preceded by a stack depth analysis by Brylow et al. [3] that also performs whole-program analysis of executable programs for embedded systems. However, while they focused on relatively small programs written by hand in assembly language, we focus on programs that are up to 30 times larger, and that are compiled from C to a RISC architecture. The added difficulties in analyzing larger, compiled programs necessitated a more powerful approach based on context-sensitive abstract interpretation of machine code; we motivate and describe this approach in Section 2. Section 3 discusses the problems in experimentally validating the abstract interpretation and stack depth analysis, and presents evidence that the analysis provides accurate results. In Section 4 we describe the use of a stack bounding tool to support automatically reducing the stack memory consumption of an embedded system. Finally, we compare our research to previous efforts in Section 5 and conclude in Section 6.

2 Bounding Stack Depth

Embedded system designers typically try to statically allocate resources needed by the system. This makes
systems more predictable and reliable by providing a priori bounds on resource consumption. However, an almost universal exception to this rule is that memory is dynamically allocated on the call stack. Stacks provide a useful model of storage, with constant-time allocation and deallocation and without fragmentation. Furthermore, the notion of a stack is designed into microcontrollers at a fundamental level. For example, hardware support for interrupts typically pushes the machine state onto the stack before calling a user-defined interrupt handler, and pops the machine state upon termination of the handler.

For developers of embedded systems, it is important not only to know that the stack depth is bounded, but also to have a tight bound: one that is not much greater than the true worst-case stack depth. This section describes the whole-program analysis that we use to obtain tight bounds on stack depth.

Our prototype stack analysis tool targets programs for the Atmel AVR, a popular family of microcontrollers. We chose to analyze binary program images, rather than source code, for a number of reasons:

- There is no need to predict compiler behavior. Many compiler decisions, such as those regarding function inlining and register allocation, have a strong effect on stack depth.
- Inlined assembly language is common in embedded systems, and a safe analysis must account for its effects.
- The source code for libraries and real-time operating systems is commonly not available for analysis.
- Since the analysis is independent of the compiler, developers are free to change compilers or compiler versions. In addition, the analysis is not fragile with respect to non-standard language extensions that embedded compilers commonly use to provide developers with fine-grained control over processor-specific features.
- Adding a post-compilation analysis step to the development process presents developers with a clean usage model.

2.1 Analysis Overview and Motivation

The first challenge in bounding stack depth is to
measure the contributions to the stack of each interrupt handler and of the main program. Since indirect function calls and recursion are uncommon in embedded systems [4], a callgraph for each entry point into the program can be constructed using standard analysis techniques. Given a callgraph it is usually straightforward to compute its stack requirement.

The second, more difficult, challenge in embedded systems is accurately estimating interactions between interrupt handlers and the main program to compute a maximum stack depth for the whole system. If interrupts are disabled while running interrupt handlers, one can safely estimate the stack bound of a system containing $n$ interrupt handlers using this formula:

$$\text{stack bound} = \text{depth}(\text{main}) + \max_{i=1..n} \text{depth}(\text{interrupt}_i)$$

However, interrupt handlers are often run with interrupts enabled to ensure that other interrupt handlers are able to meet real-time deadlines. If a system permits at most one concurrent instance of each interrupt handler, the worst-case stack depth of a system can be computed using this formula:

$$\text{stack bound} = \text{depth}(\text{main}) + \sum_{i=1..n} \text{depth}(\text{interrupt}_i)$$

Unfortunately, as we show in Section 3, this simple formula often provides unnecessarily pessimistic answers when used to analyze real systems where only some parts of some interrupt handlers run with interrupts enabled.

Fig. 2. This fragment of assembly language for Atmel AVR microcontrollers motivates our approach to program analysis and illustrates a common idiom in embedded software: disable interrupts, execute a critical section, and then reenable interrupts only if they had previously been enabled.

To obtain a safe, tight stack bound for realistic embedded systems, we developed a two-part analysis. The first must generate an accurate estimate of the state of the processor's interrupt mask at each point in the program, and also the effect of each instruction on the stack depth. The second part of the analysis (unlike the first) accounts for potential preemptions between interrupt handlers and can accurately
bound the global stack requirement for a system.

Figure 2 presents a fragment of machine code that motivates our approach to program analysis. Analogous code can be found in almost any embedded system: its purpose is to disable interrupts, execute a critical section that must run atomically with respect to interrupt handlers, and then reenable interrupts only if they had previously been enabled. There are a number of challenges in analyzing such code.

First, effects of arithmetic and logical operations must be modeled with enough accuracy to track data movement through general-purpose and special-purpose registers. In addition, partially unknown data must be modeled. For example, analysis of the code fragment must succeed even when only a single bit of the CPU status register (the master interrupt control bit) is initially known.

Second, dead edges in the control-flow graph must be detected and avoided. For example, when the example code fragment is called in a context where interrupts are disabled, it is important that the analysis conclude that the sei instruction is not executed, since assuming otherwise would pollute the estimate of the processor state at subsequent addresses.

Fig. 3. Modeling machine states and operations in the abstract interpretation: (a) lattice for each bit in the machine state; (b) logical operations on abstract bits and combining machine states at merge points.

Finally, to prevent procedural aliasing from degrading the estimate of the machine state, a context-sensitive analysis must be used. For example, in some systems the code in Figure 2 is called with interrupts disabled by some parts of the system and is called with interrupts enabled by other parts of the system. With a context-insensitive approach, the analysis concludes that since the initial state of the interrupt flag can vary, the final state of the interrupt flag can also vary, and so analysis of both callers of the function would proceed with the interrupt flag unknown. This can lead to large 
over-estimates in stack bounds since unknown values are propagated to any code that could execute after the call. With a context-sensitive analysis the two calls are analyzed separately, resulting in an accurate estimate of the interrupt state.

The next section describes the abstract interpretation we have developed to meet these challenges.

2.2 Abstracting the Processor State

The purpose of our abstract interpretation is to generate a safe, precise estimate of the state of the processor at each point in the program; this is a requirement for finding a tight bound on stack depth. Designing the abstract interpretation boils down to two main design decisions.

First, how much of the machine state should the analysis model? For programs that we have analyzed, it is sufficient to model the program counter, general-purpose registers, and several I/O registers. Atmel AVR chips contain 32 general-purpose registers and 64 I/O registers; each register stores eight bits. From the I/O space we model the registers that contain interrupt masks and the processor status register. We do not model main memory or most I/O registers, such as those that implement timers, analog-to-digital conversion, and serial communication.

Second, what is the abstract model for each element of machine state? We chose to model the machine at the bit level to capture the effect of bitwise operations on the interrupt mask and condition code register. We had initially attempted to model the machine at word granularity, and this turned out to lose too much information through conservative approximation. Each bit of machine state is modeled using the lattice depicted in Figure 3(a). The lattice contains the values 0 and 1 as well as a bottom element, ⊥, that corresponds to a bit that cannot be proven to have value 0 or 1 at a particular program point.

Figure 3(b) shows abstractions of some common logical operators. Abstractions of operators should always return a result that is as accurate as possible. For example, when all bits of the input to an 
instruction have the value 0 or 1, the execution of the instruction should have the same result that it would have on a real processor. In this respect our abstract interpreter implements most of the functionality of a standard CPU simulator.

For example, when the and instruction is executed with one fully known argument and one argument whose bits are all unknown, every result bit whose known input bit is 0 is itself known to be 0, while the remaining result bits stay unknown. Arithmetic operators are treated similarly, but require more care because bits in the result typically depend on multiple bits in the input. Furthermore, the abstract interpretation must take into account the effect of instructions on processor condition codes, since subsequent branching decisions are made using these values.

The example in Figure 2 illustrates two special cases that must be accounted for in the abstract interpretation. First, the add-with-carry instruction adc, when both of its arguments are the same register, acts as rotate-left-through-carry. In other words, it shifts each bit in its input one position to the left, with the leftmost bit going into the CPU's carry flag and the previous carry flag going into the rightmost bit. Second, the exclusive-or instruction eor, when both of its arguments are the same register, acts like a clear instruction: after its execution the register is known to contain all zero bits regardless of its previous contents.

2.3 Managing Abstract Processor States

An important decision in designing the analysis was when to create a copy of the abstract machine state at a particular program point, as opposed to merging two abstract states. The merge operator, shown in Figure 3(b), is lossy since a conservative approximation must always be made. We have chosen to implement a context-sensitive analysis, which means that we fork the machine state each time a function call is made, and at no other points in the program. This has several consequences. First, and most important, it means that the abstract interpretation is not forced to make a conservative approximation when a function is 
called from different points in the program where the processor is in different states. In particular, when a function is called both with interrupts enabled and disabled, the analysis is not forced to conclude that the status of the interrupt bit is unknown inside the function and upon return from it. Second, it means that we cannot show termination of a loop implemented within a function. This is not a problem at present since loops are irrelevant to the stack depth analysis as long as there is no net change in stack depth across the loop. However, it will become a problem if we decide to push our analysis forward to bound heap allocation or execution time. Third, it means that we can, in principle, detect termination of recursion. However, our current implementation rarely does so in practice because most recursion is bounded by values that are stored on the stack, which our analysis does not model. Finally, forking the state at function calls means that the state space of the stack analyzer might become large. This has not been a problem in practice; the largest programs that we have analyzed cause the analyzer to allocate about 140 MB. If memory requirements become a problem for the analysis, a relatively simple solution would be to merge program states that are identical or that are similar enough that a conservative merging will result in minimal loss of precision.

2.4 Abstract Interpretation and Stack Analysis Algorithms

The program analysis begins by initializing a worklist with all entry points into the program; entry points are found by examining the vector of interrupt handlers that is stored at the bottom of a program image, which includes the address of a startup routine that eventually jumps to main(). For each item in the worklist, the analyzer abstractly interprets a single instruction. If the interpretation changes the state of the processor at that program point, items are added to the worklist corresponding to each live control flow edge leaving the instruction. Termination is assured because the state space for a program is finite and because we never revisit a state.

The abstract interpretation detects control-flow edges that are dead in a particular context, and also control-flow edges that are dead in all contexts. In many systems we have analyzed, the abstract interpretation finds up to a dozen branches that are provably not taken. This illustrates the increased precision of our analysis relative to the dataflow analysis that an optimizing compiler has previously performed on the embedded program as part of a dead code elimination pass.

In the second phase, the analysis considers there to be a control flow edge from every instruction in the program to the first instruction of every interrupt handler that cannot be proven to be disabled at that program point. An interrupt is disabled if either the master interrupt bit is zero or the enable bit for the particular interrupt is zero. Once these edges are known, the worst-case stack depth for a program can be found using the method developed by Brylow et al. [3]: perform a depth-first search over control flow edges, explicit and implicit, keeping track of the effect of each instruction on the stack depth, and also keeping track of the largest stack depth seen so far.

A complication that we have encountered in many real programs is that interrupt handlers commonly run with all interrupts enabled, admitting the possibility that a new instance of an interrupt handler will be signaled before the previous instance terminates. From an analysis viewpoint reentrant interrupt handlers are a serious problem: systems containing them cannot be proven to be stack-safe without also reasoning about time. 
In effect, the stack bounding problem becomes predicated on the results of a real-time analysis that is well beyond the current capabilities of our tool.

In real systems that we have looked at, reentrant interrupt handlers are so common that we have provided a facility for working around the problem by permitting a developer to manually assert that a particular interrupt handler can preempt itself only up to a certain number of times. Programmers appear to commonly rely on ad hoc real-time reasoning, e.g., “this interrupt only arrives 10 times per second and so it cannot possibly interrupt itself.” In practice, most instances of this kind of reasoning should be considered to be design flaws: few interrupt handlers are written in a reentrant fashion, so it is usually better to design systems where concurrent instances of a single handler are not permitted. Furthermore, stack depth requirements and the potential for race conditions will be kept to a minimum if there are no cycles in the interrupt preemption graph, and if preemption of interrupt handlers is only permitted when necessary to meet a real-time deadline.

2.5 Other Challenges

In this section we address other challenges faced by the stack analysis tool: loads into the stack pointer, self-modifying code, indirect branches, indirect stores, and recursive function calls. These features can complicate or defeat static analysis. However, embedded developers tend to make very limited use of them, and in our experience static analysis of real programs is still possible and, moreover, effective.

We support code that increments or decrements the stack pointer by constants, for example to allocate or deallocate function-scoped data structures. Code that adds non-constants to the stack pointer (e.g., to allocate variable sized arrays on the stack) would require some extra work to bound the amount of space added to the stack. We also do not support code that changes the stack pointer to new values in a more general way, as is done in the context switch routine of a preemptive operating system.

The AVR has a Harvard architecture, making it possible to prove the absence of self-modifying code simply by ensuring that a program cannot reach a “store program memory” instruction. However, by reduction to the halting problem, self-modifying code cannot be reliably detected in the general case. Fortunately, use of self-modifying code is rare and discouraged: it is notoriously difficult to understand and also precludes reducing the cost of an embedded system by putting the program into ROM.

Our analysis must build a conservative approximation of the program's control flow graph. Indirect branches cause problems for program analysis because it can be difficult to tightly bound the set of potential branch targets. Our approach to dealing with indirect branches is based on the observation that they are usually used in a structured way, and the structure can be exploited to learn the set of targets. For example, when analyzing TinyOS [6] programs, the argument to the function TOS [...] it contained only 14 recursive loops. Our approach to dealing with recursion, therefore, is blunt: we require that developers explicitly specify a maximum iteration count for each recursive loop in a system. The analysis returns an unbounded stack depth if the developers neglect to specify a limit for a particular loop.

It would be straightforward to port our stack analyzer to other processors: the analysis algorithms, such as the whole-program analysis for worst-case stack depth, operate on an abstract representation of the program that is not processor dependent. However, the analysis would return pessimistic results for register-poor architectures such as the Motorola 68HC11, since code for those processors makes significant use of the stack, and stack values are not currently modeled by our tool. In particular, we would probably not obtain precise results for code equivalent to the code in Figure 2 that we used to motivate our approach. To handle register-poor architectures we 
are developing an approach to modeling the stack that is based on a simple type system for registers that are used as pointers into stack frames.

2.6 Using the Stack Tool

We have a prototype tool that implements our stack depth analysis. In its simplest mode of usage, the stack tool returns a single number: an upper bound on the stack depth for a system. For example:

    $ ./stacktool -w flybywire.elf
    total stack requirement from global analysis = 55

To make the tool more useful we provide a number of extra features, including switching between context-sensitive and context-insensitive program analysis, creating a graphical callgraph for a system, listing branches that can be proven to be dead in all contexts, finding the shortest path through a program that reaches the maximum stack depth, and printing a disassembled version of the embedded program with annotations indicating interrupt status and worst-case stack depth at each instruction. These are all useful in helping developers understand and manually reduce stack memory consumption in their programs.

There are other obvious ways to use the stack tool that we have not yet implemented. 
For example, using stack bounds to compute the maximum size of the heap for a system so that it stops just short of compromising stack safety, or computing a minimum safe stack size for individual threads in a multi-threaded embedded system. Ideally, the analysis would become part of the build process and values from the analysis would be used directly in the code being generated.

3 Validating the Analysis

We used several approaches to increase our confidence in the validity of our analysis techniques and their implementations.

3.1 Validating the Abstract Interpretation

To test the abstract interpretation, we modified a simulator for AVR processors to dump the state of the machine after executing each instruction. Then, we created a separate program to ensure that this concrete state was “within” the conservative approximation of the machine state produced by abstract interpretation at that address, and that the simulator did not execute any instructions that had been marked as dead code by the static analysis. During early development of the analysis this was helpful in finding bugs and in providing a much more thorough check on the abstract interpretation than manual inspection of analysis results, our next-best validation technique. We have tested the current version of the stack analysis tool by executing at least 100,000 instructions of about a dozen programs, including several that were written specifically to stress-test the analysis, and did not find any discrepancies.

3.2 Validating Stack Bounds

There are two important metrics for validating the bounds returned by the stack tool. 
The first is qualitative: Does the tool ever return an unsafe result? Testing the stack tool against actual execution of about a dozen embedded applications has not turned up any examples where it has returned a bound that is less than an observed stack depth. This justifies some confidence that our algorithms are sound.

Our second metric is quantitative: Is the tool capable of returning results that are close to the true worst-case stack depth for a system? The maximum observed stack depth, the worst-case stack depth estimate from the stack tool, and the (non-computable) true worst-case stack depth are related in this way:

$$\text{worst observed} \le \text{true worst} \le \text{estimated worst}$$

One might hope that the precision of the analysis could be validated straightforwardly by instrumenting some embedded systems to make them report their worst observed stack depth and comparing these values to the bounds on stack depth. For several reasons, this approach produces maximum observed stack depths that are significantly smaller than the estimated worst case and, we believe, the true worst case. First, the timing issues that we discussed in Section 1 come into play, making it very hard to observe interrupt handlers preempting each other even when it is clearly possible that they may do so. Second, even within the main function and individual interrupt handlers, it can be very difficult to force an embedded system to execute the code path that produces the worst-case stack depth. Embedded systems often present a narrower external interface than do traditional applications, and it is correspondingly harder to force them to execute certain code paths using test inputs. While the difficulty of thorough testing is frustrating, it does support our thesis that static program analysis is particularly important in this domain.

The 71 embedded applications that we used to test our analysis come from three families. The first is Autopilot, a simple cyclic-executive style control program for an autonomous helicopter [10]. The second is a collection of application programs that are distributed with TinyOS version 0.6.1, a small operating system for networked sensor
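
As a rough illustration of the bit-level abstraction from Section 2.2 and the two simple stack-bound formulas from Section 2.1, the following Python sketch may help. It is not the authors' implementation: every name in it (`BOT`, `abs_and`, `merge`, `adc_same_register`, and so on) is hypothetical, registers are encoded as Python lists with the most significant bit first, and the two bound functions assume the formulas read as "main plus the deepest handler" and "main plus the sum of all handlers".

```python
# Illustrative sketch only: names and encodings are assumptions, not the tool's code.
BOT = None  # abstract bit whose value is unknown (the lattice's bottom element)


def abs_and(a, b):
    """Abstract AND: a known 0 on either side gives 0 even if the other bit is unknown."""
    if a == 0 or b == 0:
        return 0
    if a == 1 and b == 1:
        return 1
    return BOT


def abs_xor(a, b):
    """Abstract XOR: the result is known only when both inputs are known."""
    if a is BOT or b is BOT:
        return BOT
    return a ^ b


def merge(a, b):
    """Combine two abstract bits at a control-flow merge point (lossy)."""
    return a if a == b else BOT


def bitwise(op, xs, ys):
    """Apply an abstract bit operation position by position to two registers."""
    return [op(x, y) for x, y in zip(xs, ys)]


def eor_same_register(reg):
    """Special case: eor r, r clears r whatever its previous contents were."""
    return [0] * len(reg)


def adc_same_register(reg, carry):
    """Special case: adc r, r rotates r left through the carry flag.

    reg lists the most significant bit first; returns (new_reg, new_carry).
    """
    return reg[1:] + [carry], reg[0]


def bound_handlers_not_preemptible(depth_main, handler_depths):
    """Stack bound when interrupts stay disabled inside every handler."""
    return depth_main + max(handler_depths, default=0)


def bound_one_instance_each(depth_main, handler_depths):
    """Stack bound when each handler may be active at most once at a time."""
    return depth_main + sum(handler_depths)


if __name__ == "__main__":
    known = [1, 1, 1, 1, 0, 0, 0, 0]
    unknown = [BOT] * 8
    print(bitwise(abs_and, known, unknown))
    print(bound_handlers_not_preemptible(20, [10, 30]),
          bound_one_instance_each(20, [10, 30]))
```

Note that a generic `abs_xor` applied to a register and itself would return unknown bits wherever the input is unknown, which is why the sketch, like the text, treats `eor` with identical operands as a separate case.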

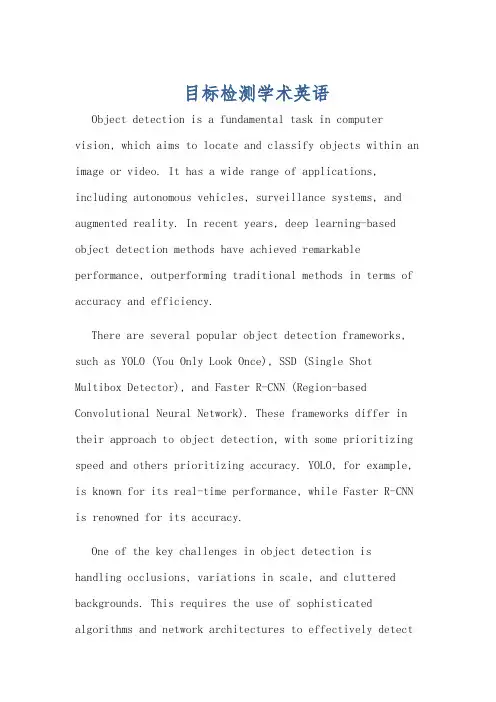

Academic English: Object Detection

Object detection is a fundamental task in computer vision, which aims to locate and classify objects within an image or video. It has a wide range of applications, including autonomous vehicles, surveillance systems, and augmented reality. In recent years, deep learning-based object detection methods have achieved remarkable performance, outperforming traditional methods in terms of accuracy and efficiency.

There are several popular object detection frameworks, such as YOLO (You Only Look Once), SSD (Single Shot MultiBox Detector), and Faster R-CNN (Region-based Convolutional Neural Network). These frameworks differ in their approach to object detection, with some prioritizing speed and others prioritizing accuracy. YOLO, for example, is known for its real-time performance, while Faster R-CNN is known for its accuracy.

One of the key challenges in object detection is handling occlusions, variations in scale, and cluttered backgrounds. This requires sophisticated algorithms and network architectures that can detect objects under these conditions. Additionally, object detection models need to be robust to changes in lighting, weather, and other environmental factors.

In recent years, there has been a surge of interest in improving object detection performance through the use of attention mechanisms, which allow the model to focus on relevant parts of the image. This has led to the development of attention-based models such as DETR (DEtection TRansformer) for detection and SETR (SEgmentation TRansformer) for the related task of segmentation.

Furthermore, the integration of object detection with other computer vision tasks, such as instance segmentation and pose estimation, has become an active area of research. This integration allows for a more comprehensive understanding of the visual scene and enables more sophisticated applications.

In conclusion, object detection is a critical task in computer vision with a wide range of applications. Deep learning-based methods have significantly advanced the state of the art in object detection, and ongoing research continues to push the boundaries of performance and applicability.

JOINT INDUSTRY STANDARD

Acoustic Microscopy for Non-Hermetic Encapsulated Electronic Components

IPC/JEDEC J-STD-035
APRIL 1999

Supersedes IPC-SM-786
Supersedes IPC-TM-650, 2.6.22

Notice

EIA/JEDEC and IPC Standards and Publications are designed to serve the public interest through eliminating misunderstandings between manufacturers and purchasers, facilitating interchangeability and improvement of products, and assisting the purchaser in selecting and obtaining with minimum delay the proper product for his particular need. Existence of such Standards and Publications shall not in any respect preclude any member or nonmember of EIA/JEDEC or IPC from manufacturing or selling products not conforming to such Standards and Publications, nor shall the existence of such Standards and Publications preclude their voluntary use by those other than EIA/JEDEC and IPC members, whether the standard is to be used either domestically or internationally.

Recommended Standards and Publications are adopted by EIA/JEDEC and IPC without regard to whether their adoption may involve patents on articles, materials, or processes. By such action, EIA/JEDEC and IPC do not assume any liability to any patent owner, nor do they assume any obligation whatever to parties adopting the Recommended Standard or Publication. Users are also wholly responsible for protecting themselves against all claims of liabilities for patent infringement.

The material in this joint standard was developed by the EIA/JEDEC JC-14.1 Committee on Reliability Test Methods for Packaged Devices and the IPC Plastic Chip Carrier Cracking Task Group (B-10a).

The J-STD-035 supersedes IPC-TM-650, Test Method 2.6.22.

For Technical Information Contact:

Electronic Industries Alliance/JEDEC (Joint Electron Device Engineering Council)
2500 Wilson Boulevard
Arlington, VA 22201
Phone (703) 907-7560
Fax (703) 907-7501

IPC
2215 Sanders Road
Northbrook, IL 60062-6135
Phone (847) 509-9700
Fax (847) 509-9798

Please use the Standard Improvement Form shown at the end of this document.

© Copyright 1999. The Electronic Industries Alliance, Arlington, Virginia, and IPC, Northbrook, Illinois. All rights reserved under both international and Pan-American copyright conventions. Any copying, scanning or other reproduction of these materials without the prior written consent of the copyright holder is strictly prohibited and constitutes infringement under the Copyright Law of the United States.

A joint standard developed by the EIA/JEDEC JC-14.1 Committee on Reliability Test Methods for Packaged Devices and the B-10a Plastic Chip Carrier Cracking Task Group of IPC

Users of this standard are encouraged to participate in the development of future revisions.

Contact:

EIA/JEDEC Engineering Department
2500 Wilson Boulevard
Arlington, VA 22201
Phone (703) 907-7500
Fax (703) 907-7501

IPC
2215 Sanders Road
Northbrook, IL 60062-6135
Phone (847) 509-9700
Fax (847) 509-9798

Acknowledgment

Members of the Joint IPC-EIA/JEDEC Moisture Classification Task Group have worked to develop this document. We would like to thank them for their dedication to this effort. Any Standard involving a complex technology draws material from a vast number of sources. While the principal members of the Joint Moisture Classification Working Group are shown below, it is not possible to include all of those who assisted in the evolution of this Standard. To each of them, the members of the EIA/JEDEC and IPC extend their gratitude.

IPC Packaged Electronic Components Committee Chairman: Martin Freedman, AMP, Inc.
IPC Plastic Chip Carrier Cracking Task Group, B-10a Chairman: Steven Martell, Sonoscan, Inc.
EIA/JEDEC JC14.1 Committee Chairman: Jack McCullen, Intel Corp.
EIA/JEDEC JC14 Chairman: Nick Lycoudes, Motorola

Joint Working Group Members

Charlie Baker, TI
Christopher Brigham, Hi/Fn
Ralph Carbone, Hewlett Packard Co.
Don Denton, TI
Matt Dotty, Amkor
Michele J. DiFranza, The Mitre Corp.
Leo Feinstein, Allegro Microsystems Inc.
Barry Fernelius, Hewlett Packard Co.
Chris Fortunko, National Institute of Standards
Robert J. Gregory, CAE Electronics, Inc.
Curtis Grosskopf, IBM Corp.
Bill Guthrie, IBM Corp.
Phil Johnson, Philips Semiconductors
Nick Lycoudes, Motorola
Steven R. Martell, Sonoscan Inc.
Jack McCullen, Intel Corp.
Tom Moore, TI
David Nicol, Lucent Technologies Inc.
Pramod Patel, Advanced Micro Devices Inc.
Ramon R. Reglos, Xilinx
Corazon Reglos, Adaptec
Gerald Servais, Delphi Delco Electronics Systems
Richard Shook, Lucent Technologies Inc.
E. Lon Smith, Lucent Technologies Inc.
Randy Walberg, National Semiconductor Corp.
Charlie Wu, Adaptec
Edward Masami Aoki, Hewlett Packard Laboratories
Fonda B. Wu, Raytheon Systems Co.
Richard W. Boerdner, EJE Research
Victor J. Brzozowski, Northrop Grumman ES&SD
Macushla Chen, Wus Printed Circuit Co. Ltd.
Jeffrey C. Colish, Northrop Grumman Corp.
Samuel J. Croce, Litton Aero Products Division
Derek D-Andrade, Surface Mount Technology Centre
Rao B. Dayaneni, Hewlett Packard Laboratories
Rodney Dehne, OEM Worldwide
James F. Maguire, Boeing Defense & Space Group
Kim Finch, Boeing Defense & Space Group
Alelie Funcell, Xilinx Inc.
Constantino J. Gonzalez, ACME
Munir Haq, Advanced Micro Devices Inc.
Larry A. Hargreaves, DC. Scientific Inc.
John T. Hoback, Amoco Chemical Co.
Terence Kern, Axiom Electronics Inc.
Connie M. Korth, K-Byte/Hibbing Manufacturing
Gabriele Marcantonio, NORTEL
Charles Martin, Hewlett Packard Laboratories
Richard W. Max, Alcatel Network Systems Inc.
Patrick McCluskey, University of Maryland
James H. Moffitt, Moffitt Consulting Services
Robert Mulligan, Motorola Inc.
James E. Mumby, Ciba
John Northrup, Lockheed Martin Corp.
Dominique K. Numakura, Litchfield Precision Components
Nitin B. Parekh, Unisys Corp.
Bella Poborets, Lucent Technologies Inc.
D. Elaine Pope, Intel Corp.
Ray Prasad, Ray Prasad Consultancy Group
Albert Puah, Adaptec Inc.
William Sepp, Technic Inc.
Ralph W. Taylor, Lockheed Martin Corp.
Ed R. Tidwell, DSC Communications Corp.
Nick Virmani, Naval Research Lab
Ken Warren, Corlund Electronics Corp.
Yulia B. Zaks, Lucent Technologies Inc.

Table of Contents

1 SCOPE
2 DEFINITIONS
2.1 A-mode
2.2 B-mode
2.3 Back-Side Substrate View Area
2.4 C-mode
2.5 Through Transmission Mode
2.6 Die Attach View Area
2.7 Die Surface View Area
2.8 Focal Length (FL)
2.9 Focus Plane
2.10 Leadframe (L/F) View Area
2.11 Reflective Acoustic Microscope
2.12 Through Transmission Acoustic Microscope
2.13 Time-of-Flight (TOF)
2.14 Top-Side Die Attach Substrate View Area
3 APPARATUS
3.1 Reflective Acoustic Microscope System
3.2 Through Transmission Acoustic Microscope System
4 PROCEDURE
4.1 Equipment Setup
4.2 Perform Acoustic Scans
Appendix A Acoustic Microscopy Defect Check Sheet
Appendix B Potential Image Pitfalls
Appendix C Some Limitations of Acoustic Microscopy
Appendix D Reference Procedure for Presenting Applicable Scanned Data

Figures

Figure 1 Example of A-mode Display
Figure 2 Example of B-mode Display
Figure 3 Example of C-mode Display
Figure 4 Example of Through Transmission Display
Figure 5 Diagram of a Reflective Acoustic Microscope System
Figure 6 Diagram of a Through Transmission Acoustic Microscope System

1 SCOPE

This test method defines the procedures for performing acoustic microscopy on non-hermetic encapsulated electronic components. This method provides users with an acoustic microscopy process flow for detecting defects non-destructively in plastic packages while achieving reproducibility.

2 DEFINITIONS

2.1 A-mode Acoustic data collected at the smallest X-Y-Z region defined by the limitations of the given acoustic microscope. An A-mode display contains amplitude and phase/polarity information as a function of time of flight at a single point in the X-Y plane. See Figure 1 - Example of A-mode Display.

Figure 1 Example of A-mode Display

2.2 B-mode Acoustic data collected 
along an X-Z or Y-Z plane versus depth using a reflective acoustic microscope. A B-mode scan contains amplitude and phase/polarity information as a function of time of flight at each point along the scan line. A B-mode scan furnishes a two-dimensional (cross-sectional) description along a scan line (X or Y). See Figure 2 - Example of B-mode Display.

Figure 2 Example of B-mode Display (bottom half of picture on left)

2.3 Back-Side Substrate View Area (Refer to Appendix A, Type IV) The interface between the encapsulant and the back of the substrate within the outer edges of the substrate surface.

2.4 C-mode Acoustic data collected in an X-Y plane at depth (Z) using a reflective acoustic microscope. A C-mode scan contains amplitude and phase/polarity information at each point in the scan plane. A C-mode scan furnishes a two-dimensional (area) image of echoes arising from reflections at a particular depth (Z). See Figure 3 - Example of C-mode Display.

Figure 3 Example of C-mode Display

2.5 Through Transmission Mode Acoustic data collected in an X-Y plane throughout the depth (Z) using a through transmission acoustic microscope. A Through Transmission mode scan contains only amplitude information at each point in the scan plane. A Through Transmission scan furnishes a two-dimensional (area) image of transmitted ultrasound through the complete thickness/depth (Z) of the sample/component. See Figure 4 - Example of Through Transmission Display.

Figure 4 Example of Through Transmission Display

2.6 Die Attach View Area (Refer to Appendix A, Type II) The interface between the die and the die attach adhesive and/or the die attach adhesive and the die attach substrate.

2.7 Die Surface View Area (Refer to Appendix A, Type I) The interface between the encapsulant and the active side of the die.

2.8 Focal Length (FL) The distance in water at which a transducer's spot size is at a minimum.

2.9 Focus Plane The X-Y plane at a depth (Z) at which the amplitude of the acoustic signal is 
maximized.

2.10 Leadframe (L/F) View Area (Refer to Appendix A, Type V) The imaged area which extends from the outer L/F edges of the package to the L/F "tips" (wedge bond/stitch bond region of the innermost portion of the L/F).

2.11 Reflective Acoustic Microscope An acoustic microscope that uses one transducer as both the pulser and receiver. (This is also known as a pulse/echo system.) See Figure 5 - Diagram of a Reflective Acoustic Microscope System.

2.12 Through Transmission Acoustic Microscope An acoustic microscope that transmits ultrasound completely through the sample from a sending transducer to a receiver on the opposite side. See Figure 6 - Diagram of a Through Transmission Acoustic Microscope System.

3.1.6 A broad band acoustic transducer with a center frequency in the range of 10 to 200 MHz for subsurface imaging.

3.2 Through Transmission Acoustic Microscope System (see Figure 6) comprised of:

3.2.1 Items 3.1.1 to 3.1.6 above

3.2.2 Ultrasonic pulser (can be a pulser/receiver as in 3.1.1)

3.2.3 Separate receiving transducer or ultrasonic detection system

3.3 Reference packages or standards, including packages with delamination and packages without delamination, for use during equipment setup.

3.4 Sample holder for pre-positioning samples. The holder should keep the samples from moving during the scan and maintain planarity.

4 PROCEDURE

This procedure is generic to all acoustic microscopes. For operational details related to this procedure that apply to a specific model of acoustic microscope, consult the manufacturer's operational manual.

4.1 Equipment Setup

4.1.1 Select the transducer with the highest useable ultrasonic frequency, subject to the limitations imposed by the media thickness and acoustic characteristics, package configuration, and transducer availability, to analyze the interfaces of interest. The transducer selected should have a low enough frequency to provide a clear signal from the interface of interest. The transducer should have a high 
enough frequency to delineate the interface of interest.

Note: Through transmission mode may require a lower frequency and/or longer focal length than reflective mode. Through transmission is effective for the initial inspection of components to determine if defects are present.

4.1.2 Verify setup with the reference packages or standards (see 3.3 above) and settings that are appropriate for the transducer chosen in 4.1.1 to ensure that the critical parameters at the interface of interest correlate to the reference standard utilized.

4.1.3 Place units in the sample holder in the coupling medium such that the upper surface of each unit is parallel with the scanning plane of the acoustic transducer. Sweep air bubbles away from the unit surface and from the bottom of the transducer head.

4.1.4 At a fixed distance (Z), align the transducer and/or stage for the maximum reflected amplitude from the top surface of the sample. The transducer must be perpendicular to the sample surface.

4.1.5 Focus by maximizing the amplitude, in the A-mode display, of the reflection from the interface designated for imaging. This is done by adjusting the Z-axis distance between the transducer and the sample.

4.2 Perform Acoustic Scans

4.2.1 Inspect the acoustic image(s) for any anomalies, verify that the anomaly is a package defect or an artifact of the imaging process, and record the results. (See Appendix A for an example of a check sheet that may be used.) To determine if an anomaly is a package defect or an artifact of the imaging process it is recommended to analyze the A-mode display at the location of the anomaly.

4.2.2 Consider potential pitfalls in image interpretation listed in, but not limited to, Appendix B and some of the limitations of acoustic microscopy listed in, but not limited to, Appendix C. If necessary, make adjustments to the equipment setup to optimize the results and rescan.

4.2.3 Evaluate the acoustic images using the failure criteria specified in other appropriate
documents, such as J-STD-020.

4.2.4 Record the images and the final instrument setup parameters for documentation purposes. An example checklist is shown in Appendix D.

Appendix A
Acoustic Microscopy Defect Check Sheet (continued)

CIRCUIT SIDE SCAN

Image File Name/Path:

Delamination
    (Type I) Die Circuit Surface/Encapsulant   Number Affected:   Average %:   Location: Corner / Edge / Center
    (Type II) Die/Die Attach   Number Affected:   Average %:   Location: Corner / Edge / Center
    (Type III) Encapsulant/Substrate   Number Affected:   Average %:   Location: Corner / Edge / Center
    (Type V) Interconnect tip   Number Affected:   Average %:
             Interconnect   Number Affected:   Max. % Length:
    (Type VI) Intra-Laminate   Number Affected:   Average %:   Location: Corner / Edge / Center
    Comments:

Cracks
    Are cracks present: Yes / No
    If yes: Do any cracks intersect: bond wire / ball bond / wedge bond / tab bump / tab lead
    Does crack extend from lead finger to any other internal feature: Yes / No
    Does crack extend more than two-thirds the distance from any internal feature to the external surface of the package: Yes / No
    Additional verification required: Yes / No
    Comments:

Mold Compound Voids
    Are voids present: Yes / No
    If yes: Approx. size:   Location:   (if multiple voids, use comment section)
    Do any voids intersect: bond wire / ball bond / wedge bond / tab bump / tab lead
    Additional verification required: Yes / No
    Comments:

NON-CIRCUIT SIDE SCAN

Image File Name/Path:

Delamination
    (Type IV) Encapsulant/Substrate   Number Affected:   Average %:   Location: Corner / Edge / Center
    (Type II) Substrate/Die Attach   Number Affected:   Average %:   Location: Corner / Edge / Center
    (Type V) Interconnect   Number Affected:   Max. % Length:   Location: Corner / Edge / Center
    (Type VI) Intra-Laminate   Number Affected:   Average %:   Location: Corner / Edge / Center
    (Type VII) Heat Spreader   Number Affected:   Average %:   Location: Corner / Edge / Center
    Additional verification required: Yes / No
    Comments:

Cracks
    Are cracks present: Yes / No
    If yes: Does crack extend more than two-thirds the
distance from any internal feature to the external surface of the package: Yes / No
    Additional verification required: Yes / No
    Comments:

Mold Compound Voids
    Are voids present: Yes / No
    If yes: Approx. size:   Location:   (if multiple voids, use comment section)
    Additional verification required: Yes / No
    Comments:

Appendix B
Potential Image Pitfalls

OBSERVATIONS, each followed by its CAUSES/COMMENTS:

Unexplained loss of front surface signal
    Gain setting too low
    Symbolization on package surface
    Ejector pin knockouts
    Pin 1 and other mold marks
    Dust, air bubbles, fingerprints, residue
    Scratches, scribe marks, pencil marks
    Cambered package edge

Unexplained loss of subsurface signal
    Gain setting too low
    Transducer frequency too high
    Acoustically absorbent (rubbery) filler
    Large mold compound voids
    Porosity/high concentration of small voids
    Angled cracks in package
    "Dark line boundary" (phase cancellation)
    Burned molding compound (ESD/EOS damage)

False or spotty indication of delamination
    Low acoustic impedance coating (polyimide, gel)
    Focus error
    Incorrect delamination gate setup
    Multilayer interference effects

False indication of adhesion
    Gain set too high (saturation)
    Incorrect delamination gate setup
    Focus error
    Overlap of front surface and subsurface echoes (transducer frequency too low)
    Fluid filling delamination areas

Apparent voiding around die edge
    Reflection from wire loops
    Incorrect setting of void gate

Graded intensity
    Die tilt or lead frame deformation
    Sample tilt

Appendix C
Some Limitations of Acoustic Microscopy

Acoustic microscopy is an analytical technique that provides a non-destructive method for examining plastic encapsulated components for the existence of delaminations, cracks, and voids. This technique has limitations that include the following:

LIMITATION: Acoustic microscopy has difficulty in finding small defects if the package is too thick.
REASON: The ultrasonic signal becomes more attenuated as a function of two factors: the depth into the package and the transducer frequency. The greater the depth, the greater the
attenuation. Similarly, the higher the transducer frequency, the greater the attenuation as a function of depth.

LIMITATION: There are limitations on the Z-axis (axial) resolution.
REASON: This is a function of the transducer frequency. The higher the transducer frequency, the better the resolution. However, the higher frequency signal becomes attenuated more quickly as a function of depth.

LIMITATION: There are limitations on the X-Y (lateral) resolution.
REASON: The X-Y (lateral) resolution is a function of a number of different variables including:
    • Transducer characteristics, including frequency, element diameter, and focal length
    • Absorption and scattering of acoustic waves as a function of the sample material
    • Electromechanical properties of the X-Y stage

LIMITATION: Irregularly shaped packages are difficult to analyze.
REASON: The technique requires some kind of flat reference surface. Typically, the upper surface of the package or the die surface can be used as references. In some packages, cambered package edges can cause difficulty in analyzing defects near the edges and below their surfaces.

LIMITATION: Edge Effect
REASON: The edges cause difficulty in analyzing defects near the edge of any internal features.

Appendix D
Reference Procedure for Presenting Applicable Scanned Data

Most of the settings described may be captured as a default for the particular supplier/product with specific changes recorded on a sample or lot basis.

Setup Configuration (Digital Setup File Name and Contents)

Calibration Procedure and Calibration/Reference Standards used

Transducer
    Manufacturer
    Model
    Center frequency
    Serial number
    Element diameter
    Focal length in water

Scan Setup
    Scan area (X-Y dimensions)
    Scan step size
        Horizontal
        Vertical
    Displayed resolution
        Horizontal
        Vertical
    Scan speed

Pulser/Receiver Settings
    Gain
    Bandwidth
    Pulse
    Energy
    Repetition rate
    Receiver attenuation
    Damping
    Filter
    Echo amplitude

Pulse Analyzer Settings
    Front surface gate delay relative to trigger pulse
    Subsurface gate (if used)
    High pass filter
    Detection threshold for positive
oscillation, negative oscillation
    A/D settings
        Sampling rate
        Offset setting

Per Sample Settings
    Sample orientation (top or bottom (flipped) view and location of pin 1 or some other distinguishing characteristic)
    Focus (point, depth, interface)
    Reference plane
    Non-default parameters
    Sample identification information to uniquely distinguish it from others in the same group

Reference Procedure for Presenting Scanned Data
    Image file types and names
    Gray scale and color image legend definitions
    Significance of colors
    Indications or definition of delamination
    Image dimensions
    Depth scale of TOF
    Deviation from true aspect ratio
    Image type: A-mode, B-mode, C-mode, TOF, Through Transmission

A-mode waveforms should be provided for points of interest, such as delaminated areas. In addition, an A-mode image should be provided for a bonded area as a control.

Standard Improvement Form
IPC/JEDEC J-STD-035

The purpose of this form is to provide the Technical Committee of IPC with input from the industry regarding usage of the subject standard. Individuals or companies are invited to submit comments to IPC. All comments will be collected and dispersed to the appropriate committee(s). If you can provide input, please complete this form and return to:

IPC
2215 Sanders Road
Northbrook, IL 60062-6135
Fax 847.509.9798

1. I recommend changes to the following:
    Requirement, paragraph number:
    Test Method number:   paragraph number:
    The referenced paragraph number has proven to be: Unclear / Too Rigid / In Error / Other

2. Recommendations for correction:

3. Other suggestions for document improvement:

Submitted by:
    Name:   Telephone:
    Company:   E-mail:
    Address:   City/State/Zip:
    Date:

ASSOCIATION CONNECTING ELECTRONICS INDUSTRIES
ISBN #1-580982-28-X
2215 Sanders Road, Northbrook, IL 60062-6135
Tel. 847.509.9700 Fax 847.509.9798
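
Illustrative note on the Appendix D checklist: the following is a minimal sketch, not part of the standard, of how the Transducer entries of that checklist might be captured as a structured record so that unfilled entries are easy to spot. The class name, field names, helper functions and the units (MHz, mm) are all hypothetical choices made for this example. The only values taken from the text are the checklist item names and the 10 to 200 MHz center-frequency range given in 3.1.6 for subsurface imaging.

```python
from dataclasses import dataclass, fields
from typing import List, Optional


@dataclass
class Transducer:
    """Transducer entries of the Appendix D checklist.

    Field names and units are assumptions for illustration only;
    the standard lists the items but does not prescribe a data format.
    """
    manufacturer: Optional[str] = None
    model: Optional[str] = None
    center_frequency_mhz: Optional[float] = None
    serial_number: Optional[str] = None
    element_diameter_mm: Optional[float] = None
    focal_length_in_water_mm: Optional[float] = None


def missing_entries(record) -> List[str]:
    """Return the names of checklist fields that are still unset (None)."""
    return [f.name for f in fields(record) if getattr(record, f.name) is None]


def in_subsurface_range(transducer: Transducer) -> bool:
    """True if the center frequency lies in the 10 to 200 MHz range of 3.1.6."""
    freq = transducer.center_frequency_mhz
    return freq is not None and 10.0 <= freq <= 200.0


if __name__ == "__main__":
    # Hypothetical example values, not taken from any real instrument.
    t = Transducer(manufacturer="ExampleCo", model="X1", center_frequency_mhz=50.0)
    print("Unfilled entries:", missing_entries(t))
    print("Within 3.1.6 range:", in_subsurface_range(t))
```

The same pattern could be extended to the Scan Setup, Pulser/Receiver, Pulse Analyzer and Per Sample groups. Keeping every field optional reflects the Appendix D remark that most settings may be captured as a default, with only specific changes recorded per sample or lot.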