Oracle Has a Dedicated Solution for Connecting to HDFS


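How HDFS Ensures Reliability

What is HDFS? HDFS (Hadoop Distributed File System) is one of the core components of the Apache Hadoop project.

It is designed to run on inexpensive commodity hardware and to handle large data sets, with files typically gigabytes to terabytes in size and tens of millions of files in a single instance.

HDFS uses a master/slave architecture. One node (the NameNode) manages the file system, and the other nodes (DataNodes) store the files.

Files are split into blocks, and each block has several replicas on different DataNodes.

This design keeps data reliable and allows fast access.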

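Why Reliability Matters

For big data applications, data reliability is critical.

An unreliable storage system in this environment can lead to data corruption, data loss, or inconsistency.

To avoid these problems, HDFS uses a range of techniques to keep data reliable and consistent.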

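Measures HDFS Uses to Ensure Reliability

The core techniques HDFS uses to keep data reliable are described below.

Data replication

HDFS protects stored data through replication.

Files are divided into blocks, and these blocks are replicated across multiple nodes in the HDFS cluster.

By default, each block is replicated to three DataNodes.

Because of this replication, the data can still be found on other nodes after a failure.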

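Block health checks

HDFS keeps blocks durable and consistent by running routine checks and repair operations.

Two kinds of processes take part in this work: the NameNode and the DataNodes.

The NameNode uses a "heartbeat" mechanism to check the health of the nodes in the cluster.

If the NameNode stops receiving heartbeats from a node, it marks that node as unavailable.

Each DataNode periodically sends a block report to the NameNode. The report lists the blocks the DataNode holds, together with their status and location.

If the NameNode finds that a block has lost replicas, it schedules replication to copy the block to other nodes.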
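The following toy model illustrates the heartbeat and block-report logic described above. It shows how missed heartbeats turn into a set of dead nodes, and how those dead nodes turn into re-replication tasks. The timeout formula uses Hadoop's default settings (a 3 s heartbeat interval and a 5 min recheck interval, which give about 10.5 minutes). `ToyNameNode` and its methods are hypothetical names and do not come from the HDFS code base.

```python
from dataclasses import dataclass, field

# Defaults of dfs.heartbeat.interval and dfs.namenode.heartbeat.recheck-interval
HEARTBEAT_INTERVAL_S = 3
RECHECK_INTERVAL_S = 300
# A DataNode is considered dead after 2 * recheck + 10 * heartbeat = 630 s
DEAD_NODE_TIMEOUT_S = 2 * RECHECK_INTERVAL_S + 10 * HEARTBEAT_INTERVAL_S


@dataclass
class ToyNameNode:
    """Toy model of the NameNode's view of DataNodes (hypothetical, not HDFS code)."""
    replication: int = 3
    last_heartbeat: dict = field(default_factory=dict)   # datanode -> last heartbeat time (s)
    block_locations: dict = field(default_factory=dict)  # block id -> set of datanodes

    def heartbeat(self, node, now):
        self.last_heartbeat[node] = now

    def block_report(self, node, blocks):
        for block in blocks:
            self.block_locations.setdefault(block, set()).add(node)

    def dead_nodes(self, now):
        return {n for n, t in self.last_heartbeat.items() if now - t > DEAD_NODE_TIMEOUT_S}

    def under_replicated(self, now):
        """Map block id -> number of replicas missing once dead nodes are excluded."""
        dead = self.dead_nodes(now)
        missing = {}
        for block, nodes in self.block_locations.items():
            gap = self.replication - len(nodes - dead)
            if gap > 0:
                missing[block] = gap
        return missing

    def plan_rereplication(self, now):
        """Return (block, source, target) copy tasks that restore the replication factor."""
        dead = self.dead_nodes(now)
        live = sorted(set(self.last_heartbeat) - dead)
        plan = []
        for block, gap in sorted(self.under_replicated(now).items()):
            holders = sorted(self.block_locations[block] - dead)
            if not holders:
                continue  # every replica is gone; nothing left to copy from
            targets = [n for n in live if n not in holders][:gap]
            plan.extend((block, holders[0], t) for t in targets)
        return plan
```

For example, suppose `dn1` stops sending heartbeats after time 0 while `dn2` to `dn4` keep reporting. At time 700 `dn1` counts as dead, and a block stored on `dn1`, `dn2`, and `dn3` gets one copy task from `dn2` to `dn4`.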

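DataNode redundancy

HDFS spreads stored data across multiple nodes according to predefined replication rules.

When a node fails, the system automatically re-replicates the missing data from the other available nodes.

HDFS is also rack-aware: where possible it places replicas on different racks, which greatly reduces the impact of losing an entire rack.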
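The next sketch shows rack-aware placement for a three-replica block. It follows the classic description of the policy: the first replica goes on the writer's node, the second on another node in the same rack, and the third on a node in a different rack. Newer Hadoop documentation describes a variant in which the second and third replicas share a remote rack. Either way, only one rack-to-rack transfer is needed, and the data survives the loss of a rack. `place_replicas` is a hypothetical helper and is not Hadoop's `BlockPlacementPolicyDefault`.

```python
import random
from typing import Dict, List, Optional


def place_replicas(topology: Dict[str, List[str]], writer: str, replication: int = 3,
                   rng: Optional[random.Random] = None) -> List[str]:
    """Pick DataNodes for one block: two on the writer's rack, the next on another rack.

    topology maps rack name -> DataNode names. Simplified illustration only.
    """
    rng = rng or random.Random()
    rack_of = {node: rack for rack, nodes in topology.items() for node in nodes}
    local_rack = rack_of[writer]
    chosen = [writer]                                    # 1st replica: the writer's node
    same_rack = [n for n in topology[local_rack] if n != writer]
    if replication >= 2 and same_rack:
        chosen.append(rng.choice(same_rack))             # 2nd: another node, same rack
    remote = [n for n in rack_of if rack_of[n] != local_rack]
    if len(chosen) < replication and remote:
        chosen.append(rng.choice(remote))                # 3rd: a node on a different rack
    rest = [n for n in rack_of if n not in chosen]       # extra replicas: any unused node
    rng.shuffle(rest)
    chosen.extend(rest[:max(0, replication - len(chosen))])
    return chosen[:replication]
```

With racks `rack1 = [a1, a2, a3]` and `rack2 = [b1, b2]`, a block written from `a1` lands on `a1`, then on `a2` or `a3`, and then on `b1` or `b2`.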

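Overview of HDFS Data Reliability Measures

The Apache Hadoop Distributed File System (HDFS) is the Java-based distributed file system in the Apache Hadoop software library.

It is one of the most popular distributed file systems on the Java platform. Its main design goals are to store large data sets across a cluster, provide high-throughput data access, scale horizontally, and tolerate faults.

To keep large data sets safe and reliable, HDFS provides a set of data reliability measures.

These measures prevent data loss and preserve data integrity, so that data processing stays reliable.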

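HDFS Data Reliability Measures

Data replication

Replication is one of the basic measures HDFS uses to guarantee data reliability.

HDFS achieves high reliability by making several copies of the data and storing them on different machines.

Before data is stored, it is split into blocks, and each block is copied to several machines in the cluster. If one machine suffers a hardware or network failure, the data can still be read from the other machines.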
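The arithmetic behind block splitting and replication is simple, and this small sketch works through it. It assumes the default block size of 128 MB (`dfs.blocksize` in Hadoop 2.x and later; older 0.20/1.x releases defaulted to 64 MB). The function names are hypothetical. HDFS does not pad the final block, so a file's last block occupies only its actual size.

```python
MB = 1024 * 1024


def split_into_blocks(file_size: int, block_size: int = 128 * MB) -> list:
    """Sizes of the blocks a file occupies; the last block is not padded to full size."""
    if file_size < 0:
        raise ValueError("file_size must be non-negative")
    full, rest = divmod(file_size, block_size)
    return [block_size] * full + ([rest] if rest else [])


def raw_bytes_stored(file_size: int, replication: int = 3) -> int:
    """Bytes consumed across the cluster when every block is stored `replication` times."""
    return file_size * replication
```

A 300 MB file therefore becomes blocks of 128 MB, 128 MB, and 44 MB. With the default replication factor of 3, those blocks consume 900 MB of raw cluster storage.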

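Replicas are written through a pipeline. The client sends each block to a first DataNode, which forwards it to a second DataNode, which in turn forwards it to a third.

Once written, the replicas are equivalent copies, and any of them can serve reads.

Data moves between replicas when HDFS creates a file and again when a DataNode's data is recovered after a loss.

This strategy keeps HDFS data from being lost when a node fails, which improves reliability and stability.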

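Block verification

To keep data reliable, HDFS periodically checks the blocks stored in the file system. A corrupt replica is reported and then replaced by copying a healthy replica.

In HDFS, each block is divided into fixed-size chunks, and each chunk has its own checksum.

When a block is read, HDFS recomputes the checksums and compares them with the stored ones, which verifies data integrity.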
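The next sketch illustrates per-chunk checksums. HDFS computes a checksum for every `dfs.bytes-per-checksum` bytes (512 by default) and uses CRC32C by default. Python's standard library has no CRC32C, so this sketch uses `zlib.crc32` (plain CRC-32) in its place. The function names are hypothetical. A mismatch pinpoints the corrupted chunk, so a reader knows it must fetch that block from another replica.

```python
import zlib

BYTES_PER_CHECKSUM = 512  # mirrors the HDFS default for dfs.bytes-per-checksum


def chunk_checksums(data: bytes, chunk: int = BYTES_PER_CHECKSUM) -> list:
    # zlib.crc32 is plain CRC-32; HDFS defaults to CRC32C, so this is only a stand-in
    return [zlib.crc32(data[i:i + chunk]) for i in range(0, len(data), chunk)]


def verify(data: bytes, stored: list, chunk: int = BYTES_PER_CHECKSUM) -> list:
    """Return the indexes of chunks whose checksum does not match (empty list = intact)."""
    actual = chunk_checksums(data, chunk)
    if len(actual) != len(stored):
        raise ValueError("chunk count mismatch")
    return [i for i, (a, s) in enumerate(zip(actual, stored)) if a != s]
```

For example, flipping one byte at offset 600 of a 2048-byte block makes `verify` report chunk 1, the chunk that covers bytes 512 to 1023.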

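HDFS also provides a striped layout (Stripe), which is used together with erasure coding.

The striped layout splits data into small cells and distributes them in a fixed pattern across several blocks on different DataNodes. This balances the data distribution and lets reads proceed in parallel, which improves access efficiency.

A striped file can be read only when enough of its blocks are available to reconstruct the data.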

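NN Checkpoint and QJM

NameNode checkpoints (NN Checkpoint) and the Quorum Journal Manager (QJM) are also important HDFS reliability measures. A checkpoint periodically merges the edit log into a new FSImage. QJM stores the edit log on a quorum of JournalNodes, so that a standby NameNode can take over with up-to-date metadata.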

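Data Storage Solutions

Data storage means keeping data on storage media so that it can be accessed and used when needed.

For most enterprises, choosing a storage solution that fits their business and budget is essential.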

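Common data storage solutions include the following.

1. Traditional databases: relational databases such as Oracle and MySQL are among the most common storage solutions.

They store data in tables and support complex queries and transactions.

They are reliable and stable, but their capacity for large-scale data processing is limited.

2. Distributed file systems: distributed file systems such as Hadoop HDFS, and object stores such as Amazon S3, are suited to storing and processing massive amounts of data.

They spread data across many nodes and offer high reliability and scalability.

They suit enterprises that need to process large data sets.

3. NoSQL databases: NoSQL databases (such as MongoDB and Cassandra) are another popular option.

Unlike relational databases, they store data as key-value pairs, documents, or column families.

NoSQL databases scale well and suit workloads that read and write large volumes of data at high speed.

4. Hot/cold data separation: storage can also be organized by how frequently data is accessed.

Frequently used hot data is stored on high-performance media (such as SSDs), and rarely used cold data is stored on low-cost media (such as cloud storage).

This improves processing and storage efficiency and reduces cost.

5. Cloud storage: data is stored on a cloud provider's servers.

Cloud storage is easy to use and removes the need to build and maintain a dedicated data center.

It also offers high reliability and elastic scaling, adjusting capacity dynamically to demand.

6. Storage virtualization: storage virtualization combines multiple physical storage resources into a single logical storage pool.

This improves storage utilization, simplifies management, and enables flexible allocation and migration.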
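Hot/cold separation (item 4) depends on a classification rule, and this sketch shows one simple form of it. It assumes a rule based on last-access time with a 30-day window. Both the window and the `split_hot_cold` name are hypothetical. In HDFS, the result of such a rule could drive storage policies such as HOT, WARM, and COLD, which are set with `hdfs storagepolicies -setStoragePolicy`.

```python
from datetime import datetime, timedelta


def split_hot_cold(last_access, now, hot_window=timedelta(days=30)):
    """Partition paths into (hot, cold) by how recently they were accessed.

    last_access maps a path to its last access time; the 30-day window is an
    arbitrary example threshold, not a recommendation.
    """
    hot, cold = [], []
    for path, ts in sorted(last_access.items()):
        (hot if now - ts <= hot_window else cold).append(path)
    return hot, cold
```

In practice the threshold would come from observed access patterns and the cost difference between tiers, not from a fixed constant.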

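In short, different enterprises have different needs and should choose a storage solution that fits their business and budget.

Each of these options can be selected and deployed according to actual needs: traditional databases, distributed file systems, NoSQL databases, hot/cold separation, cloud storage, or storage virtualization.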

Fixing the Oracle error "TNS:listener does not currently know of service requested in connect descriptor" (ORA-12514)

I spent several hours on Oracle configuration tonight and finally got connected. I also noticed that many people reinstall Oracle every time they reinstall the operating system. Here is how I handle this class of problem; it has never failed me.

My Oracle installation is in the app folder on drive F. First, locate listener.ora and tnsnames.ora in F:\app\Administrator\product\11.1.0\db_1\NETWORK\ADMIN and open them in Notepad.

Replace the original contents of listener.ora with the following. Note: use your own installation path.

    # listener.ora Network Configuration File: F:\app\Administrator\product\11.1.0\db_1\network\admin\listener.ora
    # Generated by Oracle configuration tools.
    SID_LIST_LISTENER =
      (SID_LIST =
        (SID_DESC =
          (SID_NAME = PLSExtProc)
          (ORACLE_HOME = F:\app\Administrator\product\11.1.0\db_1)
          (PROGRAM = extproc)
        )
        (SID_DESC =
          (GLOBAL_DBNAME = orcl)
          (ORACLE_HOME = F:\app\Administrator\product\11.1.0\db_1)
          (SID_NAME = orcl)
        )
      )
    LISTENER =
      (DESCRIPTION_LIST =
        (DESCRIPTION =
          (ADDRESS = (PROTOCOL = TCP)(HOST = localhost)(PORT = 1521))
        )
      )

Replace the original contents of tnsnames.ora with the following:

    # tnsnames.ora Network Configuration File: F:\app\Administrator\product\11.1.0\db_1\NETWORK\ADMIN\tnsnames.ora
    # Generated by Oracle configuration tools.
    ORCL =
      (DESCRIPTION =
        (ADDRESS = (PROTOCOL = TCP)(HOST = localhost)(PORT = 1521))
        (CONNECT_DATA =
          (SERVER = DEDICATED)
          (SERVICE_NAME = orcl)
        )
      )
    EXTPROC_CONNECTION_DATA =
      (DESCRIPTION =
        (ADDRESS_LIST =
          (ADDRESS = (PROTOCOL = IPC)(KEY = EXTPROC1))
        )
        (CONNECT_DATA =
          (SID = PLSExtProc)
          (PRESENTATION = RO)
        )
      )

After replacing both files, restart the Oracle database services.
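ORA-12514 usually means the listener has no registration for the service name the client requested. With the static configuration above, a quick sanity check compares every SERVICE_NAME in tnsnames.ora with the GLOBAL_DBNAME entries in listener.ora. This is a minimal sketch with hypothetical function names. It ignores dynamic registration by the running database instance, so its result is only a hint, not proof either way.

```python
import re


def _values(key: str, text: str) -> set:
    """All values assigned to `key` (e.g. SERVICE_NAME = orcl) in a .ora file."""
    return set(re.findall(rf"\b{key}\s*=\s*([\w.]+)", text, flags=re.IGNORECASE))


def unregistered_services(tnsnames_text: str, listener_text: str) -> set:
    """SERVICE_NAMEs requested in tnsnames.ora that listener.ora does not register statically."""
    return _values("SERVICE_NAME", tnsnames_text) - _values("GLOBAL_DBNAME", listener_text)
```

Applied to the two files above, the function returns an empty set, because `orcl` appears on both sides. If the client asked for a different name, that name would appear in the result.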

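Sqoop: importing MySQL/Oracle data into HBase, fixing various exceptions, and details of specifying fields with --columns

I recently used Sqoop to import data from Oracle into HBase and lost a lot of time to various problems. I could not find earlier reports of some of these exceptions online, and others may run into them too, so I am recording them here. Criticism is welcome; if you know a better approach, please reply so we can learn together.

Importing MySQL data with Sqoop went smoothly and was convenient. The trouble started with Oracle.

The --query option: pay attention to what follows WHERE. The $CONDITIONS placeholder is mandatory, and single and double quotes behave differently. If the --query argument is wrapped in double quotes, you must escape it as \$CONDITIONS.

    ./sqoop import --connect jdbc:oracle:thin:@192.168.8.130:1521:dcshdev \
        --username User_data2 --password yhdtest123qa \
        --query "select * from so_ext t where \$CONDITIONS " -m 4 \
        --hbase-create-table --hbase-table hso \
        --column-family so --hbase-row-key id --split-by id

Specifying fields with --columns also caused problems. Writing that many options on one line is impractical, and with many fields --columns is awkward to type on the command line. A shell script is far more convenient; use \ to continue lines in the script. One catch: the field names given to --columns must be in lowercase.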

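If you use uppercase, the import raises no error and reports success, and the HBase table is created. When you scan the table, however, it contains no data, which is maddening.

    --columns id,order_id,order_code

Separate the fields with commas and no spaces. Sqoop does not strip spaces, so if the argument contains any, the fields no longer match the Oracle table's columns. The job shows no error and even reports rows as imported, but a scan returns 0 rows.

There is one more difference between importing from MySQL and from Oracle. MySQL tables import successfully with only a few options, while Oracle needs many more. For example, the --split-by option is unnecessary for MySQL, but an Oracle import without it fails. Try it and see.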
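This sketch explains why `$CONDITIONS` and `--split-by` matter together. In a free-form `--query` import, Sqoop first determines the range of the `--split-by` column. It then gives each of the `-m` mappers its own slice of that range by replacing `$CONDITIONS` with a range predicate. Inside double quotes, the `\$` keeps the shell from expanding the placeholder before Sqoop sees it. The sketch splits the range evenly; Sqoop's own splitter computes boundaries somewhat differently. It also includes a helper that normalizes a `--columns` value according to the author's advice (lowercase, comma-separated, no spaces). All function names are hypothetical.

```python
def integer_splits(lo: int, hi: int, num_mappers: int) -> list:
    """Divide the inclusive range [lo, hi] into at most num_mappers contiguous ranges."""
    if hi < lo:
        return []
    size = hi - lo + 1
    n = max(1, min(num_mappers, size))
    step, extra = divmod(size, n)
    ranges, start = [], lo
    for i in range(n):
        end = start + step - 1 + (1 if i < extra else 0)
        ranges.append((start, end))
        start = end + 1
    return ranges


def mapper_queries(query: str, split_by: str, lo: int, hi: int, num_mappers: int) -> list:
    """Substitute $CONDITIONS with one range predicate per mapper."""
    if "$CONDITIONS" not in query:
        raise ValueError("a --query import must contain $CONDITIONS")
    return [query.replace("$CONDITIONS", f"( {split_by} >= {a} AND {split_by} <= {b} )")
            for a, b in integer_splits(lo, hi, num_mappers)]


def columns_arg(columns) -> str:
    """Build a --columns value following the author's advice: lower case, commas, no spaces."""
    if isinstance(columns, str):
        columns = columns.split(",")
    return ",".join(c.strip().lower() for c in columns if c.strip())
```

For ids 1 to 10 and `-m 4`, the four mappers run `select * from so_ext t where ( id >= 1 AND id <= 3 )`, and so on through `( id >= 9 AND id <= 10 )`. Without the placeholder, there is nowhere to insert these predicates. Likewise, `columns_arg("ID, ORDER_ID ,order_code")` yields `id,order_id,order_code`.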

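HDFS EC policies

Outline: 1. HDFS overview; 2. Introduction to EC policies; 3. Advantages of HDFS with EC; 4. Steps to implement HDFS EC policies; 5. Challenges and solutions; 6. Summary and outlook

1. HDFS overview

HDFS (Hadoop Distributed File System) is a core component of the Hadoop ecosystem. It provides a highly reliable, highly scalable distributed file system for big data processing.

HDFS uses a master/slave architecture made up of one NameNode and multiple DataNodes.

The NameNode manages the file system namespace and metadata, and the DataNodes store the actual blocks.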

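2. Introduction to EC policies

Erasure coding (EC) is a data protection technique. It encodes data so that the data can still be recovered after several blocks are lost.

In HDFS, an EC policy splits data into multiple data blocks and encodes them to produce parity blocks.

When some blocks are lost, the missing data is recovered by decoding the remaining blocks.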
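The encode/decode idea can be shown with the simplest possible erasure code. HDFS's built-in policies mainly use Reed-Solomon codes (for example RS-6-3-1024k), but the built-in set also includes an XOR-2-1-1024k policy. This sketch uses a single XOR parity cell, which can rebuild only one lost cell. It illustrates the principle and is not HDFS's Reed-Solomon implementation. The function names are hypothetical.

```python
from typing import List, Optional


def xor_cells(cells: List[bytes]) -> bytes:
    """Byte-wise XOR of equally sized cells."""
    out = bytearray(len(cells[0]))
    for cell in cells:
        if len(cell) != len(out):
            raise ValueError("all cells must have the same length")
        for i, b in enumerate(cell):
            out[i] ^= b
    return bytes(out)


def encode(data_cells: List[bytes]) -> bytes:
    """One parity cell for k data cells (XOR, in the spirit of XOR-2-1)."""
    return xor_cells(data_cells)


def recover(cells: List[Optional[bytes]], parity: bytes) -> List[bytes]:
    """Rebuild at most one missing data cell (marked None) from the survivors and the parity."""
    missing = [i for i, c in enumerate(cells) if c is None]
    if len(missing) > 1:
        raise ValueError("XOR parity can rebuild only one lost cell")
    rebuilt = list(cells)
    if missing:
        survivors = [c for c in cells if c is not None]
        rebuilt[missing[0]] = xor_cells(survivors + [parity])
    return rebuilt
```

Recovery works because XOR is its own inverse. The parity equals d0 ^ d1 ^ d2, so d0 ^ d2 ^ parity gives back d1. Reed-Solomon generalizes this idea to tolerate several lost cells per stripe.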

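3. Advantages of HDFS with EC

1. Higher data reliability: EC can recover data even after several blocks are lost.

2. Better storage utilization: compared with traditional replication, EC achieves the same or better data protection with less storage space.

3. Lower cost: EC can reduce the number of DataNodes required, which lowers hardware and operations costs.

4. Simpler operations: EC simplifies operational work and avoids complex problems caused by lost replicas.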
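The storage advantage in point 2 can be quantified with a short calculation. The sketch compares extra storage (as a fraction of the raw data) and the number of lost blocks each scheme tolerates, for 3-way replication and two Reed-Solomon layouts whose names match HDFS built-in policies. The function names are hypothetical.

```python
from fractions import Fraction


def replication_profile(replicas: int):
    """(storage overhead as a fraction of the data, lost copies tolerated) for n-way replication."""
    return Fraction(replicas - 1), replicas - 1


def reed_solomon_profile(data_units: int, parity_units: int):
    """(storage overhead, lost blocks tolerated per block group) for an RS(data, parity) code."""
    return Fraction(parity_units, data_units), parity_units


for name, (overhead, tolerated) in {
    "3x replication": replication_profile(3),
    "RS-6-3": reed_solomon_profile(6, 3),
    "RS-10-4": reed_solomon_profile(10, 4),
}.items():
    print(f"{name:15} overhead {float(overhead):.0%}  tolerates {tolerated} lost blocks")
```

Three-way replication costs 200% extra storage and survives 2 lost copies. RS-6-3 costs 50% and survives any 3 lost blocks in a group of 9. RS-10-4 costs 40% and survives 4 lost blocks.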

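4. Steps to implement HDFS EC policies

1. Configure the NameNode and DataNodes: set the parameters the EC policy needs, such as the coding algorithm and the number of data blocks. In Hadoop 3, a policy is enabled and attached to a directory with the hdfs ec command, for example hdfs ec -enablePolicy -policy RS-6-3-1024k and hdfs ec -setPolicy -path /data -policy RS-6-3-1024k.

2. Create files: create the files to be protected in HDFS and set the appropriate permissions. Files written under a directory inherit that directory's EC policy.

3. Data striping: the file is split into data blocks, which are encoded according to the EC policy. In HDFS, the writing client performs the striping and encoding; the NameNode allocates the block groups and their locations.

4. Data storage: DataNodes store the encoded blocks locally and periodically report block status to the NameNode.

5. Data access: the client obtains block locations from the NameNode and reads the blocks from the DataNodes. When blocks are missing, the client decodes the data according to the EC policy.

5. Challenges and solutions

1. Performance: EC adds encoding and decoding work to writes and reads, which can affect performance.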

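3.7 Exercises

I. Multiple choice

1. B 2. C 3. B 4. D 5. B 6. C 7. D 8. D

II. Fill in the blanks

1. When reading a file from HDFS, the client starts reading data by calling the ___read()___ method on the input stream. When writing a file, the client starts writing data by calling the ___write()___ method on the output stream.

2. The metadata of all HDFS files is kept in the NameNode's ___memory___ (disk/memory). To relieve this bottleneck, HDFS introduced the ___Federation___ mechanism.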

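III. Short-answer questions

1. Describe the Hadoop architecture with an example.

Hadoop is a framework made up of a set of software libraries.

These libraries, also called functional modules, each handle part of Hadoop's functionality. The most important are Common, HDFS, and YARN.

Common provides remote procedure calls (RPC) and serialization, HDFS handles data storage, and YARN handles unified resource scheduling and management.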

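2. What is the replica placement policy in HDFS?

The default replication factor in HDFS is 3, which suits most cases.

The placement policy puts the first replica on a node in the local rack, the second on another node in the same rack, and the third on a node in a different rack.

This reduces inter-rack data transfer and therefore improves write efficiency. The copy on another rack still protects against losing a whole rack.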

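3. What are the functions of the NameNode and the DataNode?

The NameNode (metadata node) is the manager. A Hadoop cluster has only one NameNode (one active NameNode in an HA setup). It is software that usually runs on a dedicated machine in the HDFS instance.

The NameNode is mainly responsible for managing the HDFS file system, specifically namespace management and file block management.

The NameNode determines how a file's blocks are mapped to replicas on DataNodes.

For the common case of three replicas, the first replicas are stored on different nodes in the same rack, and the last on a node in a different rack.

The NameNode is the brain of HDFS. It maintains the directory tree of the whole file system and all the files and directories in it. This information is persisted locally in two kinds of files. The first is the namespace image, also called the file system image (FSImage). It is a complete snapshot of the HDFS metadata, and by default the NameNode loads the latest one each time it starts. The second is the edit log, which records the changes made to the namespace since that image was written.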
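How the FSImage and the edit log work together can be sketched in a few lines. At startup the NameNode loads the snapshot and replays the edits. A checkpoint, performed by the Secondary NameNode (or by the Standby NameNode in an HA setup), merges the edits into a new FSImage so that the log can start empty. Real FSImage and edit log files use Hadoop's own binary formats. In this sketch, the namespace is a dict from path to metadata, and each edit is a tuple. These representations and the function names are hypothetical.

```python
import copy


def replay(fsimage: dict, edit_log: list) -> dict:
    """Rebuild the namespace: load the FSImage snapshot, then apply edits in order."""
    ns = copy.deepcopy(fsimage)  # the snapshot itself stays untouched
    for op, path, *args in edit_log:
        if op == "mkdir":
            ns[path] = {"type": "dir"}
        elif op == "create":
            ns[path] = {"type": "file", "blocks": list(args)}
        elif op == "delete":  # removes the path and everything beneath it
            prefix = path.rstrip("/") + "/"
            for p in [p for p in ns if p == path or p.startswith(prefix)]:
                del ns[p]
        elif op == "rename":  # simplified: moves a single entry only
            ns[args[0]] = ns.pop(path)
        else:
            raise ValueError(f"unknown edit: {op}")
    return ns


def checkpoint(fsimage: dict, edit_log: list):
    """Merge the edit log into a new FSImage and start a fresh, empty edit log."""
    return replay(fsimage, edit_log), []
```

Checkpointing keeps startup fast: without it, the edit log keeps growing, and every restart must replay all of it.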

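How to Integrate Hadoop with Oracle

By SRC Zhang Xudong

Many vertical industries are paying attention to the huge volumes of data kept in file systems.

This data usually contains a great deal of irrelevant detail, along with some valuable information that can be used for trend analysis or to enrich other data.

Although this data is stored outside the database, some customers want to combine it with the data in their databases to extract information that is valuable to business users.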

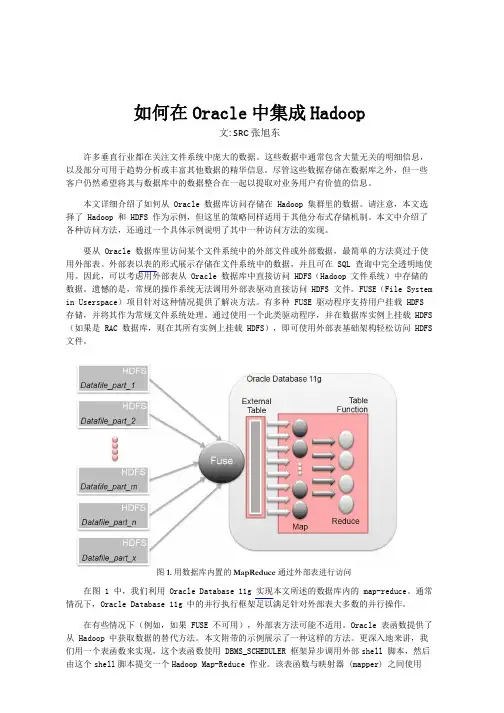

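This article explains in detail how to access data stored in a Hadoop cluster from an Oracle database.

Note that it uses Hadoop and HDFS as the example, but the strategies apply equally to other distributed storage systems.

It presents several access methods and walks through a concrete implementation of one of them.

The simplest way to access external files or external data in a file system from an Oracle database is to use external tables.

An external table presents data stored in a file system as a table, and it can be used completely transparently in SQL queries.

It is therefore natural to consider external tables for accessing data stored in HDFS (the Hadoop file system) directly from an Oracle database.

Unfortunately, HDFS is not a regular operating-system file system, so the external table driver cannot read HDFS files directly.

The FUSE (File System in Userspace) project offers a way around this.

Several FUSE drivers let users mount HDFS storage and treat it as a regular file system.

Use one of these drivers to mount HDFS on the database instance (or on every instance, for a RAC database). HDFS files can then be accessed easily through the external table infrastructure.

Figure 1. Access through external tables using in-database MapReduce

In Figure 1, we use Oracle Database 11g to implement the in-database map-reduce described in this article.

In general, the parallel execution framework in Oracle Database 11g is sufficient for most parallel operations on external tables.

In some cases (for example, when FUSE is not available), the external table approach may not be applicable.

Oracle table functions offer an alternative way to fetch data from Hadoop.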

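Solving Client/Server Connection Problems in Oracle

Common problems when an Oracle client connects to the server (reposted)

To troubleshoot a client/server connection problem, first check that the client configuration is correct. It must match the listener configuration on the database server. Then work from the error message.

Several common connection problems are listed below.

1. ORA-*****: TNS: no listener

The listener on the server has not been started. Also check that the IP address and port entered on the client are correct.

Start the listener:

    $ lsnrctl start

or

    C:\> lsnrctl start

2. ORA-*****: TNS: listener could not start a dedicated server process

On Windows, this means the Oracle instance service has not been started.

Start the instance service:

    C:\> oradim -startup -sid myoracle

3. ORA-*****: TNS: operation timed out

This problem has many causes, but they are mostly network-related.

First, check whether the client and the server can reach each other over the network. If they can, check whether a firewall on either side is blocking the connection.

4. ORA-*****: TNS: could not resolve service name

Check that the service name you entered matches the configured service name.

Also check the generated local naming file (on Windows, for example, D:\oracle\ora92\network\admin\tnsnames.ora; on Linux/Unix, $ORACLE_HOME/network/admin/tnsnames.ora). The first line of each service entry must not begin with a space.

5. ORA-*****: TNS: listener could not resolve the *****_NAME given in the connect descriptor

Open Net Manager, select the service name, and check that the service name in the service identification field is correct.

It must match the global database name configured in the server's listener.

6. On Windows, starting the listener service reports that the path cannot be found

Starting the listener from the command line or from the Services window reports that the path cannot be found, or the listener service starts abnormally.

Open the registry and go to HKEY_LOCAL_*****/SYSTEM/CurrentControlSet/Services/OracleOraHome92TNSListener. Check whether the ImagePath string value exists. If it does not, set it to D:\oracle\ora92\BIN\*****, adjusted for your own installation path.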
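The rule from item 4 (no leading space before an entry's first line) is easy to check mechanically. This rough sketch tracks parenthesis depth so that only top-level lines are examined, and it treats `#` as the start of a comment. It is not a full tnsnames.ora parser, and `entries_with_leading_space` is a hypothetical helper.

```python
import re
from typing import List, Tuple

# A top-level entry looks like "ALIAS =" at the start of a line, possibly indented
ENTRY_START = re.compile(r"^(\s*)([A-Za-z][\w.]*)\s*=")


def entries_with_leading_space(tnsnames_text: str) -> List[Tuple[int, str]]:
    """Return (line number, alias) for top-level entries whose first line is indented."""
    problems = []
    depth = 0  # parenthesis nesting level; 0 means we are between entries
    for lineno, line in enumerate(tnsnames_text.splitlines(), start=1):
        code = line.split("#", 1)[0]  # drop comments
        if depth == 0:
            m = ENTRY_START.match(code)
            if m and m.group(1):
                problems.append((lineno, m.group(2)))
        depth += code.count("(") - code.count(")")
    return problems
```

An entry written as ` TEST =` (one leading space) is reported with its line number. A correctly written `ORCL =` entry is not reported, and neither are its indented, parenthesized continuation lines.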

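Oracle Database Troubleshooting Solutions

Introduction: Oracle Database is one of the most widely used enterprise databases, but failures are inevitable in day-to-day use.

This article presents solutions to some common Oracle database problems to help readers deal with database failures.

1. Database connection problems

1.1 Check the network connection: make sure the network between the database server and the client is working.

You can test connectivity with the ping command or other network tools.

1.2 Check the listener status: the listener is the service that receives database connection requests. If it is not running or is misbehaving, connections may fail.

Use the lsnrctl command to check the listener status (for example, lsnrctl status), and start or restart the listener as needed.

1.3 Check the firewall settings: a firewall may block connection requests on specific ports, which causes connections to fail.

Make sure the firewall allows connection requests on the required ports.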
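A successful ping proves only that the host answers ICMP. A firewall can still block the listener port. The most direct test of steps 1.1 and 1.3 is to open a TCP connection to the port itself; Oracle's tnsping utility performs a similar check at the Oracle Net level. This minimal sketch assumes the default listener port 1521, and `listener_reachable` is a hypothetical helper.

```python
import socket


def listener_reachable(host: str, port: int = 1521, timeout: float = 3.0) -> bool:
    """True if a TCP connection to host:port can be opened within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # connection refused, host unreachable, or timed out
        return False
```

If ping succeeds but `listener_reachable("db-server")` returns False, suspect a firewall rule or a listener that is not running. If both fail, the problem is more likely the network itself.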

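2. Backup and recovery problems

2.1 Back up the database regularly: regular backups are an important way to prevent data loss.

You can back up the database with RMAN (Recovery Manager), using full, incremental, or archived-log backups.

2.2 Restore the database: when the database fails, you can restore it from the backup files.

Use RMAN to recover the database, and choose a recovery strategy that matches the backup type.

2.3 Monitor the recovery: watch the recovery process closely to make sure it proceeds smoothly.

You can track recovery progress by querying views such as V$SESSION_LONGOPS or V$RMAN_STATUS.

3. Performance problems

3.1 Optimize SQL queries: SQL statements are one of the key factors in database performance.

Optimizing SQL statements improves query efficiency.

Use EXPLAIN PLAN to analyze a statement's execution plan, then add indexes or rewrite the query as needed.

3.2 Tune database parameters: parameter settings have a large effect on database performance.

You can improve performance by adjusting parameters such as buffer sizes and the number of concurrent connections.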

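HDFS Security Configuration Baseline

Configuring a security baseline for HDFS (the Hadoop Distributed File System) is one of the key steps in securing a Hadoop cluster.

Common measures in an HDFS security baseline include the following.

Authentication and authorization: use Kerberos to authenticate users so that only authorized users can access HDFS.

Implement role-based access control (RBAC) to restrict users' access to files and directories.

Use access control lists (ACLs) for finer-grained permissions on files and directories.

Data encryption: encrypt HDFS data in transit, for example by enabling encrypted block data transfer (dfs.encrypt.data.transfer) and RPC privacy protection (hadoop.rpc.protection=privacy).

Encrypt HDFS data at rest, for example with HDFS transparent encryption (Hadoop Encryption Zones) or third-party encryption tools, to protect the data stored on disk.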

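Logging and auditing: enable HDFS audit logging to record details of user access and operations for auditing and security analysis.

Review and analyze the audit logs regularly so that abnormal behavior and security incidents are detected early.

Network security: protect network access to HDFS nodes with firewalls and network isolation.

Use secure protocols (such as SSL/TLS) to protect communication between HDFS nodes.

Operational security: use secure Hadoop configuration files and restrict access to them.

Keep Hadoop and HDFS up to date, and apply security patches and updates promptly.

Monitoring and alerting: set up real-time monitoring of the HDFS cluster to detect anomalies and security incidents promptly.

Configure an alerting system so that critical security events and abnormal behavior trigger timely alerts and responses.

Disaster recovery and backup: implement HDFS backup and disaster recovery plans to keep data reliable and recoverable.

Test the backup and recovery procedures regularly to confirm that they work.

Training and awareness: train Hadoop administrators and users to improve their awareness and understanding of HDFS security.

Run regular security and incident-response drills to strengthen the team's ability to respond.

In summary, an HDFS security baseline covers authentication and authorization, data encryption, logging and auditing, network security, operational security, monitoring and alerting, disaster recovery and backup, and training and awareness.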

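Oracle Cluster Failure Handling: A Case Study

1. Overview

Oracle databases play an important role in enterprise applications. To keep data secure and stable, many enterprises deploy Oracle databases as clusters.

Even a clustered deployment, however, cannot prevent failures completely.

In day-to-day operations, handling cluster failures is one of the challenges every database administrator must face.

Using a real case, this article discusses common methods for handling failures in an Oracle cluster.

2. Symptoms

The case occurred on the Oracle database cluster of an e-commerce company.

During a database backup in the early hours of one morning, the database on one node suddenly crashed and could no longer serve requests.

Some business operations were affected, so the failure had to be fixed and service restored as quickly as possible.

3. Investigating the cause

1. Check the logs: we logged in to the other, healthy nodes in the cluster and examined the logs.

The logs contained alerts about storage and network anomalies.

2. Check the storage: we checked the state of the storage with the storage management tools.

Some disks on the storage device had failed, which was probably one of the causes of the crash.

3. Check the network: we also checked the network connections between the cluster nodes and found a problem with the connection between one node and the storage.

4. Resolution

1. Repair the storage: we immediately contacted the storage vendor for emergency maintenance.

With the vendor's help, we repaired the disk faults and returned the storage to normal.

2. Repair the network connection: we debugged and repaired the network connection between the node and the storage.

We eventually found the cause of the network problem and fixed it.

3. Recover the database: after these steps, we restarted the database instance on the failed node and ran data integrity checks and recovery.

The failed node recovered, rejoined the cluster, and resumed normal service.

5. Lessons learned

We drew the following lessons from this incident:

1. Check storage health regularly, and eliminate potential risks early.

2. Monitor the network connections between cluster nodes, and detect and fix problems promptly.

3. When handling a cluster failure, investigate methodically, one step at a time, rather than rushing.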

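Lakehouse Big Data Platform Solution

The platform integrates data at the bottom and hosts applications on top, turning data into data assets.

Platform overview

- Infrastructure: Alibaba Cloud, on-premises IDC, Huawei Cloud, China Telecom Cloud, Tencent Cloud, Azure, AWS, JD Cloud.
- Engine layer: S-EMR, Alibaba Cloud EMR, AWS EMR, Huawei Cloud MRS, Transwarp TDH.
- Functional modules: data integration (data source management, offline and real-time synchronization), data development (data factory with standard modeling, metric management, parameter configuration, script/wizard modes, and user-defined functions; offline and real-time development), data operations (metric, tag, algorithm, and API operations with task monitoring and alerting; production and data-quality operations), data services (API center with authorization, invocation, and data subscription), data governance (data map, category management, metadata management with end-to-end lineage, lifecycle governance, data exploration, data standards, asset inventory), data security (masking and encryption), tag and algorithm factories, and a console (project, sub-account, role, workspace, and AccessKey management).

2. Problems with the traditional data warehouse

- Technical architecture: low efficiency and a high barrier to entry.
- Development efficiency: reliance on offline T+1 report exports, with no real-time capability.
- Platform management: metadata management does not connect real-time and offline data.

The proposed approach unifies batch and streaming in a lakehouse:

- Unify the development process on SQL.
- Introduce Hudi to speed up wide-table production.
- Build a real-time data warehouse on Flink SQL.
- Build the warehouse as a platform with a unified specification system. This system uses the OneData modeling methodology: business domains, subject domains, atomic metrics (business process plus measure), derived metrics, modifiers, statistical granularity, and statistical period. The warehouse is layered into ODS, DWD (finest-grain transaction facts), DWS (aggregated summary facts), ADS (application layer), and DIM (dimension tables that physicalize logical dimensions), supported by a visual modeling tool and unified metadata.

Lambda architecture: batch processing and stream processing run side by side, and a batch view and an incremental view serve ad hoc queries and APIs.

- Advantages: data is immutable, and it can be recomputed.
- Disadvantages: double computation and double serving.

Kappa architecture: all data flows through Kafka (ODS, DWD, DWS) and is processed by streaming jobs.

- Advantages: a simple architecture with unified production; one set of logic that is easy to maintain.
- Disadvantages: limited general applicability; backfilling large volumes of history is expensive and puts pressure on production; streaming results can be inaccurate and ultimately need reconciliation.

Technology stack: sources (Oracle, MySQL, PostgreSQL via OGG, IoT devices via MQTT) feed Kafka. Flink processes the data, and the results land in ClickHouse, Kudu, or StarRocks (formerly DorisDB). Presto, Impala, and a data API serve reports, data consumers, and alerts.

3. Introducing the Hudi data lake (lakehouse architecture)

Hudi adds incremental real-time updates and time travel. Data arrives from three kinds of sources: structured (MySQL, Oracle, SQL Server, PostgreSQL, Redis), semi-structured (MongoDB, JSON, XML, CSV, Kafka, ORC, Parquet), and unstructured (audio, video, documents, email). It is ingested through DataX (batch), Flink CDC (streaming), RESTful APIs, or direct file upload, and Hudi stores it on S3, OSS, COS, or HDFS. Spark, Hive, Flink, Presto, and Impala then process it for machine learning, BI reports, ad hoc queries, recommendations, face recognition, and dashboards. In the layered warehouse, Hudi on Flink writes each layer. Its write path runs from the Kafka source through a source generator, a binlog record with instant time, a file indexer, and a write-process operator to a commit sink, coordinated by checkpoints.

Core product functions:

- Real-time ingestion with automatic access configuration.
- Real-time synchronization, development, and operations: configure the source table and the target Kafka topic, define source, result, and dimension tables, then publish to operations with start/stop controls, alert rules, and monitoring scope.
- Real-time metadata updates: CDC source, schema transform (DDL/DML), binlog Kafka sink (Avro), binlog Kafka source, and Hudi sink, with checkpointing and metadata reporting.
- Data asset management.

Performance tests (each record has 20 fields and is 228 bytes):

| Scenario | Data volume | Pipeline | Result |
|---|---|---|---|
| Kafka produce/consume | 400,000 | MySQL source to Kafka | 46 s (about 8,700 QPS); consumption 4.6 s (about 87,000 QPS) |
| Real-time compute, Oracle to MySQL | 400,000 | New Oracle rows written to the target MySQL database (3 processes, 3 GB memory) | 3,778 QPS; 3,715 QPS at 400,000 × 5 |
| Real-time compute, MySQL to Kudu | 400,000 | New MySQL rows processed by Flink into a Kudu table | 5,250 QPS |

Conclusion: real-time computation can process about 15 million records per hour from mainstream databases with low resource usage.

Planned features: enhanced SQL capabilities; fine-grained resource management (automatic scaling, fine-grained scheduling, Flink on Kubernetes).

4. Problem: no transaction support. Traditional big data solutions do not support transactions, so readers may see partially written data, which leads to incorrect statistics.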

Oracle Data Synchronization Solutions


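Oracle Database, an industry-leading relational database management system, is widely used in enterprises' core business systems.

Data synchronization is a very important issue in day-to-day operations, especially when data must be synchronized in real time across multiple sites or databases.

Solving this requires a stable, reliable data synchronization solution.

First, Oracle itself provides several synchronization tools, such as GoldenGate and Data Guard.

These tools synchronize data efficiently between databases while keeping it consistent and complete.

GoldenGate synchronizes data between different databases in real time, while Data Guard provides reliable standby copies for backup and disaster recovery.

There are also third-party synchronization tools, such as DBSync and HVR, that integrate seamlessly with Oracle and offer more flexible, customizable synchronization.

These tools usually provide more features and configuration options to meet an enterprise's specific needs.

Besides dedicated tools, you can also use the data synchronization services offered by cloud platforms.

For example, Oracle Cloud, Amazon AWS, and Microsoft Azure all provide data synchronization services that help enterprises quickly set up stable, reliable synchronization.

In short, each enterprise can choose a solution for its Oracle synchronization needs based on its own circumstances.

Whether it uses Oracle's own tools, third-party synchronization software, or cloud synchronization services, it should weigh data volume, frequency, real-time requirements, and similar factors to keep synchronization efficient and stable.

The right synchronization solution brings higher productivity and better data security.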

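The HDFS Fencing Mechanism

HDFS (Hadoop Distributed File System) is an open-source distributed file system for storing and processing large data sets.

In a distributed environment, hardware failures, software bugs, or network problems can disrupt communication between nodes and cause data inconsistency or loss.

To address this, HDFS uses fencing to keep data correct and reliable. Fencing matters most in NameNode high availability (HA), where an active NameNode and a standby NameNode share the same namespace.

The main goal of fencing is to wall off a node that may be faulty so that the problem cannot spread to the rest of the system. In an HA pair, fencing prevents "split-brain", a state in which both NameNodes act as active and make conflicting changes.

When a failover happens, fencing cuts off the previously active NameNode. It can no longer write metadata or serve requests until the problem is fixed.

The rest of this section explains how HDFS fencing works and where it is applied.

1. How fencing works

Fencing works together with the HDFS failure detection and recovery mechanisms.

When a failure is detected, HDFS first tries a simple, graceful step. In an HA pair, the failover controller asks the old active NameNode to transition to standby.

If that does not work, HDFS triggers fencing to isolate the failed node, using the methods configured in dfs.ha.fencing.methods (for example sshfence, or a custom shell command).

The key to fencing is identifying the failed node reliably.

HDFS uses heartbeats to monitor node status.

Each DataNode periodically sends a heartbeat to the NameNode to show that it is alive and available.

When a DataNode misses heartbeats for longer than the timeout, the NameNode considers it failed and marks it as dead.

Once a node is marked dead, HDFS excludes it and sends it no further data requests.

Clients are no longer directed to that node for reads or writes.

This keeps reads and writes from being affected by the failed node.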
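In the NameNode HA case, the Quorum Journal Manager builds a form of fencing into the shared edit log. Each NameNode that becomes active obtains a new, higher epoch number from a majority of JournalNodes. The JournalNodes then reject edits stamped with an older epoch, so an old active NameNode that is still running cannot corrupt the log. This sketch models only that rule and leaves out segment recovery and many other details. The `JournalNode` and `NameNodeWriter` classes are hypothetical.

```python
class JournalNode:
    """Holds the shared edit log and the highest epoch it has promised to honor."""

    def __init__(self):
        self.promised_epoch = 0
        self.entries = []

    def new_epoch(self, epoch: int) -> bool:
        if epoch <= self.promised_epoch:
            return False
        self.promised_epoch = epoch
        return True

    def journal(self, epoch: int, entry: str) -> bool:
        if epoch < self.promised_epoch:
            return False  # the writer has been fenced off by a newer active NameNode
        self.entries.append(entry)
        return True


class NameNodeWriter:
    """A NameNode that writes edits to a quorum of JournalNodes."""

    def __init__(self, name: str, journals: list):
        self.name, self.journals, self.epoch = name, journals, 0

    def _majority(self, acks: int) -> bool:
        return acks > len(self.journals) // 2

    def become_active(self) -> None:
        proposed = max(j.promised_epoch for j in self.journals) + 1
        acks = sum(j.new_epoch(proposed) for j in self.journals)
        if not self._majority(acks):
            raise RuntimeError(f"{self.name}: no quorum, cannot become active")
        self.epoch = proposed

    def write(self, entry: str) -> bool:
        """An edit counts as committed only if a majority of JournalNodes accepted it."""
        acks = sum(j.journal(self.epoch, entry) for j in self.journals)
        return self._majority(acks)
```

Once a second NameNode becomes active with epoch 2, every write from the first NameNode (still on epoch 1) is rejected. This happens even if the first NameNode never learns that it lost the active role.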

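2. Where fencing is used

Fencing is mainly used in two scenarios.

2.1 Isolating and repairing failed nodes: when a node fails, fencing can isolate it quickly and keep it from harming the rest of the system.

While the node is isolated, administrators can repair it, for example by restarting it or replacing hardware.

Once the repair is complete, the node rejoins the system and resumes normal reads and writes.

2.2 Repairing corrupted data: hardware failures or software errors on a node can sometimes corrupt data.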

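Big Data Ingestion Solution

Overview: big data is now an important tool for business decisions. It lets enterprises analyze and forecast from many angles and make better-informed decisions.

To support big data analysis, an enterprise needs an efficient ingestion pipeline that keeps data accurate, complete, and timely.

This article describes a common big data ingestion solution that covers data collection, transmission, storage, and processing.

Data collection

Data collection is the first step of ingestion: gathering and consolidating data from various sources.

Common sources include internal enterprise systems, sensor data, and external data sources.

Collecting data from internal systems

Internal enterprise systems are an important source for big data analysis.

To bring internal data into scope, you can collect it in the following ways:

1. Log collection: for systems that produce logs, collect the log files, extract the key information, and store it in the big data platform.

2. Database synchronization: for database-backed systems, use the database's synchronization features to copy key data to the platform in real time or on a schedule.

3. APIs: if an internal system exposes APIs, call them to push data to the platform.

Collecting data from external sources

Besides internal systems, external sources are also an important source for big data analysis.

External data takes many forms, including public data sets, social media data, and sensor data.

1. Web crawling: for public data sets or web pages, collect data with crawlers.

A crawler program fetches data from target websites and saves it to the big data platform.

2. API calls: some external sources provide APIs that you can call to fetch data and store it on the platform.

Data transmission

After collection, the data must be transferred to the big data platform for storage and processing.

Data transmission should meet the following requirements:

1. Efficiency: transfers should be fast enough to deliver data to the platform promptly.

2. Security: encryption, authentication, and similar mechanisms should protect data in transit.

3. Stability: transfers should be stable, without data loss or failed transfers.

To meet these requirements, you can use the following transmission approaches:

1. Message queues: transferring data through a message queue provides efficient, stable, and secure transmission.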

Oracle has a dedicated solution for connecting to HDFS: [O]racle [D]irect [C]onnector for [H]adoop Distributed File System, or ODCH for short.

The package can be downloaded from Oracle's website: /technetwork/bdc/big-data-connectors/downloads/index.html. Choose the first item, Oracle SQL Connector for Hadoop Distributed File System Release 2.1.0. We use version 2.1 here. After downloading, unzip the package:

    [root@ora11g ~]# unzip oraosch-2.1.0.zip

Annoyingly, the archive contains another archive:

    [root@ora11g ~]# unzip orahdfs-2.1.0.zip -d /usr/local/
    [root@ora11g ~]# chown -R oracle:oinstall /usr/local/orahdfs-2.1.0

In the bin directory of the unpacked files, the hdfs_stream script needs two environment variables set by hand. Edit the file:

    [root@ora11g ~]# vi /usr/local/orahdfs-2.1.0/bin/hdfs_stream

Add these two lines:

    OSCH_HOME=/usr/local/orahdfs-2.1.0
    HADOOP_HOME=/usr/local/hadoop-0.20.2

Next, edit the grid user's environment:

    [root@ora11g ~]$ vi /home/grid/.bash_profile

Add the following:

    export DIRECTHDFS_HOME=/usr/local/orahdfs-2.1.0
    export OSCH_HOME=${DIRECTHDFS_HOME}
    export ORAHDFS_JAR=${DIRECTHDFS_HOME}/jlib/orahdfs.jar
    export HDFS_BIN_PATH=${DIRECTHDFS_HOME}/bin

Log in as the oracle user and create two directories, one for ODCH's operation logs and one for the location files:

    [oracle@ora11g ~]$ mkdir /data/ora11g/ODCH/{logs,extdir} -p
    [oracle@ora11g ~]$ chmod -R 777 /data/ora11g/ODCH/

Then log in to Oracle as SYSDBA and create three directory objects:

    SQL> create or replace directory ODCH_LOG_DIR as '/data/ora11g/ODCH/logs';
    grant read, write on directory ODCH_LOG_DIR to SCOTT;
    create or replace directory ODCH_DATA_DIR as '/data/ora11g/ODCH/extdir';
    grant read, write on directory ODCH_DATA_DIR to SCOTT;
    create or replace directory HDFS_BIN_PATH as '/usr/local/orahdfs-2.1.0/bin';
    grant read, write, execute on directory HDFS_BIN_PATH to SCOTT;

    Directory created.
    Grant succeeded.
    Directory created.
    Grant succeeded.
    Directory created.
    Grant succeeded.

HDFS_BIN_PATH is the directory that contains the hdfs_stream script. ODCH_DATA_DIR is the directory that holds the "location files".

A location file is a configuration file that contains the HDFS path and file name of the data, along with the file's encoding.

ODCH_LOG_DIR is the directory where Oracle stores the external table's log and bad files.

Create the external table. The LOCATION clause is only a placeholder for now; it is changed later:

    SQL> conn scott/tiger
    Connected.
    SQL> CREATE TABLE odch_ext_table
      ( ID NUMBER
      ,OWNER VARCHAR2(128)
      ,NAME VARCHAR2(128)
      ,MODIFIED DATE
      ,Val NUMBER
      ) ORGANIZATION EXTERNAL
      (TYPE oracle_loader
       DEFAULT DIRECTORY "ODCH_DATA_DIR"
       ACCESS PARAMETERS
       (
         records delimited by newline
         preprocessor HDFS_BIN_PATH:hdfs_stream
         badfile ODCH_LOG_DIR:'odch_ext_table%a_%p.bad'
         logfile ODCH_LOG_DIR:'odch_ext_table%a_%p.log'
         fields terminated by ',' OPTIONALLY ENCLOSED BY '"'
         missing field values are null
         (
           ID DECIMAL EXTERNAL,
           OWNER CHAR(200),
           NAME CHAR(200),
           MODIFIED CHAR DATE_FORMAT DATE MASK "YYYY-MM-DD HH24:MI:SS",
           Val DECIMAL EXTERNAL
         )
       )
       LOCATION ('odch/tmpdata.csv')
      ) PARALLEL REJECT LIMIT UNLIMITED;

    Table created.

Switch to the grid user, create a directory in HDFS, and upload the file to it:

    [grid@ora11g ~]$ hadoop dfs -mkdir odch
    [grid@ora11g ~]$ hadoop dfs -put tmpdata.csv odch/

We generated tmpdata.csv from all_objects with this SQL:

    select rownum,owner,object_name,created,data_object_id from all_objects

Then generate the location file with the ODCH jar:

    [grid@ora11g ~]$ hadoop jar \
    > ${ORAHDFS_JAR} oracle.hadoop.hdfs.exttab.ExternalTable \
    > -D oracle.hadoop.hdfs.exttab.tableName=odch_ext_table \
    > -D oracle.hadoop.hdfs.exttab.datasetPaths=odch \
    > -D oracle.hadoop.hdfs.exttab.datasetRegex=tmpdata.csv \
    > -D oracle.hadoop.hdfs.exttab.connection.url="jdbc:oracle:thin:@//192.168.30.244:1521/jssdb" \
    > -D er=SCOTT \
    > -publish
    DEPRECATED: The class oracle.hadoop.hdfs.exttab.ExternalTable is deprecated.
    It is replaced by oracle.hadoop.exttab.ExternalTable.

    Oracle SQL Connector for HDFS Release 2.1.0 - Production

    Copyright (c) 2011, 2013, Oracle and/or its affiliates. All rights reserved.

    [Enter Database Password:]
    The publish command succeeded.

    ALTER TABLE "SCOTT"."ODCH_EXT_TABLE"
    LOCATION
    (
      'osch-20130516031513-6000-1'
    );

    The following location files were created.

    osch-20130516031513-6000-1 contains 1 URI, 4685141 bytes

         4685141 hdfs://hdnode1:9000/user/grid/odch/tmpdata.csv

    The following location files were deleted.

    odch/tmpdata.csv not deleted. It was not created by OSCH.

Each -D option sets one parameter: tableName is the name of the external table; datasetPaths is the path of the source data in HDFS; datasetRegex is the data file name; connection.url is the Oracle database connection string; er is the database user name (scott).

The generated osch-20130516031513-6000-1 is the location file. It records where the target data file actually lives in HDFS, as its contents show:

    [root@ora11g ~]# more /data/ora11g/ODCH/extdir/osch-20130516031513-6000-1
    <?xml version="1.0" encoding="UTF-8" standalone="yes"?>
    <locationFile>
      <header>
        <version>1.0</version>
        <fileName>osch-20130516031513-6000-1</fileName>
        <createDate>2013-05-16T13:54:02</createDate>
        <publishDate>2013-05-16T03:15:13</publishDate>
        <productName>Oracle SQL Connector for HDFS Release 2.1.0 - Production</productName>
        <productVersion>2.1.0</productVersion>
      </header>
      <uri_list>
        <uri_list_item size="4685141" compressionCodec="">hdfs://hdnode1:9000/user/grid/odch/tmpdata.csv</uri_list_item>
      </uri_list>
    </locationFile>

As the output suggests, point the odch_ext_table external table at the new location file:

    SQL> ALTER TABLE "SCOTT"."ODCH_EXT_TABLE"
    LOCATION
    (
      'osch-20130516031513-6000-1'
    );

    Table altered.

    SQL> set line 150 pages 1000;
    SQL> col owner for a10
    SQL> col name for a20
    SQL> select * from odch_ext_table where rownum<10;

            ID OWNER      NAME                 MODIFIED            VAL
    ---------- ---------- -------------------- ------------ ----------
             1 SYS        ICOL$                15-MAY-13             2
             2 SYS        I_USER1              15-MAY-13            46
             3 SYS        CON$                 15-MAY-13            28
             4 SYS        UNDO$                15-MAY-13            15
             5 SYS        C_COBJ#              15-MAY-13            29
             6 SYS        I_OBJ#               15-MAY-13             3
             7 SYS        PROXY_ROLE_DATA$     15-MAY-13            25
             8 SYS        I_IND1               15-MAY-13            41
             9 SYS        I_CDEF2              15-MAY-13            54

    9 rows selected.

The data is read successfully. Done.
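The location file is plain XML, so it is easy to inspect programmatically, for example to confirm which HDFS files an external table will read. This minimal sketch uses Python's standard library. The element and attribute names are taken from the file shown above, and `location_uris` is a hypothetical helper.

```python
import xml.etree.ElementTree as ET


def location_uris(xml_text: str) -> list:
    """Return (HDFS URI, size in bytes) for each <uri_list_item> in a location file."""
    root = ET.fromstring(xml_text.encode("utf-8"))  # bytes, so the encoding declaration is honored
    return [((item.text or "").strip(), int(item.get("size", "0")))
            for item in root.iter("uri_list_item")]
```

For the file generated above, the helper returns a single entry: `hdfs://hdnode1:9000/user/grid/odch/tmpdata.csv` with a size of 4685141 bytes. This matches the "contains 1 URI, 4685141 bytes" line in the publish output.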