A GPU-Accelerated Algorithm for Compressive Sensing Based Image Super-Resolution*

Xifei Wu1, Hui Xiang1+, Peng Lu1

1School of Computer Science and Technology, Shandong University, Jinan, 250101, China

Abstract: This paper presents a parallel algorithm for super-resolution image reconstruction based on compressive sensing on the ATI Stream platform. To accelerate the program, we select the stages of the serial program to be sped up according to the execution time of each stage, set an appropriate parallel granularity to make full use of the GPU's computational horsepower, and make rational use of the different kinds of GPU memory. Finally, the results of the parallel algorithm are presented and analyzed. Compared with the serial algorithm, the parallel algorithm achieves a significant speedup.

Keywords: Compressive Sensing; Image Super-resolution; parallel computing; GPU

I. INTRODUCTION

Compressive sensing, also known as compressed sensing, is a technique motivated by recent results in sparse signal representation, which ensure that linear relationships among high-resolution signals can be precisely recovered from their low-dimensional projections. According to this theory, the burden of sampling the original signal is greatly relieved: the sampling rate is not limited by the Nyquist sampling theorem, and the separate step of compressing the original signal is omitted. However, recovering high-resolution signals from low-dimensional projections is a computationally demanding task for personal computers, and parallel computing can be used to address this problem.

Because the low-dimensional projections contain most of the information in the high-resolution signals, they can be used in most of the fields where the original signal is used, such as face recognition [1] and image classification [2]. In [3], the authors proposed a method of image super-resolution from the perspective of compressive sensing, reviewed previously proposed methods, and concluded that image super-resolution based on compressive sensing had the best performance.

Because this problem amounts to recovering high-resolution signals from low-dimensional projections, the procedure is complex and time-consuming for a serial program, so a parallel algorithm is both necessary and meaningful. In this paper, we present a parallel algorithm to solve the problem and compare it with the serial program; the results show that the parallel program achieves the same result in less time than the serial program.

*Supported by Key Project of National Natural Science Foundation of China under Grant No. 60633030; Foundation for Outstanding Young Scientist in Shandong Province under Grant No. BS2009DX017.

+Corresponding author. Phone: +86-531-86738912, Email: hxiang@…

Following the serial program, we divide the process into several stages. The stage that consumes the most time is considered first. We then analyze whether it is suitable for parallel computing: whether the same process is executed many times in the program, whether the executions depend on each other's data, and so on. A kernel function is then designed for the stage, which is executed by many threads once it is launched.

Several other studies have had some success with parallel implementations of image (signal) reconstruction based on compressive sensing.

M. Andrecut [4] implemented sparse signal recovery on a GPU using the MP (Matching Pursuit) algorithm, an iterative greedy method. The results showed a speedup of 31 times in single-precision computation and 21 times in double-precision computation. However, a high-resolution image reconstructed with the MP algorithm is not as good as one reconstructed with the algorithm used in this paper (Figure 5).

Alexandre Borghi [5] parallelized a simple compressive sensing problem. Using the Moreau-Yosida strategy and taking full advantage of parallel platform features (shared memory, vector units, multiple cores), the algorithm was tested on a multi-core CPU platform and on a GPU platform. The experimental results show that, in the multi-threaded case, the GPU version achieves differing degrees of acceleration.

The rest of this paper is organized as follows. Section Ⅱ introduces the algorithm of super-resolution image reconstruction based on compressive sensing. The main methods and steps of the parallel GPU accelerating program are presented in Section Ⅲ. In Section Ⅳ, the experimental results of the CPU and GPU programs are given and analyzed. Finally, conclusions are drawn in Section Ⅴ.

II. SUPER-RESOLUTION IMAGE RECONSTRUCTION BASED ON COMPRESSIVE SENSING

A. The theory of Compressive Sensing

The theory of compressive sensing was proposed by Candès [6], Romberg [7], Tao [8], Donoho [9], and others.

2011 Workshop on Digital Media and Digital Content Management

Candès proved that, as long as a signal is sparse in some orthogonal space, it can be sampled at a lower rate (M ≪ N) and still be reconstructed with high probability.

More specifically, suppose signal X has a sparse

representation α in some orthogonal basis (e.g., wavelet, Fourier) or tight frame (e.g., curvelet, Gabor) Ψ, X=Ψ × α,

α is a sparse representation of signal X, and Ψ is a sparse

basis of signal X; then find a speculation basis Φ: M × N

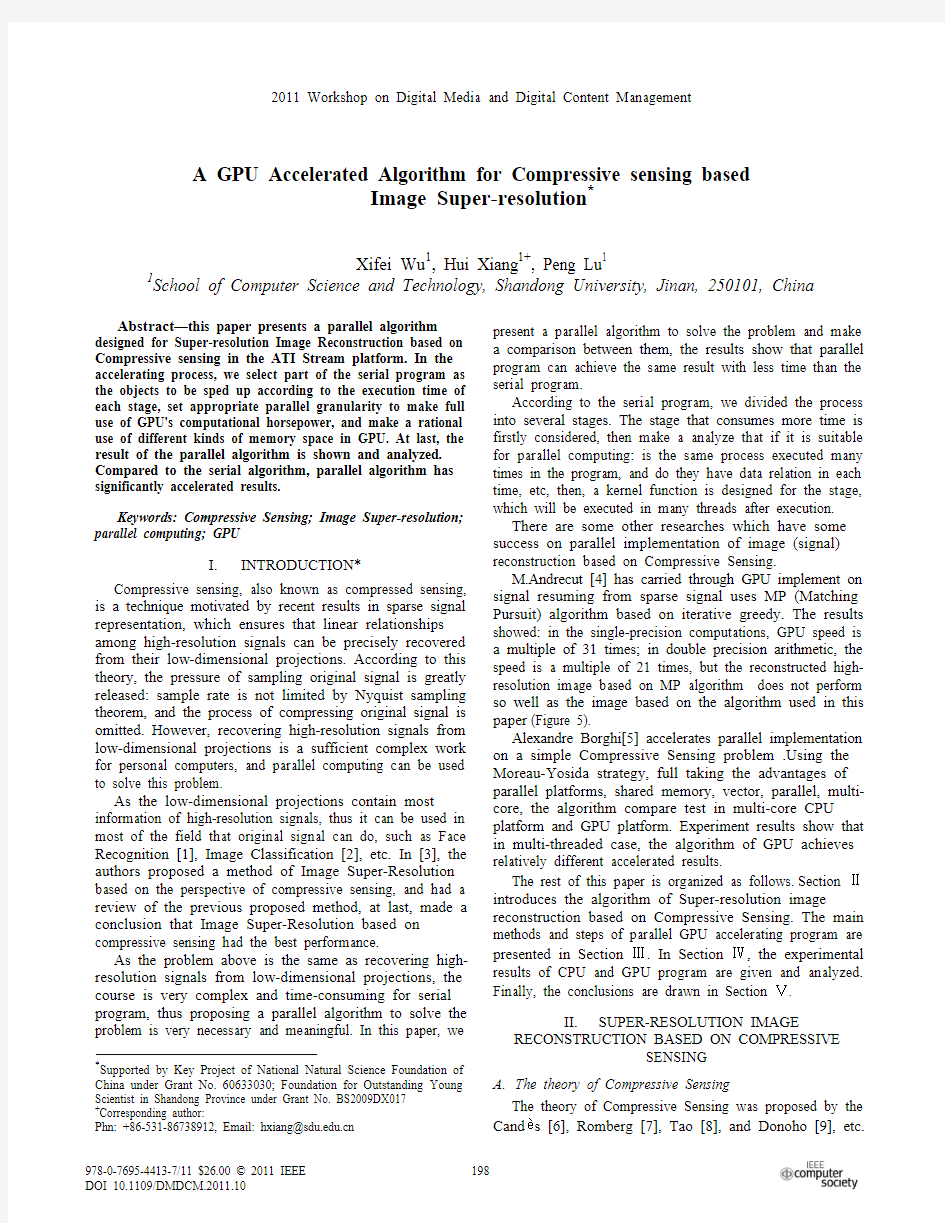

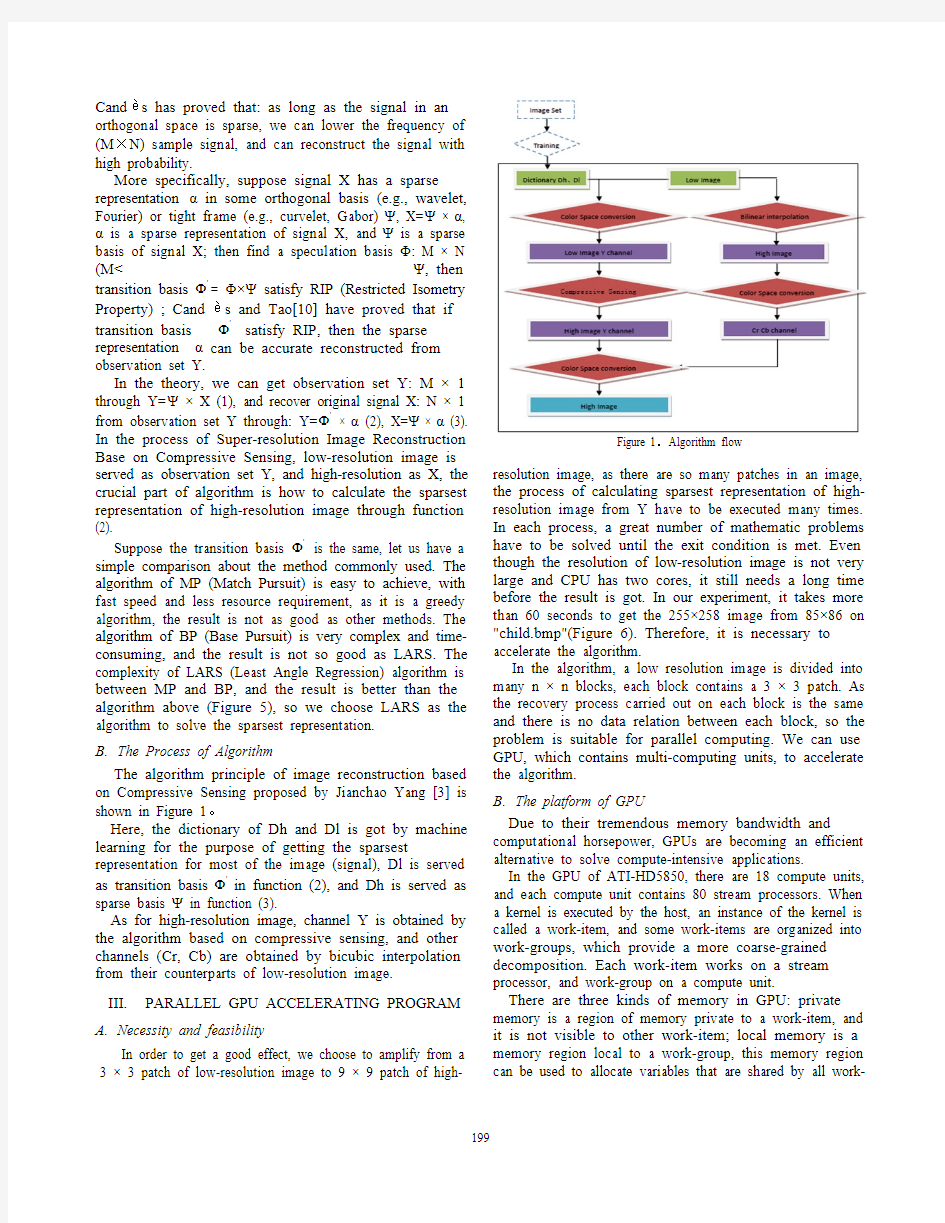

(M< observation set Y. In the theory, we can get observation set Y: M × 1 through Y=Ψ × X (1), and recover original signal X: N × 1 from observation set Y through: Y='Φ × α (2), X=Ψ × α (3). In the process of Super-resolution Image Reconstruction Base on Compressive Sensing, low-resolution image is served as observation set Y, and high-resolution as X, the crucial part of algorithm is how to calculate the sparsest representation of high-resolution image through function (2). Suppose the transition basis 'Φ is the same, let us have a simple comparison about the method commonly used. The algorithm of MP (Match Pursuit) is easy to achieve, with fast speed and less resource requirement, as it is a greedy algorithm, the result is not as good as other methods. The algorithm of BP (Base Pursuit) is very complex and time-consuming, and the result is not so good as LARS. The complexity of LARS (Least Angle Regression) algorithm is between MP and BP, and the result is better than the algorithm above (Figure 5), so we choose LARS as the algorithm to solve the sparsest representation. B.The Process of Algorithm The algorithm principle of image reconstruction based on Compressive Sensing proposed by Jianchao Yang [3] is shown in Figure 1。 Here, the dictionary of Dh and Dl is got by machine learning for the purpose of getting the sparsest representation for most of the image (signal), Dl is served as transition basis 'Φin function (2), and Dh is served as sparse basis Ψ in function (3). As for high-resolution image, channel Y is obtained by the algorithm based on compressive sensing, and other channels (Cr, Cb) are obtained by bicubic interpolation from their counterparts of low-resolution image. 
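As a compact summary of Section II, the relations (1) to (3) and the role of the two dictionaries can be chained as below. This is a sketch under assumptions: Φ denotes the measurement basis, Φ' is read as the product ΦΨ, and the ℓ1-constrained form of the recovery problem (with a tolerance ε that the paper does not name) is the standard formulation that LARS-type solvers address, not something the paper states explicitly.

```latex
\begin{align}
  Y &= \Phi X, \qquad \Phi \in \mathbb{R}^{M \times N},\; M < N
      && \text{(observation)} \\
  X &= \Psi \alpha
      && \text{(sparse representation)} \\
  Y &= \Phi \Psi \alpha = \Phi' \alpha, \qquad \Phi' = \Phi \Psi
      && \text{(transition basis)} \\
  \hat{\alpha} &= \arg\min_{\alpha} \lVert \alpha \rVert_{1}
      \quad \text{s.t.} \quad \lVert \Phi' \alpha - Y \rVert_{2}^{2} \le \epsilon
      && \text{(assumed recovery problem)} \\
  \hat{X} &= \Psi \hat{\alpha}
      && \text{(reconstruction)}
\end{align}
% With the learned dictionaries of Section II.B: \Phi' = D_l and \Psi = D_h,
% so a low-resolution patch y gives \hat{\alpha} from D_l, and the
% high-resolution patch is \hat{x} = D_h \hat{\alpha}.
```

Read this way, the same coefficient vector is estimated from the low-resolution patch with Dl and then reused with Dh, which is why the sparse solve dominates the cost of each patch.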
III. PARALLEL GPU ACCELERATING PROGRAM

A. Necessity and feasibility

To obtain a good result, we magnify each 3 × 3 patch of the low-resolution image to a 9 × 9 patch of the high-resolution image. Because an image contains many patches, the process of computing the sparsest representation of the high-resolution image from Y has to be executed many times. In each execution, a large number of numerical operations have to be carried out until the exit condition is met. Even though the low-resolution image is small and the CPU has two cores, it still takes a long time to obtain the result. In our experiment, it takes more than 60 seconds to produce the 255 × 258 image from the 85 × 86 image "child.bmp" (Figure 6). Therefore, it is necessary to accelerate the algorithm.

Figure 1. Algorithm flow

In the algorithm, a low-resolution image is divided into many n × n blocks, each containing a 3 × 3 patch. Because the recovery process carried out on each block is the same and there is no data dependence between blocks, the problem is suitable for parallel computing. We can use a GPU, which contains multiple compute units, to accelerate the algorithm.

B. The platform of GPU

Due to their tremendous memory bandwidth and computational horsepower, GPUs are becoming an efficient alternative for compute-intensive applications. The ATI HD5850 GPU has 18 compute units, and each compute unit contains 80 stream processors. When a kernel is launched by the host, each instance of the kernel is called a work-item, and work-items are organized into work-groups, which provide a more coarse-grained decomposition. Each work-item runs on a stream processor, and each work-group runs on a compute unit.
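The relation between a work-item and its work-group can be made concrete with a little index arithmetic. The sketch below is plain C, not code from the paper; the names `WorkItemPos`, `locate_work_item` and `work_group_count` are hypothetical, and it assumes a one-dimensional index space with a zero global offset.

```c
#include <stddef.h>

/* Position of one work-item inside a 1-D index space. */
typedef struct {
    size_t group_id;  /* which work-group the work-item belongs to */
    size_t local_id;  /* index of the work-item inside that group  */
} WorkItemPos;

/* Split a global id into (group, local) for a zero global offset.
 * local_size must be non-zero. */
WorkItemPos locate_work_item(size_t global_id, size_t local_size)
{
    WorkItemPos p;
    p.group_id = global_id / local_size;
    p.local_id = global_id % local_size;
    return p;
}

/* Number of work-groups needed to cover n work-items, rounded up. */
size_t work_group_count(size_t n, size_t local_size)
{
    return (n + local_size - 1) / local_size;
}
```

With a hypothetical local size of 64, `work_group_count(1764, 64)` gives 28 work-groups (1792 slots), so a launch of 1764 work-items would need either padding with a bounds check in the kernel or a local size that divides 1764 evenly.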
There are three kinds of memory in the GPU. Private memory is a region of memory private to a work-item and is not visible to other work-items. Local memory is a memory region local to a work-group; it can be used to allocate variables that are shared by all work-items in that work-group. Global memory is a memory region that permits read/write access by all work-items in all work-groups. Private memory and local memory are faster to read and write than global memory, but they are very small. On the ATI HD5850, each compute unit has 64K of local memory and 16K of private memory, and about 134M of global memory is usable.

C. Design of Parallel GPU Accelerating

The serial algorithm can be divided into several stages, and the time consumed by each stage can be recorded with the clock function. Taking the picture "child.bmp" as an example, the time cost of each stage is shown in Figure 2.

Figure 2. CPU execution time

The execution time is concentrated in the convolution stage (about 8 seconds) and the n × n block recovery stage (about 52 seconds). Therefore, we selected these two stages as the objects to be sped up.

1) Distribution of accelerate tasks

Convolution stage: The number of GPU threads equals the number of elements of the Y channel in the low-resolution image, and the thread ids are managed in two dimensions, so that after allocation every thread has a unique id. According to its id, each thread computes the location of the data it needs in the original matrix, performs its calculation, and writes the result to the corresponding position of the result matrix. Threads contend when reading the original matrix, but when writing data each thread is independent of the others. The kernel function of the convolution stage is shown in part 1) of Section D.
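To make the per-thread work of the convolution stage concrete, the following is a serial C reference that visits the same (row, column) pairs a two-dimensional thread grid would, one loop iteration per thread. It is a sketch, not the authors' code: the function name `conv_rows_reference` is hypothetical, the matrix is assumed to be row-major, and skipping taps that would read before the start of a row is an assumption, since the paper does not say how borders are handled.

```c
#include <stddef.h>

/* Serial reference for the convolution stage: element (row, col)
 * accumulates src[row][col - i] * taps[i] over the taps.
 * Assumption: taps that would read before the start of a row are skipped. */
void conv_rows_reference(const float *src, const float *taps, float *dst,
                         size_t height, size_t width, size_t len)
{
    for (size_t row = 0; row < height; row++) {       /* first thread index  */
        for (size_t col = 0; col < width; col++) {    /* second thread index */
            float value = 0.0f;
            for (size_t i = 0; i < len && i <= col; i++) {
                value += src[row * width + (col - i)] * taps[i];
            }
            /* Each (row, col) writes exactly one output element,
             * so writes never conflict between threads. */
            dst[row * width + col] = value;
        }
    }
}
```

Because every output element depends only on its own row of the input, the two outer loops can be replaced by independent threads without changing the result, which is the property the task distribution relies on.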
Recycling stages of n × n block: The low-resolution image is divided into small patches of the same size, 3 × 3, and the algorithm that computes the sparsest representation of each patch is the same, so the patches go through the same process with no data dependence. In the serial program for "child.bmp", they are processed in a for statement, one after another, 1764 times using the same algorithm. We therefore write a kernel function for the algorithm and assign the 1764 tasks to 1764 work-items, so that they can run in parallel.

2) GPU memory allocation

The private and local memory of the GPU is very limited, which is a major bottleneck for parallel performance. Allocating and using memory rationally is very important for good performance of the parallel algorithm. We use memory according to the following principles:

Allocate private or local memory for variables that take up little space and are accessed frequently;

Allocate global memory for variables that take up more space and are accessed infrequently;

Because memory cannot be allocated dynamically in the OpenCL architecture, all memory used by the kernel function is allocated once before execution and reused throughout the algorithm until the process ends, which reduces memory consumption.

D. Implement of Parallel GPU Accelerating

Following the design above, we program on the ATI Stream SDK and VS2008 platform using the OpenCL language.
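Before the kernels themselves, the one-work-item-per-patch assignment described above can be sketched as index arithmetic in plain C. The helper names `patch_positions` and `patch_origin` are hypothetical. The stride of 2 pixels (3 × 3 patches overlapping by one pixel) is an assumption that the paper does not state; it is used here only because it reproduces the reported count of 1764 patches for the 85 × 86 image.

```c
#include <stddef.h>

/* Number of patch positions along one image dimension. */
size_t patch_positions(size_t dim, size_t patch, size_t stride)
{
    if (dim < patch) {
        return 0;
    }
    return (dim - patch) / stride + 1;
}

/* Top-left pixel of the patch handled by a given work-item. */
typedef struct {
    size_t row;
    size_t col;
} PatchOrigin;

PatchOrigin patch_origin(size_t work_item, size_t patches_per_row,
                         size_t stride)
{
    PatchOrigin o;
    o.row = (work_item / patches_per_row) * stride;
    o.col = (work_item % patches_per_row) * stride;
    return o;
}
```

Under this assumption both dimensions yield 42 positions, so 42 × 42 = 1764 work-items cover the image, and the last work-item (id 1763) starts its patch at pixel (82, 82).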
1) The kernel function for the convolution stage is listed below:

    // src is the original matrix (row-major)
    // arr is the vector used for the convolution
    // dst is the result matrix
    // width is the width of matrix src
    // len is the length of vector arr (passed in by the host, because a
    // kernel cannot query the length of a buffer)
    __kernel void conv2(__global const float *src,
                        __global const float *arr,
                        __global float *dst,
                        uint width,
                        uint len)
    {
        // Coordinates of the current work-item in the thread matrix,
        // obtained through the OpenCL API get_global_id
        uint posx = get_global_id(0);
        uint posy = get_global_id(1);

        float value = 0.0f;
        for (uint i = 0; i < len; i++) {
            // Assumption: taps that would fall before the start of the
            // row are skipped
            if (posy >= i) {
                // Locate the source element from posx and posy
                uint poss = posx * width + (posy - i);
                value += src[poss] * arr[i];
            }
        }

        // Write the result to the corresponding place of the result matrix
        dst[posx * width + posy] = value;
    }

The thread matrix has the same size as the original matrix; each thread completes its assigned element, and together they produce the final result.

2) The kernel function of algorithm of LARS

Because most of this kernel is occupied by a single while statement, it is hard to divide into a smaller granularity. The while statement is long and complex, and the variables in each thread take up more than 60K (most of it float arrays). The Dh dictionary is about 324K and the Dl dictionary is about 576K. On the HD5850, the local memory of each work-group is 64K and the private memory is 16K, which is not even enough for one work-item (in which a thread runs), so most of the float arrays used in this kernel are allocated in global memory. This may be the reason why this kernel function does not perform very well. To reduce the length of the code, there are about twenty-five functions besides the kernel functions in the ".CL" file, which are called by the kernel functions.

Unlike in the C language, in the OpenCL standard a pointer is not permitted to be passed as a parameter, and a reference is not permitted either, so we define a new structure cArray. Its element size is a pointer to an integer array of two elements, which store the width and height of the float array, and its element data is a pointer to the float array. For example, given int s[2] and float d[91] allocated in memory, we create a variable arr of type cArray and initialize it with arr.size = s and arr.data = d; the cArray can then be passed as a parameter.

IV. EXPERIMENTS

This paper focuses on the GPU implementation of super-resolution image reconstruction based on compressive sensing. We experiment on a computer with an Intel Core2 Duo 2.33 GHz CPU and 2 GB of memory, and code with VS2008 and the AMD Stream SDK platform. We run the algorithm on the CPU and on the GPU (ATI-HD5850). The result of magnifying "child.bmp" from 85 × 86 to 255 × 258 is as follows:

Table 1. RESULT COMPARISON IN DIFFERENT PLATFORM (UNIT: MILLISECOND)

| | CPU | GPU-HD5850 | Speedup Ratio |
|---|---|---|---|
| Convolution stage | 7907 | 7.084 | 1129 |
| Recycling stages of n × n block | 52891 | 8825 | 5.993 |
| Whole program | 60828 | 9642 | 6.309 |

Some other experiment results are shown in Figures 3 and 4:

Figure 3. Convolution time comparison (unit: millisecond), CPU versus GPU (ATI-HD5850) on Img1, Img2 and Img3

Figure 4. Whole time comparison (unit: millisecond), CPU versus GPU (ATI-HD5850) on Image1, Image2 and Image3

From Table 1 and Figures 3 and 4, we can see that the parallel program using the ATI HD5850 has a significant effect compared with the serial program. The parallel algorithm performs very well in the convolution stage, where the speedup is 1129 times. However, in the n × n block stage the parallel algorithm does not perform as well, because the LARS algorithm imposes two limits. First, a while statement takes up nearly all of the kernel function, the number of iterations is uncertain, and each iteration depends on data from the previous one, so a small granularity cannot be achieved. Second, the kernel function needs at least 60K of memory for variables and float arrays, so most of the float arrays are allocated in global memory, which is slower to read and write than private memory and local memory. In each work-item, a large part of the time is wasted waiting to read or write data in global memory.

During our GPU programming, there are some problems we need to solve:

1> How to divide a problem with data dependence into small-granularity problems that are more suitable for parallel execution. If a matrix is too large to be allocated in private or local memory and is finally allocated in global memory, how to achieve good performance in a series of operations on this matrix by moving some of its data into local memory, which is faster to read and write, in the parallel algorithm.

2> If the resolution of an image is too high, the global memory may not be enough for the kernel function in the n × n block stage.

V. CONCLUSION

This paper proposes a parallel program, with the aid of the ATI-HD5850, for image super-resolution based on compressive sensing on the ATI Stream platform. We select two parts of the serial algorithm as the objects to be sped up according to the execution time of each stage. In the first stage, the whole work is divided into small granularity and makes full use of the GPU's computational horsepower. In the second stage, the speed of reading and writing data in global memory is a great obstacle to the performance of the parallel algorithm. As a result, the parallel program used only about one-sixth of the time used by the serial program.

VI. ACKNOWLEDGEMENTS

We would like to thank AMD Corporation.
They gave us a lot of help, such as the GPU platform (ATI HD5850) and OpenCL language materials.

VII. REFERENCE

[1] John Wright, Allen Y. Yang, Arvind Ganesh, et al. Robust Face Recognition via Sparse Representation. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2008.

[2] Jianchao Yang, Kai Yu, et al. Linear Spatial Pyramid Matching Using Sparse Coding for Image Classification. IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2009.

[3] Jianchao Yang, John Wright, Thomas Huang, Yi Ma. Image Super-Resolution as Sparse Representation of Raw Image Patches. IEEE Conference on Computer Vision and Pattern Recognition, 2008.

[4] M. Andrecut. Fast GPU Implementation of Sparse Signal Recovery from Random Projections. Institute for Biocomplexity and Informatics, University of Calgary.

[5] Alexandre Borghi, et al. A Simple Compressive Sensing Algorithm for Parallel Many-Core Architectures.

[6] E. Candès. Compressive sampling. Proceedings of the International Congress of Mathematicians, Madrid, Spain, 2006, 3: 1433-1452.

[7] E. Candès, J. Romberg, T. Tao. Robust uncertainty principles: Exact signal reconstruction from highly incomplete frequency information. IEEE Transactions on Information Theory, 2006, 52(2): 489-509.

[8] E. J. Candès, T. Tao. Near optimal signal recovery from random projections: Universal encoding strategies? IEEE Transactions on Information Theory, 52(12), December 2006.

[9] D. L. Donoho. Compressed Sensing. IEEE Transactions on Information Theory, 2006, 52(4): 1289-1306.

[10] E. Candès, T. Tao. Decoding by linear programming. IEEE Transactions on Information Theory, 2005, 51(12): 4203-4215.

[11] Technical Overview: ATI Stream Computing. AMD.

Figure 5. The results of different algorithms based on compressive sensing (from left to right: the result of Matching Pursuit, the result of Basis Pursuit, the result of Least Angle Regression (used in this paper))

Figure 6. The flower and child images used in the experiments (from left to right: low-resolution image, super-resolution image using bicubic interpolation, super-resolution image using compressive sensing (used in this paper), original image from which the low-resolution image was produced)
人工智能在医学影像领域落地可能性较大,在技术突破、数据获取、资源整合方面具备优势,并快速推进取得明显先发优势的平台型公司最具潜力,同时,技术明显领先的公司有望通过技术授权获得变现。推荐万东医疗、科大讯飞、东软集团。一级市场建议关注推想科技、依图科技、雅森科技、健培科技、汇医慧影。 6.风险提示: 技术进步不达预期;资源整合速度不达预期;市场竞争程度超出预期 桥堆型号与参数对照表 力邦电磁炉故障代码 E1:无锅.每隔3秒一声短笛音报警.连续性分钟转入待机. E2:电源电压过低.两长三短笛音报警.响两次转入待机.(间隔5秒). E3:电源电压过高.两长四短笛音报警.间隔5秒响一次. E4:锅超温.三长三短笛音报警.响两次转入待机.(间隔5秒). E6:锅空烧.两长三短笛音报警.响两次转入待机.(间隔5秒). E0:IGBT超温.四长三短笛音报警.响两次转入待机.(间隔5秒). E7:TH开路(管温传感器).四长五短笛音报警.间隔5秒响一次. E8:TH短路(管温传感器).四长四短笛音报警.间隔5秒响一次. E9:锅传感器开路.三长五短笛音报警.间隔5秒响一次. EE:锅传感器短路.三长四短笛音报警.间隔5秒响一次. E5:VCE过高.无声.重新试探启动. 定时结束:响一长声转入待机. 无时基信号.灯不亮.响两秒停两秒.连续. 美联电磁炉自动保护出错屏显代码: E---0 输入电压过低] E---1 输入电压过高 E---2 IGBT温度传感器开路或温度过低保护 E---3 IGBT温度传感器短路或温度过高保护 E---4 灶面温度传感器开路或温度过低保护 E---5 灶面温度传感器短路或温度过高保护] 开机自动关机:机内超温保护. 澳柯玛电磁炉 数码管显示故障代码及排除故障 (无数码显示的电磁炉不在范围之内) 现象故障原因检修方法 显示E1 炉面温度超过235℃并持续3S 电磁炉炉面温度冷却后再开机 显示E2 IGBT温度超过85℃并持续3S 电磁炉内部温度冷却后再开机 显示E3 检测电流过大检测电压是否正常或负载是否过大 显示E4 输入电压过低调节电源电压或更换主控板 显示E5 输入电压过高调节电源电压或更换主控板 显示E6 炉面上热敏电阻短路检查线路或更换热敏电阻 显示E7 炉面上热敏电阻断路检查线路或更换热敏电阻 显示E8 IGBT处的热敏电阻短路检查线路或更换热敏电阻 显示E9 IGBT处的热敏电阻断路检查线路或更换热敏电阻 注:线路板为PD版本的机型,增加E0代码,缺少E5、E6、E9代码,E0表示内部故障,E4表示电源欠压/过压,E7表示炉面的热敏电阻断路/开路,E8表示IGBT处的热敏电阻短路/短路。数码管显示故障代码及排除故障 苏泊尔电磁炉常见故障代码 稳压管型号大全 2009-12-23 21:52 稳压二极管型号对照表 美标稳压二极管型号 1N4727 3V0 1N4728 3V3 1N4729 3V6 1N4730 3V9 1N4731 4V3 1N4732 4V7 1N4733 5V1 1N4734 5V6 1N4735 6V2 1N4736 6V8 1N4737 7V5 1N4738 8V2 1N4739 9V1 1N4740 10V 1N4741 11V 1N4742 12V 1N4743 13V 1N4744 15V 1N4745 16V 1N4746 18V 1N4747 20V 1N4748 22V 1N4749 24V 1N4750 27V 1N4751 30V 1N4752 33V 1N4753 36V 1N4754 39V 1N4755 43V 1N4756 47V 1N4757 51V 需要规格书请到以下地址下载, https://www.doczj.com/doc/fe16738961.html,/products/Rectifiers/Diode/Zener/ 经常看到很多板子上有M记的铁壳封装的稳压管,都是以美标的1N系列型号标识的,没有具体的电压值,刚才翻手册查了以下3V至51V的型号与电压的对照值,希望对大家有用 1N4727 3V0 1N4728 3V3 1N4730 3V9 1N4731 4V3 1N4732 4V7 1N4733 5V1 1N4734 5V6 1N4735 6V2 1N4736 6V8 1N4737 7V5 1N4738 8V2 1N4739 9V1 1N4740 10V 1N4741 11V 1N4742 12V 1N4743 13V 1N4744 15V 1N4745 16V 1N4746 18V 1N4747 20V 1N4748 22V 1N4749 24V 1N4750 27V 1N4751 30V 1N4752 33V 1N4753 36V 
1N4754 39V 1N4755 43V 1N4756 47V 1N4757 51V DZ是稳压管的电器编号,是和1N4148和相近的,其实1N4148就是一个0.6V的稳压管,下面是稳压管上的编号对应的稳压值,有些小的稳压管也会在管体上直接标稳压电压,如5V6就是5.6V的稳压管。 1N4728A 3.3 1N4729A 3.6 1N4730A 3.9 1N4731A 4.3 1N4732A 4.7 1N4733A 5.1 1N4734A 5.6 1N4735A 6.2 1N4736A 6.8 1N4737A 7.5 1N4738A 8.2 1N4739A 9.1稳压管型号_标号对照表
