PLASMA: Combining Predicate Logic and Probability for Information

Fusion and Decision Support

Francis Fung1, Kathryn Laskey2, Mike Pool1, Masami Takikawa1, Ed Wright1

1Information Extraction and Transport, Inc.

1911 North Fort Myer Dr., Suite 600

Arlington, VA 22209

2Department of Systems Engineering and Operations Research

George Mason University

Fairfax, VA 22030

{fung, pool, takikawa, wright}@https://www.doczj.com/doc/d73203008.html,

klaskey@https://www.doczj.com/doc/d73203008.html,

Abstract

Decision support and information fusion in complex domains requires reasoning about inherently uncertain properties of and relationships among varied and often unknown number of entities interacting in differing and often unspecified ways. Tractable probabilistic reasoning about such complex situations requires combining efficient inference with logical reasoning about which variables to include in a model and what the appropriate probability distributions are. This paper describes the PLASMA architecture for predicate logic based assembly of situation-specific probabilistic models. PLASMA maintains a declarative representation of a decision theoretically coherent first-order probabilistic domain theory. As evidence about a situation is absorbed and queries are processed, PLASMA uses logical inference to reason about which known and/or hypothetical entities to represent explicitly in the situation model, which known and/or uncertain relationships to represent, what functional forms and parameters to specify for the local distributions, and which exact or approximate inference and/or optimization techniques to apply. We report on a prototype implementation of the PLASMA architecture within IET’s Quiddity*Suite, a knowledge-based probabilistic reasoning toolkit. Examples from our application experience are discussed.

Introduction

Decision support in complex, dynamic, uncertain environments requires support for situation assessment. In an open world, situation assessment involves reasoning about an unbounded number of entities of different types interacting with each other in varied ways, giving rise to observable indicators that are ambiguously associated with the domain entities generating them. There are strong ar-guments for probability as the logic of choice for reasoning Copyright ? 2005, American Association for Artificial Intelligence

(https://www.doczj.com/doc/d73203008.html,). All rights reserved.under uncertainty (e.g., Jaynes, 2003). Graphical models, and in particular Bayesian networks (Pearl, 1988), provide a parsimonious language for expressing joint probability distributions over large numbers of interrelated random variables. Efficient algorithms are available for updating probabilities in graphical models given evidence about some of the random variables. For this reason, Bayesian networks have been widely and successfully applied to situation assessment (Laskey et. al., 2000; Das, 2000). Despite these successes, there are major limitations to the applicability of standard Bayesian networks for situa-tion assessment. In open-world problems, it is impossible to specify in advance a fixed set of random variables and a fixed dependency structure that is adequate for the range of problems a decision support system is likely to encounter. Nevertheless, graphical models provide a powerful lan-guage for parsimonious specification of recurring patterns in the structural features and interrelationships among do-main entities. In recent years, a number of languages have emerged extending the expressiveness of Bayesian net-works to represent recurring structural and relational patterns as objects or frames with attached probabilistic information (Laskey and Mahoney, 1997; Koller and Pfeffer, 1997; Bangs? and Wuillemin, 2000). There is an emerging consensus around certain fundamental ap-proaches to representing uncertain information about the attributes, behavior, and interrelationships of structured entities (Heckerman, et al., 2004). This consensus reflects fundamental logical notions that cut across surface syntac-tic differences. The newly emerging languages express probabilistic information as collections of graphical model fragments, each of which represents a related collection of generalizations about the domain. These model fragments serve as templates that can be instantiated any number of times and combined to form arbitrarily complex models of a domain. Recent work in probabilistic logic has clarified the semantics of such languages (e.g., Laskey, 2004; Sato, 1998; Bacchus, et al., 1997).

PLASMA has its logical basis in Multi-Entity Bayesian Network (MEBN) logic. A theory in MEBN logic consists of a set of Bayesian network fragments (MEBN fragments, or MFrags) that collectively express a joint probability distribution over a variable, and possibly unbounded num-ber of random variables. MEBN logic can express a prob-ability distribution over interpretations of any finitely axiomatizable theory in first-order logic, and can represent the accrual of new information via Bayesian conditioning (Laskey, 2004).

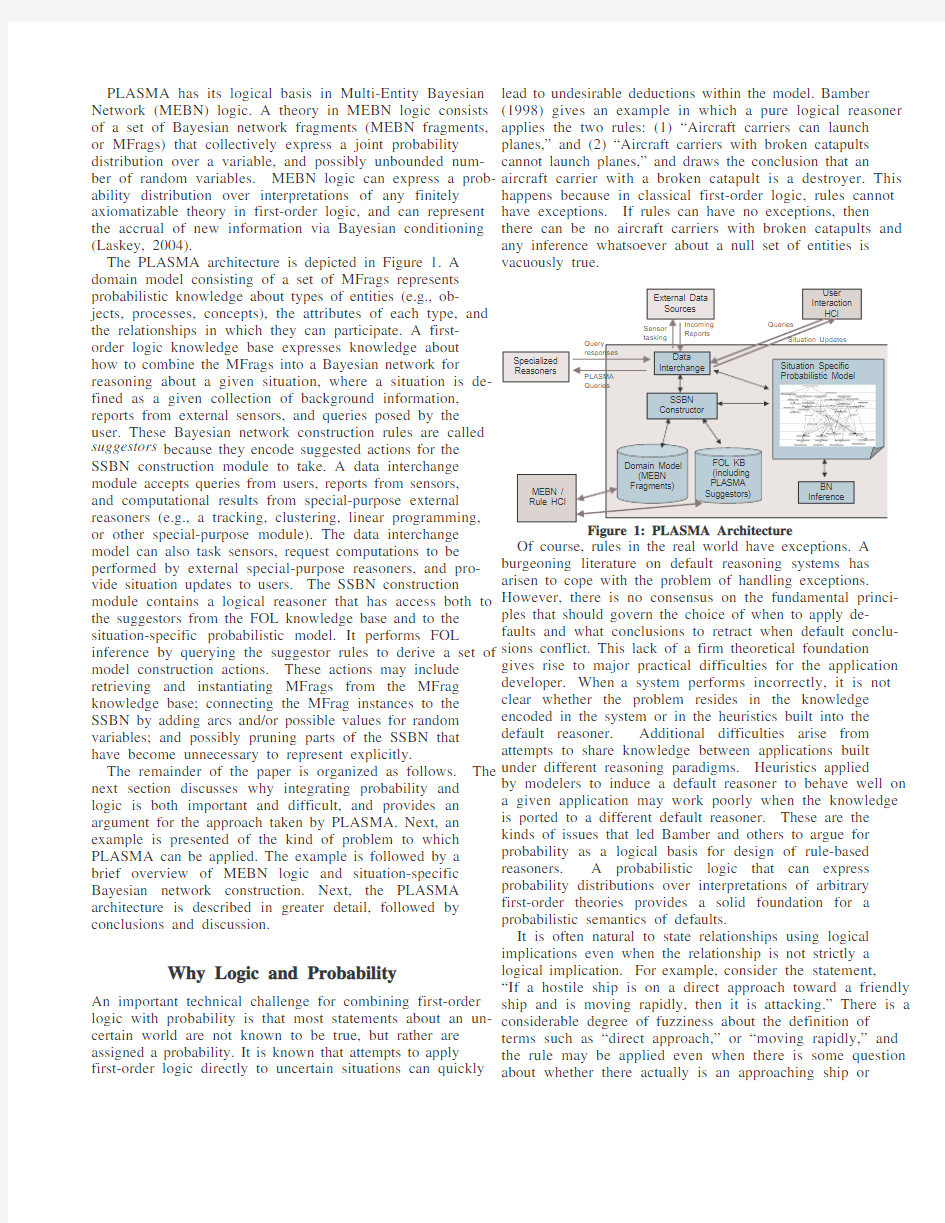

The PLASMA architecture is depicted in Figure 1. A domain model consisting of a set of MFrags represents probabilistic knowledge about types of entities (e.g., ob-jects, processes, concepts), the attributes of each type, and the relationships in which they can participate. A first-order logic knowledge base expresses knowledge about how to combine the MFrags into a Bayesian network for reasoning about a given situation, where a situation is de-fined as a given collection of background information, reports from external sensors, and queries posed by the user. These Bayesian network construction rules are called suggestors because they encode suggested actions for the SSBN construction module to take. A data interchange module accepts queries from users, reports from sensors, and computational results from special-purpose external reasoners (e.g., a tracking, clustering, linear programming, or other special-purpose module). The data interchange model can also task sensors, request computations to be performed by external special-purpose reasoners, and pro-vide situation updates to users. The SSBN construction module contains a logical reasoner that has access both to the suggestors from the FOL knowledge base and to the situation-specific probabilistic model. It performs FOL inference by querying the suggestor rules to derive a set of model construction actions. These actions may include retrieving and instantiating MFrags from the MFrag knowledge base; connecting the MFrag instances to the SSBN by adding arcs and/or possible values for random variables; and possibly pruning parts of the SSBN that have become unnecessary to represent explicitly.

The remainder of the paper is organized as follows. The next section discusses why integrating probability and logic is both important and difficult, and provides an argument for the approach taken by PLASMA. Next, an example is presented of the kind of problem to which PLASMA can be applied. The example is followed by a brief overview of MEBN logic and situation-specific Bayesian network construction. Next, the PLASMA architecture is described in greater detail, followed by conclusions and discussion.

Why Logic and Probability

An important technical challenge for combining first-order logic with probability is that most statements about an un-certain world are not known to be true, but rather are assigned a probability. It is known that attempts to apply first-order logic directly to uncertain situations can quickly lead to undesirable deductions within the model. Bamber (1998) gives an example in which a pure logical reasoner applies the two rules: (1) “Aircraft carriers can launch planes,” and (2) “Aircraft carriers with broken catapults cannot launch planes,” and draws the conclusion that an aircraft carrier with a broken catapult is a destroyer. This happens because in classical first-order logic, rules cannot have exceptions. If rules can have no exceptions, then there can be no aircraft carriers with broken catapults and any inference whatsoever about a null set of entities is vacuously true.

Probabilistic Model

BN

Of course, rules in the real world have exceptions. A burgeoning literature on default reasoning systems has arisen to cope with the problem of handling exceptions. However, there is no consensus on the fundamental princi-ples that should govern the choice of when to apply de-faults and what conclusions to retract when default conclu-sions conflict. This lack of a firm theoretical foundation gives rise to major practical difficulties for the application developer. When a system performs incorrectly, it is not clear whether the problem resides in the knowledge encoded in the system or in the heuristics built into the default reasoner. Additional difficulties arise from attempts to share knowledge between applications built under different reasoning paradigms. Heuristics applied by modelers to induce a default reasoner to behave well on a given application may work poorly when the knowledge is ported to a different default reasoner. These are the kinds of issues that led Bamber and others to argue for probability as a logical basis for design of rule-based reasoners. A probabilistic logic that can express probability distributions over interpretations of arbitrary first-order theories provides a solid foundation for a probabilistic semantics of defaults.

It is often natural to state relationships using logical implications even when the relationship is not strictly a logical implication. For example, consider the statement, “If a hostile ship is on a direct approach toward a friendly ship and is moving rapidly, then it is attacking.” There is a considerable degree of fuzziness about the definition of terms such as “direct approach,” or “moving rapidly,” and the rule may be applied even when there is some question about whether there actually is an approaching ship or

whether the report is spurious. We would like to allow the modeler to express model construction actions in a natural rule form, without forcing the modeler to explicitly ac-count for probabilistic relationships in the suggestor itself. We have touched on some of the many difficulties that can arise from the attempt to give ad-hoc probabilistic interpretations to logical rules. IET’s approach to overcoming this technical challenge is: to maintain a fully probabilistic representation of the situation-specific model, to use logical inference only as a guide for constructing and augmenting the model, and then to compute query responses by performing probabilistic inference on the constructed model. To achieve this, PLASMA provides a mechanism for promoting likely statements into provi-sional assumptions that are analyzed by a logical inference engine as if they were true. The inference engine is used to evaluate suggestor triggers that guide the construction of a situation-specific Bayesian network. That is, logical rea-soning with default assumptions is used to select hypotheses that are sufficiently promising to merit explicit reasoning about their relative likelihoods, and exact or approximate probabilistic inference is used to evaluate these likelihoods.

For example, suppose that a limited amount of flu vac-cine exists and we require judicious distribution in order to prevent localized outbreaks from turning into national epi-demics. Suppose that we define a local outbreak as any situation in which more than ten people in a neighborhood have the flu. Suppose that our data are at the symptom level and that we also have data about patient addresses. Our reasoning tools must be able to adroitly move back and forth between probabilistically diagnosing individual cases based on symptoms, aggregating the individual cases, reasoning about which likely flu holders live in the same neighborhood, and evaluating the likelihood of out-breaks. PLASMA accomplishes this via threshold values on model construction operations. So, for example, we might set a threshold of .8 indicating that for purposes of our suggestor reasoning tools, any person whose likelihood of having the flu is greater than .8 is simply assumed to have the flu. These individuals are regarded as definite flu cases for purposes of constructing the situation-specific model, but when the model is applied to draw inferences, their medical conditions are again treated as uncertain.

A Case Study

We illustrate the PLASMA functionality using a scenario taken from Cohen, Freeman and Wolf (1996). The article presents a model of human cognition that the authors argue accounts for the decision making process of the U.S. captain and Tactical Air Officer (TAO). The authors state that the officers’ reasoning was not Bayesian. We argue that although they are correct that this kind of situated, adaptive reasoning cannot be captured by a standard Bayesian network, it can be modeled quite naturally by MEBN logic and situation-specific Bayesian network construction.

As the scenario begins, U.S. ships were conducting freedom of navigation demonstrations in Libyan-claimed waters. The Libyan leader was threatening to attack any vessel that ventured below the “Line of Death.” One night, a gunboat emerged from a Libyan port, turned toward an AEGIS-equipped cruiser, and increased its speed. The U.S. officers believed the gunboat’s behavior indicated an intent to attack. First, a direct approach at a speed of 75 knots by a combat vessel from a hostile power is strongly indicative of an attack. Second, the Aegis cruiser was a logical target for an attack by Libya, being 20 miles within the "Line of Death." Furthermore, apparent missile launches toward other American ships had been detected earlier that day, indicating that Libya was actively engaging surface vessels.

However, there were conflicting indicators. The gunboat was ill-equipped to take on the Aegis cruiser, let alone the larger U.S. fleet poised to come to its aid. The Libyans possessed air assets that were far better suited for such an assault. However, the officers reasoned that the Libyans might be willing to use every asset at hand against the U.S., regardless of the consequences to the striking asset. On the other hand, a more natural target for attack would have been another U.S. cruiser even further below the “Line of Death.”

A more serious complication was that the officers believed that the gunboat probably did not have the capability to detect the cruiser at the range at which it had turned inbound. Perhaps, they reasoned, the gunboat was receiving localization data from another source, or perhaps it possessed superior sensing technology of which U.S. intelligence was unaware. On the other hand, virtually any maneuver by the track would have put it on a vector to some friendly ship. Perhaps its direct line of approach was merely a coincidence.

Thus, the evidence regarding the gunboat’s intent was ambiguous and conflicting. The officers considered several hypotheses, juxtaposing each against the available background information and situation-specific evidence, in an attempt to ascertain the intent of the gunboat. They considered the hypothesis that the gunboat was on patrol, but discarded that hypothesis because its speed was too fast for a ship on patrol. The hypothesis they finally settled on was that the gunboat was following a plan of opportunistic attack (i.e., to proceed into the Gulf ready to engage any ship it encountered). This hypothesis explained its rapid speed, its inability to localize the Aegis cruiser, and the fact that it was not the most appropriate asset to target against a U.S. Aegis cruiser.

We developed a knowledge base consisting of:

? A set of entity types that represents generic knowledge about naval conflict. These include:

o Combatants: Friendly and hostile combatant MFrags represent background knowledge about

their high-level goals and their level of

aggressiveness.

o Plans: There are plans for provoked attacks, opportunistic attacks, and patrols, as well as a

generic non-specific plan type to represent a plan

type not included among the hypotheses being

considered.

o Attack Triggers: An attack trigger is a kind of behavior that might provoke an attack. We

modeled the U.S. presence below the “Line of

Death” as an instance of an attack trigger.

o Reports: Reports are used to model observable evidence that bears on the hypotheses under

consideration.

? A set of suggestors that represents knowledge about which hypotheses should be explicitly considered in given kinds of situation. Suggestors are expressed as first-order rules. The rules operate on observed evidence (e.g., the cruiser is situated below the “Line of Death”; the gunboat is on a rapid direct approach), together with information about the current status of the Bayesian network (e.g., no attack hypothesis has yet been enumerated for the gunboat), and nominate suggested network construction actions. Future implementations will allow suggestors to nominate pruning actions that delete low-probability hypotheses from the probabilistic KB, to help make inference more efficient.

?Particular facts germane to the scenario, including background knowledge and observed evidence.

The suggestors for the gunboat model were based on the following general rules, which are reflective of the kinds of reasoning the officers applied in this scenario.

?Any ship sailing in its own territorial waters may be on patrol. This was implemented as a suggestor nominating a patrol as a possible plan for any hostile ship.

?If a friendly ship is engaging in behavior against which enemy has threatened to retaliate, then an attack may occur. In this particular scenario, venturing within the Line of Death might provoke an attack. ? A hostile asset approaching on a bearing directly toward a ship may be attacking the ship it is approaching. A rapidly approach strengthens this inference. These rules were not implemented as suggestors, but were instead represented as likelihood information for the attack plans.

?In a provoked attack, the attacker must be able to localize the target it is attacking in advance of the attack. In an opportunistic attack, this is unnecessary.

This knowledge was implemented as likelihoods on localization given provoked and opportunistic attacks.

There is also a suggestor that fires when the probability of an attack exceeds a threshold, that generates a request for evidence on whether the attacker can localize the target, and another suggestor that inserts the result of the report if the report exists. ?When there is evidence consistent with an attack but the evidence conflicts with the hypothesis of a deliberate, provoked attack, then opportunistic attack is a possible explanation. This rule was implemented as follows. There was a suggestor nominating a generic “other” plan for any asset, representing a plan other than the ones explicitly enumerated. There was

another suggestor specifying that if an attack has been hypothesized, but the probability of “other” exceeds a threshold (an indication of conflicting evidence), then hypothesize an opportunistic attack as a possible plan for the asset.

We exercised PLASMA on this scenario, obtaining qualitative results similar to the analysis reported by Cohen, et al. Initially, the provoked attack hypothesis dominates, but as the evidence is processed, its incongruity with the provoked attack hypothesis becomes more apparent. This increases the probability of the “other” hypothesis. A natural hypothesis to consider is the patrol hypothesis, but it too is incongruent with the available evidence. When the opportunistic attack hypothesis is nominated in response to the failure of other plans to account for the evidence, it becomes the dominant hypothesis.

MEBN Logic

MEBN logic combines the expressive power of first-order predicate calculus with a sound and logically consistent treatment of uncertainty. MEBN fragments (MFrags) use directed graphs to specify local dependencies among a collection of related hypotheses. MTheories, or collections of MFrags that satisfy global consistency constraints, implicitly specify joint probability distributions over unbounded and possibly infinite numbers of hypotheses. MEBN logic can be used to reason consistently about complex expressions involving nested function application, arbitrary logical formulas, and quantification.

A set of built-in MFrags gives MEBN logic the expressive power of first-order logic.

A set of MFrags representing knowledge for modeling a gunboat attack scenario is shown as Figure 2. Each MFrag contains a set of random variables. Random variables are functions that map the entities filling their arguments to a set of possible values characteristic of the random variable. For example, IntendedOutcome(p) maps a plan p to a value representing the outcome the agent intends to achieve. Each MFrag contains resident random variables, whose distribution is defined in the MFrag, input random variables, whose distribution is defined in another MFrag, and context random variables, which represent conditions under which the distribution defined in the MFrag applies. Whether a random variable is resident, input or context is indicated by shading and bordering in Figure 2: bold-bordered and dark gray indicates context, light gray and thin-bordered indicates input, and white indicates resident. Our example also includes report MFrags which are not shown in the figure for reasons of space.

Logical and mathematical relationships can also be expressed as MFrags. For example, in the gunboat scenario the officers received a report that the gunboat was headed directly toward the U.S. cruiser. For purposes of this illustrative example, we chose to represent the direction an asset was traveling with respect to its target as a random variable with four possible values:

DirectApproach , GenerallyToward , NotToward , and NotRelevant , and we assumed that evidence was provided as likelihoods for these values. In a fielded decision support application, a sensor would provide likelihood information about the heading of the gunboat and the geospatial coordinates of the U.S. ship. A coordinate transformation would be applied to obtain the bearing of the gunboat with respect to the U.S. ship. Although an implementation might choose for efficiency reasons to have coordinate transformations performed externally, MEBN logic is capable of representing such transformations as MFrags.

Figure 2: MFrags for Naval Attack MTheory

Typically, context random variables represent type and relational constraints that must be satisfied for the MFrag to be meaningful. A default distribution assigns a probability distribution when the context constraints are not met. In some cases, the default distribution will assign probability 1 to the undefined symbol ⊥; in other cases, it is appropriate to assign a “leak” distribution representing the influence of not-yet-enumerated parents.

Figure 3: Situation-Specific Bayesian Network

The MFrags of Figure 2 define a coherent (that is, consistent with the constraints of probability theory) probability distribution over entities in the domain. This probability distribution can be used to reason about group membership of different numbers of objects reported on by sensors with varying spatial layouts. For instance, Figure 3 shows a situation-specific Bayesian network (SSBN) for a situation with two friendly cruisers and two enemy assets,

and several attack hypotheses. This SSBN was generated by instantiating and assembling MFrags that represent the relevant entities.

A straightforward combinatorial argument shows, however, that this extremely simple MTheory can result in situation-specific models of staggering computational complexity when applied to problems with many assets and significant uncertainty about the plans the assets are pursuing. In typical problems encountered in applications, excellent results can be obtained by applying heuristic model construction rules to identify and include those random variables most relevant to a given query, and to exclude those having sufficiently weak influence to be ignored.

PLASMA Architecture

To address the challenge of open-world situation assess-ment, IET has developed, as part of its Quiddity*Suite modeling and inference environment, an Execution Management framework (Quiddity*XM) for specifying and constructing situation-specific probabilistic models. Situation-specific models are assembled from domain models expressed in the Quiddity*Modeler probabilistic frame and rule language. A Quiddity*Modeler frame rep-resents a type of entity, its attributes (slots in the frame), and the relationships it can bear to other entities (reference slots that can be filled by instances of frames). Each frame encodes an MFrag, where the resident random variables correspond to slots for which the frame defines a distribu-tion, the input random variables correspond to slots in other frames that influence the distributions of resident random variables, and the context random variables corre-spond to logical conditions under which the distributions are well-defined. A domain model also includes sugge-stors , which are rules that represent knowledge about how to construct situation-specific models. Suggestors can specify which frames to instantiate or modify in a given situation, and how to connect the frame instances to exist-ing portions of the model. The execution manager uses suggestors to manage the size and complexity of the situa-tion-specific network. Quiddity*XM draws from the data-driven and query-driven paradigms (Wellman, et al., 1992) of knowledge-based model construction to assemble situation-specific models in response to both incoming data and queries.

As the suggestors recognize trigger conditions, they make suggestions about which kind of objects or events exist or might exist in the domain, and the kinds of relationships these objects bear to the previously observed or posited objects. In the original Quiddity*XM, suggestors were implemented as small, often stateless, procedural code modules. This approach is powerful, and provides all the computational advantages of procedural code versus declarative languages. However, the need to write programs to express model construction knowledge puts an undue burden on modelers to perform outside their area of primary expertise. IET has attempted to make

Attack Capability MFrag

Combatant MFrag

Isa(Asset,?a)

Isa(NavalPlan,?p)

ProvokedAttack Existence MFrag

OpportunisticAttack Existence MFrag

Isa(Attack,?p)

?t=Target(?p)

?c=Owner(Platform(?p))

AttackTrigger MFrag

?a=Platform(?p))

suggestors less opaque with respect to our representation language by moving them into the declarative knowledge-based reasoning system within which our modeling language is embedded. Thus, suggestors are represented as first-order rules encoding knowledge about which hypotheses to enumerate and which relationships to represent, as a function of current beliefs, current evidence, and the current query. The PLASMA architecture allows the reasoner to move gracefully back and forth between conclusions reached in the probabilistic reasoning system and the first order logical reasoning system. Logical reasoning about model construction actions is interleaved with probabilistic inference within the current model.

We illustrate with our Libyan gunboat example how PLASMA can implement first order-logic to represent knowledge that facilitates the efficient creation of situation specific networks. The DSS knowledge base contains a domain model consisting of modeler-specified MFrags and SSBN construction rules. These MFrags are represented as frames with uncertain slots annotated with probability information. In this problem, there are frames representing combatants, naval assets, plans, reports, and other entities of interest. These MFrags can represent relationships between entities of different types (e.g., the owner slot of a naval asset instance is filled by a combatant instance; the target slot of a hostile attack plan instance is filled by a friendly naval asset instance). Along with the MFrags, the modeler also creates a set of suggestors that describe conditions under which the situation-specific probabilistic model should be revised, and network construction actions to be taken under the given conditions. These suggestors are represented as Prolog-style rules that suggest conjunctions of network construction actions to be executed as a bundle.

At run-time, this knowledge base is exercised against reports and user queries to construct a situation-specific Bayesian network that represents query-relevant information about the current situation. At any given time, there will be a current situation-specific Bayesian network that reflects currently available reports and their implications for queries of current interest. In our example, the query of interest is the gunboat’s plan. Given this current situation-specific Bayesian network, the Bayesian inference module draws conclusions about the likelihood of the different possible plans for the gunboat.

Changes to the current SSBN can occur as a result of incoming reports, queries posed by the user, or internal triggers stated in terms of the current belief state. The current prototype uses a Prolog-type language and inference, reasoning with Horn clauses. The PLASMA model construction paradigm can be described as follows. First, there is the FOL KB, where the modeler defines the vocabulary to express trigger conditions. Using the supplied interface predicates for interfacing with the probabilistic KB, the modeler can capture a fine-grained, expressive set of assertions that declare when particular model construction actions should take place. For instance, we can define a trigger that asserts that “IF ship is slightly below LOD, THEN moderately severe attack trigger should be hypothesized”:

instigatesAttackTrigger(?shp,Libya,Moderate) :- location(?shp, BelowLOD, Slightly);

Then, the modeler declares what actions should be taken as a result of the appropriate trigger conditions being satisfied. These are declared in a convenient form

For instance, the following suggestor indicates that a hypothetical instance of AttackTrigger (i.e., one with an uncertain probability of existing) should be declared if the trigger condition holds.

hypotheticalEntity(AttackTrigger,"instigator",

?platform):- instigatesAttackTrigger(?platform,?agnt,

?severity) The hypotheticalEntity predicate is one of a set of generic PLASMA predicates which modelers can use in the construction of domain-specific suggestors. This predicate is used to suggest the model construction action of creating a hypothetical instance of the AttackTrigger frame and setting its “instigator” slot to the frame instance bound to ?platform. Specifically, when a binding succeeds for hypotheticalEntity such that its first argument is a frame type, its second argument is a reference slot for the frame type, and its third argument is an instance of the correct type for the slot, the following network constructions are suggested:

?Create a hypothetical instance of the frame type (AttackTrigger in this example);

?Set the third argument as a possible value of the slot denoted by the second argument (in this example, the U.S. cruiser, which binds to ?platform, becomes a possible value for the “instigator” slot of the AttackTrigger frame instance).

A hypothetical instance of a frame corresponds to a possible entity of the given type that may or may not be an actual entity. Each hypothetical entity has an “exists” slot, with a probability distribution representing the likelihood that the entity actually exists. In this case, the attack trigger is hypothetical because it is not known whether presence below the Line of Death actually will trigger an attack.

As reports arrive, new nodes are added to the SSBN to represent the reports; the reports are connected to entities (platform and plan instances) that might explain them; beliefs are updated to reflect the new information; and new hypotheses are introduced when warranted. The incremental model revision process is controlled by the built-in and modeler-defined suggestors.

Table 1 shows a few of the generic suggestors in the PLASMA library. The generic suggestors are available for use in constructing domain-specific suggestors. The table also gives examples of how these generic suggestors are applied in the gunboat scenario.

Declarative specification of suggestors makes our knowledge representation language more robust and

scalable than procedural encoding, as facilitates the explicit representation of semantic information that can be applied flexibly in a variety of contexts. The suggestors applied in the gunboat scenario could of course be implemented as procedural code. However, suggestors often draw upon semantic knowledge such properties of sets, partial orderings, or spatio-temporal phenomena. A declarative representation allows suggestors to draw upon upper ontologies for set-theoretic, mereological, or spatio-temporal reasoning, to compose these concepts as necessary for each new suggestor, and to rely on reasoning services optimized to a given ontology when executing suggestors related to that ontology. Also, note that the recognition of trigger condition A may require recognition of trigger condition B which in turn requires recognition of trigger condition C, etc. Backward chaining can be used to implement such reasoning chains.

Suggestor Example Usage

Instantiate known entities & set known properties A hostile gunboat is approaching Libya’s high-level goal is protecting territorial rights

Hypothesize new entities to fill unfilled roles “Unknown plan” hypothesis should be introduced for any entity with no enumerated plan

Represent reciprocal & composed relationship hypotheses If HostileGunboat.0 may be the platform performing ProvokedAttack.1, then ProvokedAttack.1 may be the plan of HostileGunboat.0

Specify probability thresholds for model construction actions If the probability of an attack exceeds 0.6 then call for a report on whether attacker can localize target

Table 1: Some PLASMA Generic Suggestors Considerations such as these suggest that a modeler-friendly toolkit must be able to represent general concepts such as transitive reasoning, set membership, existential quantification, and spatio-temporal reasoning. Furthermore, the toolkit must provide logical reasoning services such as subsumption, constraint satisfaction, and forward and backward chaining in a way that hides implementation details from modelers. Such a toolkit enables a scaleable and robust approach to suggestor development, allowing modelers to use existing atomic concepts to articulate new complex trigger conditions for suggestors. Hence, we have adopted first order logic as the basis for our suggestor language; we have implemented a Prolog-like backward chaining algorithm for basic logical reasoning; and our architecture allows an application to interface with special-purpose reasoners when appropriate.

The PLASMA suggestor language provides the ability to specify declaratively the conditions under which a given MFrag or collection of MFrags should be retrieved and instantiated. Such conditions include satisfaction of the context constraints of the MFrags, as well as additional constraints on the input and resident random variables of the MFrag. These additional constraints typically involve conditions on the marginal likelihood of input random variables which, when satisfied, tend to indicate that the relevant hypotheses are sufficiently likely to merit consideration. Logical reasoning is then applied to identify promising random variables to include in the situation-specific model. Suggestors can also provide numerical scores used to prioritize model construction actions and control rule execution. The resulting architecture generalizes logical inference to uncertain situations in a mathematically sound manner, while freeing the modeler from tedious and error-prone code development.

Once a situation specific model has been constructed, we also require the ability to prune hypotheses that are either extremely unlikely or very weakly related to the results of a query of interest. Logical reasoning can also be used to apply thresholds or other rules for pruning parts of a model that are only weakly related to the desired query result.

Many of our suggestors are strictly heuristic, but we have also developed suggestors based on decision theoretic reasoning applied to an approximate model. For example, if we specify penalties for false alarms, missed identifications, and use of computational resources, then an MTheory can represent a decision model for the task model construction problem, in which computational cost is traded off against task performance. Of course, exact solution of this decision model may be more computationally challenging than the task model itself, but we may be able to specify a low-resolution approximation that is accurate enough to provide a basis for suggestor design. We can allow modelers to specify a suggestor as a fragment of an influence diagram, and then automatically translate the decision regions into logical rules to be applied by the logical reasoning engine. Collections of suggestors can be evaluated and compared by running simulations across a variety of scenarios.

The PLASMA system realizes an efficient division of labor by exploiting the respective strengths of logic-based and probability-based representation and reasoning paradigms. We use a logic-based tool for managing the inventory of objects in our reasoning domain. Variable binding, transitive reasoning, set theoretic reasoning and existential quantification is available for purposes of maintaining and pruning our inventory of objects in our domain, recognizing relevant relationships and quickly connecting relevant objects for purposes of representing and reasoning about when and how to assemble our more fine-tuned Bayesian representation tools. However, we are also able to quickly instantiate a more focused, fine-grained probabilistic model for the complex situational reasoning that the Bayesian tools can then perform. A somewhat useful analogy here is the surgeon and surgeon’s assistant. The assistant keeps the workspace clean and uncluttered ensuring that the right tools are available for the surgeon’s effort. Similarly, we have implemented the PLASMA suggestor logic tool as a “surgeon’s assistant” ensuring that the precise tools of our Bayesian reasoner are not hindered by being forced to

consider irrelevant objects and relationships, but can be focused on the accurate fine-grained reasoning that it does best.

Summary and Conclusions

The PLASMA architecture combines logical and probabilistic inference to construct tractable situation-specific Bayesian network models from a knowledge base of model fragments and suggestor rules. This architecture permits us to smoothly integrate the representational advantages of two distinct reasoning and representational paradigms to overcome central challenges in hypothesis management. Logic-based concept definitions encode complex definitional knowledge about the structure and makeup of objects in the reasoning domain while probabilistic knowledge allows us to process uncertain and potentially contradictory information about the actual objects under observation.

This architecture permits declarative specification of model construction knowledge as well as knowledge about the structure of and relationships among entities in the application domain. The concepts underlying PLASMA have been applied to problems in several application domains such as military situation assessment and cyber security. We believe that this prototype implementation will increase the accessibility of our modeling tools, and provide reasoning services that take care of implementation details, thus freeing modelers to concentrate on how best to represent knowledge about the domain.

Acknowledgements

This research has been supported under a contract with the Office of Naval Research, number N00014-04-M-0277. We are grateful to Suzanne Mahoney and Tod Levitt for their contributions to the ideas underlying this work.

References

Bacchus, F., Grove, A., Halpern, J. and Koller, D. 1997. From statistical knowledge bases to degrees of belief. Artificial Intelligence87(1-2):75-143.

Bamber, D. 1998. How Probability Theory Can Help Us Design Rule-Based Systems. In Proceedings of the 1998 Command and Control Research and Technology Symposium, Naval Postgraduate School, Monterey, CA, pp. 441–451.

Olav Bangs?, O., and Wuillemin, P.-H. 2000. Object Oriented Bayesian Networks: A Framework for Topdown Specification of Large Bayesian Networks and Repetitive Structures, Technical Report CIT-87.2-00-obphw1, Department of Computer Science, Aalborg University. Cohen, M., Freeman, J., and Wolf, S. 1996. Metarecognition In Time-Stressed Decision-Making. Human Factors38(2):206-219.

Das, B. 2000. Representing Uncertainties Using Bayesian Networks, Technical Report, DSTO-TR-0918, Defence Science and Technology Organization, Electronics and Surveillance Research Lab, Salisbury, Australia. Heckerman, D., Meek, C. and Koller, D. 2004. Probabilistic Models for Relational Data, Technical Report MSR-TR-2004-30, Microsoft Corporation.

IET, Quiddity*Suite Technical Guide. 2004. Technical Report, Information Extraction and Transport, Inc. Jaynes, E. T. 2003. Probability Theory: The Logic of Science. New York, NY: Cambridge Univ. Press. Koller, D. and Pfeffer, A. 1997. Object-Oriented Bayesian Networks. In Uncertainty in Artificial Intelligence: Proceedings of the Thirteenth Conference, 302-313. San Mateo, CA: Morgan Kaufmann Publishers.

Laskey, K. B. 2004. MEBN: A Logic for Open-World Probabilistic Reasoning, Working Paper, https://www.doczj.com/doc/d73203008.html, /~klaskey/papers/Laskey_MEBN_Logic.pdf, Department of Systems Engineering and Operations Research, George Mason University,

Laskey, K.B., d’Ambrosio, B., Levitt, T. and Mahoney, S. 2000. Limited Rationality in Action: Decision Support for Military Situation Assessment. Minds and Machines, 10(1), 53-77.

Laskey, K. B. and Mahoney, S. M. 1997. Network Fragments: Representing Knowledge for Constructing Probabilistic Models. In Uncertainty in Artificial Intelligence: Proceedings of the Thirteenth Conference, 334-341. San Mateo, CA: Morgan Kaufmann Publishers. Sato, T. 1998, Modeling scientific theories as PRISM programs. In ECAI-98 Workshop on Machine Discovery, 37-45. Hoboken, NJ: John Wiley & Sons.

Wellman, M., Breese, J., Goldman, R. 1992. From Knowledge Bases to Decision Models. Knowledge Engineering Review 7:35–53.

论述物理气相沉积和化学气相沉积的优缺点 物理气相沉积技术表示在真空条件下,采用物理方法,将材料源——固体或液体表面气化成气态原子、分子或部分电离成离子,并通过低压气体(或等离子体)过程,在基体表面沉积具有某种特殊功能的薄膜的技术。物理气相沉积的主要方法有,真空蒸镀、溅射镀膜、电弧等离子体镀、离子镀膜,及分子束外延等。发展到目前,物理气相沉积技术不仅可沉积金属膜、合金膜、还可以沉积化合物、陶瓷、半导体、聚合物膜等。 真空蒸镀基本原理是在真空条件下,使金属、金属合金或化合物蒸发,然后沉积在基体表面上,蒸发的方法常用电阻加热,高频感应加热,电子柬、激光束、离子束高能轰击镀料,使蒸发成气相,然后沉积在基体表面,历史上,真空蒸镀是PVD法中使用最早的技术。 溅射镀膜基本原理是充氩(Ar)气的真空条件下,使氩气进行辉光放电,这时氩(Ar)原子电离成氩离子(Ar+),氩离子在电场力的作用下,加速轰击以镀料制作的阴极靶材,靶材会被溅射出来而沉积到工件表面。如果采用直流辉光放电,称直流(Qc)溅射,射频(RF)辉光放电引起的称射频溅射。磁控(M)辉光放电引起的称磁控溅射。电弧等离子体镀膜基本原理是在真空条件下,用引弧针引弧,使真空金壁(阳极)和镀材(阴极)之间进行弧光放电,阴极表面快速移动着多个阴极弧斑,不断迅速蒸发甚至“异华”镀料,使之电离成以镀料为主要成分的电弧等离子体,并能迅速将镀料沉积于基体。因为有多弧斑,所以也称多弧蒸发离化过程。 离子镀基本原理是在真空条件下,采用某种等离子体电离技术,使镀料原子部分电离成离子,同时产生许多高能量的中性原子,在被镀基体上加负偏压。这样在深度负偏压的作用下,离子沉积于基体表面形成薄膜。 物理气相沉积技术基本原理可分三个工艺步骤: (1)镀料的气化:即使镀料蒸发,异华或被溅射,也就是通过镀料的气化源。 (2)镀料原子、分子或离子的迁移:由气化源供出原子、分子或离子经过碰撞后,产生多种反应。 (3)镀料原子、分子或离子在基体上沉积。 物理气相沉积技术工艺过程简单,对环境改善,无污染,耗材少,成膜均匀致密,与基体的结合力强。该技术广泛应用于航空航天、电子、光学、机械、建筑、轻工、冶金、材料等领域,可制备具有耐磨、耐腐饰、装饰、导电、绝缘、光导、压电、磁性、润滑、超导等特性的膜层。 随着高科技及新兴工业发展,物理气相沉积技术出现了不少新的先进的亮点,如多弧离子镀与磁控溅射兼容技术,大型矩形长弧靶和溅射靶,非平衡磁控溅射靶,孪生靶技术,带状泡沫多弧沉积卷绕镀层技术,条状纤维织物卷绕镀层技术等,使用的镀层成套设备,向计算机全自动,大型化工业规模方向发展。 化学气相沉积是反应物质在气态条件下发生化学反应,生成固态物质沉积在加热的固态基体表面,进而制得固体材料的工艺技术。它本质上属于原子范畴的气态传质过程。现代科学和技术需要使用大量功能各异的无机新材料,这些功能材料必须是高纯的,或者是在高纯材料中有意地掺人某种杂质形成的掺杂材料。但是,我们过去所熟悉的许多制备方法如高温熔炼、水溶液中沉淀和结晶等往往难以满足这些要求,也难以保证得到高纯度的产品。因此,无机新材料的合成就成为现代材料科学中的主要课题。 化学气相沉积是近几十年发展起来的制备无机材料的新技术。化学气相淀积法已经广泛用于提纯物质、研制新晶体、淀积各种单晶、多晶或玻璃态无机薄膜材料。这些材料可以是氧化物、硫化物、氮化物、碳化物,也可以是III-V、II-IV、IV-VI族中的二元或多元的元素间化合物,而且它们的物理功能可以通过气相掺杂的淀积过程精确控制。目前,化学气相

最小二乘法及其应用 1. 引言 最小二乘法在19世纪初发明后,很快得到欧洲一些国家的天文学家和测地学家的广泛关注。据不完全统计,自1805年至1864年的60年间,有关最小二乘法的研究论文达256篇,一些百科全书包括1837年出版的大不列颠百科全书第7版,亦收入有关方法的介绍。同时,误差的分布是“正态”的,也立刻得到天文学家的关注及大量经验的支持。如贝塞尔( F. W. Bessel, 1784—1846)对几百颗星球作了三组观测,并比较了按照正态规律在给定范围内的理论误差值和实际值,对比表明它们非常接近一致。拉普拉斯在1810年也给出了正态规律的一个新的理论推导并写入其《分析概论》中。正态分布作为一种统计模型,在19世纪极为流行,一些学者甚至把19世纪的数理统计学称为正态分布的统治时代。在其影响下,最小二乘法也脱出测量数据意义之外而发展成为一个包罗极大,应用及其广泛的统计模型。到20世纪正态小样本理论充分发展后,高斯研究成果的影响更加显著。最小二乘法不仅是19世纪最重要的统计方法,而且还可以称为数理统计学之灵魂。相关回归分析、方差分析和线性模型理论等数理统计学的几大分支都以最小二乘法为理论基础。正如美国统计学家斯蒂格勒( S. M. Stigler)所说,“最小二乘法之于数理统计学犹如微积分之于数学”。最小二乘法是参数回归的最基本得方法所以研究最小二乘法原理及其应用对于统计的学习有很重要的意义。 2. 最小二乘法 所谓最小二乘法就是:选择参数10,b b ,使得全部观测的残差平方和最小. 用数学公式表示为: 21022)()(m in i i i i i x b b Y Y Y e --=-=∑∑∑∧ 为了说明这个方法,先解释一下最小二乘原理,以一元线性回归方程为例. i i i x B B Y μ++=10 (一元线性回归方程)

等离子熔覆耐磨处理技术 青岛海纳等离子科技有限公司

一公司简介 山东科技大学青岛海纳等离子科技有限公司是以本校材料学科为技术依托,以等离子表面改性为核心技术而成立的具有自主知识产权的高新技术企业,是山东省金属材料与表面工程技术研究中心实验基地。 数年来,公司致力于金属材料表面改性技术的研究与开发,公司坚持以技术求进步、以质量谋发展,并取得了丰硕的科技成果。取得了“真空等离子束表面熔覆耐磨蚀涂层的方法”、“一种本安型耐磨镐型截齿”、“一种耐磨可弯曲刮板输送机”、“一种镐型截齿的生产工艺方法”等多项国家发明专利,另外2008年“等离子控制原位冶金反应技术与工程应用”荣获了国家科学技术进步二等奖,“等离子熔覆强化技术及其在刮板输送机上的应用”获中国煤炭工业科学技术奖二等奖,“高温等离子射流控制原位冶金反应技术及产业化”荣获中国机械工业科学技术奖,“等离子多元共渗合金强化技术”获中国高校技术发明二等奖,“一种铸铁表面快速扫描熔凝硬化的方法”获山东省第六届专利奖金奖。 公司在国际上首次提出了负压等离子熔覆涂层与熔射成型技术的新方法,获得了国家发明专利。该技术是解决金属表面高耐磨耐蚀耐冲击的最新技术方法,并且研制成功了综合经济技术指标优于激光熔覆的大功率高稳定性等离子熔覆专用数控设备,该技术与设备于2002年12月21日通过了山东省科技厅组织的鉴定。鉴定结论认为该技术与设备填补了一项空白,是等离子束表面冶金领域中的一项重大创新,整体技术水平达到了国际领先水平。 公司拥有一批具有学士、硕士、博士学位的研发人员,具有中高级专业技术职务的人员占60%以上,同时,公司还拥有一支专业水平高,经验丰富的职工队伍。 二等离子熔覆技术简介 等离子熔覆技术是继激光熔覆技术之后发展起来的最新表面改性技术,是我们公司拥有的具有原创性的国际领先技术。该技术汲取了激光熔覆技术和对焊技术的先进性,屏弃了堆焊和激光熔覆技术的不足。其基本原理是:在高温等离子束流作用下,将合金粉末与工件基体表面迅速加热并一起熔化、混合、扩散、反应、凝固,等离子束离开后自激冷却,在表面形成一层高硬度耐磨层,从而实现表面的强化与硬化,增强工件的耐磨性能。等离子熔覆耐磨层不仅具有很高的硬

种子磁化处理技术是一项新的物理农业技术,也是一项很有价值的农业增产技术,国外早已广泛应用。在外加磁场的作用下,增强了种子中的酶的功能和活力,促进植物根系生长和吸收养分。根据几年的对比 ...种子磁化处理技 术是一项新的物理农业技术,也是一项很有价值的农业增产技术,国外早已广泛应用。在外加磁场的作 用下,增强了种子中的酶的功能和活力,促进植物根系生长和吸收养分。根据几年的对比试验表明,凡 是经过磁化处理的粮食、蔬菜及经济作物的种子,都比没有磁化的发芽早、长势旺、苗粗根壮。据测算, 玉米平均增产8.1%左右,小麦平均增产7.6%,蔬菜平均增产15~20%。 种子磁化机主要由料仓、流量调节阀、磁化通道、磁块、出料口、底板、行走轮、轨道、调整丝杠、手柄、固定座、螺栓、螺母,箱体组装而成。料仓和出料口联为一体,形成一个磁化通道。流量调节阀安装在磁化通道入口处。可根据不同籽种的不同最佳磁化场强来设定各不相同的磁场,以达到最佳磁化效果目的。可提高发芽率5~8%,出苗期可提前1~2天,并能提高种子抗病能力。在播种前24小时内用磁场对农作物种子进行直接磁化处理,增强种子酶的功能和活力,促进植物根系生长和吸收水分,提高种子的发芽率和作物的新陈代谢,使其稳健生长。 1、磁化处理与丰产原理 磁场种子处理机是依据磁场种子处理正向效应和种子微磁性互作机理开发的新型种子磁化机。采用多级交变磁极处理结构进行落种处理,种子在磁场处理正向效应规定的磁场强度范围内受到微磁化和物质结构扭变处理,处理后的种子播种于土壤中会与土壤形成生化、物理性质的互作,带有微磁性的种子会将其周边的微量元素铁磁性物质、顺磁性物质吸引至种子表皮-土壤固液混合相组成的活性界面中,扭变的膜结构会在土壤环境中慢慢得到修复,之后种子萌发并加以利用丰富的微量元素构建丰产的物质基础。另一方面,微磁性种子还能够解析土壤吸附性磷元素,增加土壤有效磷的含量。 2、应用范围 适用于能够进行种子播种的粮食作物、蔬菜、经济作物、花卉、药材等植物种子的播前即时处理。 3、增产现象的分析 微磁性种子吸附顺磁性、铁磁性物质奠定了富足的微量元素的累积是丰产的关键因素,同时趋磁性微生物的聚集也是刺激种子发芽壮苗的重要因素。趋磁细菌是一类对磁场有趋向性反应的细菌,其菌体能吸收土壤环境中非游离态和游离态的铁元素并在体内合成包裹有膜的纳米磁性颗粒 Fe3O4 或 Fe3S4 晶体即磁小体,它的累积进一步富化了微量元素累积。 4、技术要点 选择设备选择大连产WFD555、辉南产dec—1型等品牌的种子磁化机。生产效率每小时400公斤左右。操作程序将磁化器筒直立,3根支脚分别插入3个插座内,调整支脚使磁化筒垂直于地面。检查分流器,使之位于

第五章练习题参考解答 练习题 5.1 设消费函数为 i i i i u X X Y +++=33221βββ 式中,i Y 为消费支出;i X 2为个人可支配收入;i X 3为个人的流动资产;i u 为随机误差 项,并且2 22)(,0)(i i i X u Var u E σ==(其中2 σ为常数) 。试回答以下问题: (1)选用适当的变换修正异方差,要求写出变换过程; (2)写出修正异方差后的参数估计量的表达式。 5.2 根据本章第四节的对数变换,我们知道对变量取对数通常能降低异方差性,但须对这种模型的随机误差项的性质给予足够的关注。例如,设模型为u X Y 21β β=,对该模型中的变量取对数后得如下形式 u X Y ln ln ln ln 21++=ββ (1)如果u ln 要有零期望值,u 的分布应该是什么? (2)如果1)(=u E ,会不会0)(ln =u E ?为什么? (3)如果)(ln u E 不为零,怎样才能使它等于零? 5.3 由表中给出消费Y 与收入X 的数据,试根据所给数据资料完成以下问题: (1)估计回归模型u X Y ++=21ββ中的未知参数1β和2β,并写出样本回归模型的书写格式; (2)试用Goldfeld-Quandt 法和White 法检验模型的异方差性; (3)选用合适的方法修正异方差。 Y X Y X Y X 55 80 152 220 95 140 65 100 144 210 108 145 70 85 175 245 113 150 80 110 180 260 110 160

79120135190125165 84115140205115180 98130178265130185 95140191270135190 90125137230120200 7590189250140205 741055580140210 1101607085152220 1131507590140225 12516565100137230 10814574105145240 11518080110175245 14022584115189250 12020079120180260 14524090125178265 13018598130191270 5.4由表中给出1985年我国北方几个省市农业总产值,农用化肥量、农用水利、农业劳动力、每日生产性固定生产原值以及农机动力数据,要求: (1)试建立我国北方地区农业产出线性模型; (2)选用适当的方法检验模型中是否存在异方差; (3)如果存在异方差,采用适当的方法加以修正。 地区农业总产值农业劳动力灌溉面积化肥用量户均固定农机动力(亿元)(万人)(万公顷)(万吨)资产(元)(万马力) 北京19.6490.133.847.5394.3435.3天津14.495.234.95 3.9567.5450.7河北149.91639 .0357.2692.4706.892712.6山西55.07562.6107.931.4856.371118.5内蒙古60.85462.996.4915.41282.81641.7辽宁87.48588.972.461.6844.741129.6吉林73.81399.769.6336.92576.81647.6黑龙江104.51425.367.9525.81237.161305.8山东276.552365.6456.55152.35812.023127.9河南200.022557.5318.99127.9754.782134.5陕西68.18884.2117.936.1607.41764 新疆49.12256.1260.4615.11143.67523.3 5.5表中的数据是美国1988研究与开发(R&D)支出费用(Y)与不同部门产品销售量

物理气相沉积(PVD)技术 第一节概述 物理气相沉积技术早在20世纪初已有些应用,但在最近30年迅速发展,成为一门极具广阔应用前景的新技术。,并向着环保型、清洁型趋势发展。20世纪90年代初至今,在钟表行业,尤其是高档手表金属外观件的表面处理方面达到越来越为广泛的应用。 物理气相沉积(Physical Vapor Deposition,PVD)技术表示在真空条件下,采用物理方法,将材料源——固体或液体表面气化成气态原子、分子或部分电离成离子,并通过低压气体(或等离子体)过程,在基体表面沉积具有某种特殊功能的薄膜的技术。物理气相沉积的主要方法有,真空蒸镀、溅射镀膜、电弧等离子体镀、离子镀膜,及分子束外延等。发展到目前,物理气相沉积技术不仅可沉积金属膜、合金膜、还可以沉积化合物、陶瓷、半导体、聚合物膜等。 真空蒸镀基本原理是在真空条件下,使金属、金属合金或化合物蒸发,然后沉积在基体表面上,蒸发的方法常用电阻加热,高频感应加热,电子柬、激光束、离子束高能轰击镀料,使蒸发成气相,然后沉积在基体表面,历史上,真空蒸镀是PVD法中使用最早的技术。 溅射镀膜基本原理是充氩(Ar)气的真空条件下,使氩气进行辉光放电,这时氩(Ar)原子电离成氩离子(Ar+),氩离子在电场力的作用下,加速轰击以镀料制作的阴极靶材,靶材会被溅射出来而沉积到工件表面。如果采用直流辉光放电,称直流(Qc)溅射,射频(RF)辉光放电引起的称射频溅射。磁控(M)辉光放电引起的称磁控溅射。电弧等离子体镀膜基本原理是在真空条件下,用引弧针引弧,使真空金壁(阳极)和镀材(阴极)之间进行弧光放电,阴极表面快速移动着多个阴极弧斑,不断迅速蒸发甚至“异华”镀料,使之电离成以镀料为主要成分的电弧等离子体,并能迅速将镀料沉积于基体。因为有多弧斑,所以也称多弧蒸发离化过程。 离子镀基本原理是在真空条件下,采用某种等离子体电离技术,使镀料原子部分电离成离子,同时产生许多高能量的中性原子,在被镀基体上加负偏压。这样在深度负偏压的作用下,离子沉积于基体表面形成薄膜。 物理气相沉积技术基本原理可分三个工艺步骤: (1)镀料的气化:即使镀料蒸发,异华或被溅射,也就是通过镀料的气化源。 (2)镀料原子、分子或离子的迁移:由气化源供出原子、分子或离子经过碰撞后,产生多种反应。 (3)镀料原子、分子或离子在基体上沉积。 物理气相沉积技术工艺过程简单,对环境改善,无污染,耗材少,成膜均匀致密,与基体的结合力强。该技术广泛应用于航空航天、电子、光

曲线拟合及其应用综述 摘要:本文首先分析了曲线拟合方法的背景及在各个领域中的应用,然后详细介绍了曲线拟合方法的基本原理及实现方法,并结合一个具体实例,分析了曲线拟合方法在柴油机故障诊断中的应用,最后对全文内容进行了总结,并对曲线拟合方法的发展进行了思考和展望。 关键词:曲线拟合最小二乘法故障模式识别柴油机故障诊断 1背景及应用 在科学技术的许多领域中,常常需要根据实际测试所得到的一系列数据,求出它们的函数关系。理论上讲,可以根据插值原则构造n 次多项式Pn(x),使得Pn(x)在各测试点的数据正好通过实测点。可是, 在一般情况下,我们为了尽量反映实际情况而采集了很多样点,造成了插值多项式Pn(x)的次数很高,这不仅增大了计算量,而且影响了函数的逼近程度;再就是由于插值多项式经过每一实测样点,这样就会保留测量误差,从而影响逼近函数的精度,不易反映实际的函数关系。因此,我们一般根据已知实际测试样点,找出被测试量之间的函数关系,使得找出的近似函数曲线能够充分反映实际测试量之间的关系,这就是曲线拟合。 曲线拟合技术在图像处理、逆向工程、计算机辅助设计以及测试数据的处理显示及故障模式诊断等领域中都得到了广泛的应用。 2 基本原理 2.1 曲线拟合的定义 解决曲线拟合问题常用的方法有很多,总体上可以分为两大类:一类是有理论模型的曲线拟合,也就是由与数据的背景资料规律相适应的解析表达式约束的曲线拟合;另一类是无理论模型的曲线拟合,也就是由几何方法或神经网络的拓扑结构确定数据关系的曲线拟合。 2.2 曲线拟合的方法 解决曲线拟合问题常用的方法有很多,总体上可以分为两大类:一类是有理论模型的曲线拟合,也就是由与数据的背景资料规律相适应的解析表达式约束的曲线拟合;另一类是无理论模型的曲线拟合,也就是由几何方法或神经网络的拓扑结构确定数据关系的曲线拟合。 2.2.1 有理论模型的曲线拟合 有理论模型的曲线拟合适用于处理有一定背景资料、规律性较强的拟合问题。通过实验或者观测得到的数据对(x i,y i)(i=1,2, …,n),可以用与背景资料规律相适应的解析表达式y=f(x,c)来反映x、y之间的依赖关系,y=f(x,c)称为拟合的理论模型,式中c=c0,c1,…c n是待定参数。当c在f中线性出现时,称为线性模型,否则称为非线性模型。有许多衡量拟合优度的标准,最常用的方法是最小二乘法。 2.2.1.1 线性模型的曲线拟合 线性模型中与背景资料相适应的解析表达式为: ε β β+ + =x y 1 (1) 式中,β0,β1未知参数,ε服从N(0,σ2)。 将n个实验点分别带入表达式(1)得到: i i i x yε β β+ + = 1 (2) 式中i=1,2,…n,ε1, ε2,…, εn相互独立并且服从N(0,σ2)。 根据最小二乘原理,拟合得到的参数应使曲线与试验点之间的误差的平方和达到最小,也就是使如下的目标函数达到最小: 2 1 1 ) ( i i n i i x y Jε β β- - - =∑ = (3) 将试验点数据点入之后,求目标函数的最大值问题就变成了求取使目标函数对待求参数的偏导数为零时的参数值问题,即: ) ( 2 1 1 = - - - - = ? ?∑ = i i n i i x y J ε β β β (4)

第5章 参数估计 ●1. 从一个标准差为5的总体中抽出一个容量为40的样本,样本均值为25。 (1) 样本均值的抽样标准差x σ等于多少? (2) 在95%的置信水平下,允许误差是多少? 解:已知总体标准差σ=5,样本容量n =40,为大样本,样本均值x =25, (1)样本均值的抽样标准差 x σσ5=0.7906 (2)已知置信水平1-α=95%,得 α/2Z =1.96, 于是,允许误差是E = α/2 σ Z 6×0.7906=1.5496。 ●2.某快餐店想要估计每位顾客午餐的平均花费金额,在为期3周的时间里选取49名顾客组成了一个简单随机样本。 (3) 假定总体标准差为15元,求样本均值的抽样标准误差; (4) 在95%的置信水平下,求允许误差; (5) 如果样本均值为120元,求总体均值95%的置信区间。 解:(1)已假定总体标准差为σ=15元, 则样本均值的抽样标准误差为 x σσ15=2.1429 (2)已知置信水平1-α=95%,得 α/2Z =1.96, 于是,允许误差是E = α/2 σ Z 6×2.1429=4.2000。 (3)已知样本均值为x =120元,置信水平1-α=95%,得 α/2Z =1.96, 这时总体均值的置信区间为 α/2 x Z 0±4.2=124.2115.8 可知,如果样本均值为120元,总体均值95%的置信区间为(115.8,124.2)元。 ●3.某大学为了解学生每天上网的时间,在全校7500名学生中采取不重复抽样方法随机抽取36人,调查他们每天上网的时间,得到下面的数据(单位:小时): 3.3 3.1 6.2 5.8 2.3 4.1 5.4 4.5 3.2 4.4 2.0 5.4 2.6 6.4 1.8 3.5 5.7 2.3 2.1 1.9 1.2 5.1 4.3 4.2 3.6 0.8 1.5 4.7 1.4 1.2 2.9 3.5 2.4 0.5 3.6 2.5

物理气相沉积(PVD)技术 第一节 概述 物理气相沉积技术早在20世纪初已有些应用,但在最近30年迅速发展,成为一门极具广阔应用前景的新技术。,并向着环保型、清洁型趋势发展。20世纪90年代初至今,在钟表行业,尤其是高档手表金属外观件的表面处理方面达到越来越为广泛的应用。 物理气相沉积(Physical Vapor Deposition,PVD)技术表示在真空条件下,采用物理方法,将材料源——固体或液体表面气化成气态原子、分子或部分电离成离子,并通过低压气体(或等离子体)过程,在基体表面沉积具有某种特殊功能的薄膜的技术。 物理气相沉积的主要方法有,真空蒸镀、溅射镀膜、电弧等离子体镀、离子镀膜,及分子束外延等。发展到目前,物理气相沉积技术不仅可沉积金属膜、合金膜、还可以沉积化合物、陶瓷、半导体、聚合物膜等。 真空蒸镀基本原理是在真空条件下,使金属、金属合金或化合物蒸发,然后沉积在基体表面上,蒸发的方法常用电阻加热,高频感应加热,电子柬、激光束、离子束高能轰击镀料,使蒸发成气相,然后沉积在基体表面,历史上,真空蒸镀是PVD法中使用最早的技术。 溅射镀膜基本原理是充氩(Ar)气的真空条件下,使氩气进行辉光放电,这时氩(Ar)原子电离成氩离子(Ar+),氩离子在电场力的作用下,加速轰击以镀料制作的阴极靶材,靶材会被溅射出来而沉积到工件表面。如果采用直流辉光放电,称直流(Qc)溅射,射频(RF)辉光放电引起的称射频溅射。磁控(M)辉光放电引起的称磁控溅射。 电弧等离子体镀膜基本原理是在真空条件下,用引弧针引弧,使真空金壁(阳极)和镀材(阴极)之间进行弧光放电,阴极表面快速移动着多个阴极弧斑,不断迅速蒸发甚至“异华”镀料,使之电离成以镀料为主要成分的电弧等离子体,并能迅速将镀料沉积于基体。因为有多弧斑,所以也称多弧蒸发离化过程。 离子镀基本原理是在真空条件下,采用某种等离子体电离技术,使镀料原子部分电离成离子,同时产生许多高能量的中性原子,在被镀基体上加负偏压。这样在深度负偏压的作用下,离子沉积于基体表面形成薄膜。 物理气相沉积技术基本原理可分三个工艺步骤: (1)镀料的气化:即使镀料蒸发,异华或被溅射,也就是通过镀料的气化源。 (2)镀料原子、分子或离子的迁移:由气化源供出原子、分子或离子经过碰撞后,产生多种反应。 (3)镀料原子、分子或离子在基体上沉积。 物理气相沉积技术工艺过程简单,对环境改善,无污染,耗材少,成膜均匀致密,与基体的结合力强。该技术广泛应用于航空航天、电子、光学、机械、建筑、轻工、冶金、材料等领域,可制备具有耐磨、耐腐饰、装饰、导电、绝缘、光导、压电、磁性、润滑、超导等特性的膜层。随着高科技及新兴工业发展,物理气相沉积技术出现了不少新的先进的亮点,如多弧离子镀与磁控溅射兼容技术,大型矩形长弧靶和溅射靶,非平衡磁控溅射靶,孪生靶技术,带状泡沫多弧沉积卷绕镀层技术,条状纤维织物卷绕镀层技术等,使用的镀层成套设备,向计算机全自动,大型化工业规模方向发展。 第二节 真空蒸镀

毕业论文文献综述 信息与计算科学 最小二乘法的原理及应用 一、国内外状况 国际统计学会第56届大会于2007年8月22-29日在美丽的大西洋海滨城市、葡萄牙首都里斯本如期召开。应大会组委会的邀请,以会长李德水为团长的中国统计学会代表团一行29人注册参加了这次大会。北京市统计学会、山东省统计学会,分别组团参加了这次大会。中国统计界(不含港澳台地区)共有58名代表参加了这次盛会。本届大会的特邀论文会议共涉及94个主题,每个主题一般至少有3-5位代表做学术演讲和讨论。通过对大会论文按研究内容进行归纳,特邀论文大致可以分为四类:即数理统计,经济、社会统计和官方统计,统计教育和统计应用。 数理统计方面。数理统计作为统计科学的一个重要部分,特别是随机过程和回归分析依然展现着古老理论的活力,一直受到统计界的重视并吸引着众多的研究者。本届大会也不例外。 二、进展情况 数理统计学19世纪的数理统计学史, 就是最小二乘法向各个应用领域拓展的历史席卷了统计大部分应用的几个分支——相关回归分析, 方差分析和线性模型理论等, 其灵魂都在于最小二乘法; 不少近代的统计学研究是在此法的基础上衍生出来, 作为其进一步发展或纠正其不足之处而采取的对策, 这包括回归分析中一系列修正最小二乘法而导致的估计方法。 数理统计学的发展大致可分 3 个时期。① 20 世纪以前。这个时期又可分成两段,大致上可以把高斯和勒让德关于最小二乘法用于观测数据的误差分析的工作作为分界线,前段属萌芽时期,基本上没有超出描述性统计量的范围。后一阶段可算作是数理统计学的幼年阶段。首先,强调了推断的地位,而摆脱了单纯描述的性质。由于高斯等的工作揭示了最小二乘法的重要性,学者们普遍认为,在实际问题中遇见的几乎所有的连续变量,都可以满意地用最小二乘法来刻画。这种观点使关于最小二乘法得到了深入的发展,②20世纪初到第二次世界大战结束。这是数理统计学蓬勃发展达到成熟的时期。许多重要的基本观点和方法,以及数理统计学的主要分支学科,都是在这个时期建立和发展起来的。这个时期的成就,包含了至今仍在广泛使用的大多数统计方法。在其发展中,以英国统计学家、生物学家费希尔为代表的英国学派起了主导作用。③战后时期。这一时期中,数理统计学在应用和理论两方面继续获得很大的进展。

第29卷第1期2008年1舞 腐蚀与防护 CORROSION&PROTECrION V01.29No.1 January2008 矿09“””《”岛 8试验研究8 Q,《,c。。E>c。。《“铁基高铬等离子束表面冶金涂层 耐海水腐蚀性能 置斐斐1,李惠琪1.一。孙玉宗1。予盛旺1。任勇强1 (1。虫东耱技大学毒耋辩学院,青囊266510≠2。趣寨辩技大学糖辫科学毒王翟学院,善芝衷100083)摘要:采用多元Fe基粉末,用等离子荣寝面冶金方法在低碳钢表面制备厚度约3,---5蝴的合金涂屡。借助光学显徽镶、X射线愆射伎、毙谱仪对冶金屡戆组织形态、物摆组成遴褥了研究黎分辑,爰静态浸渍法兹步礤变其褒海水中的耐腐蚀性能。结果表明:表面冶众层内组织均匀细密无缺陷,是固溶了大量Cr的rFe相与商硬度析出相的共晶组织;表丽冶金层在海水中的耐蚀性高于oCrl8Ni9Ti不锈钢,两者均液现为点蚀。 关键灏:等离子糸裘瑟冶金;铁基会金;共鑫组织}海水腐蚀 中图分类号:TGl74.44文献标识鹌:A文章编号:1005-748X(2008)01-0018∞3 CorrosionResistanceinSeawaterofFe-BasedHighChromiumCoatingProduced byDC-PlasmaJetSurfaceMetallurgy WANGFei-feil,LIHui-qil”,SUNYu-zon91,YUSheng-wan91,RENYong-qian91 (1。s醯naongUniversityofScienceandTechnology,Qingdao266510,China; 2.Universityof.ScienceandTechnologyBeijing,B罚ing100083,China)Abstract:Analloycoatingwithapproximate3~5㈣thicknesswasdepositedonlow-carbonsteelbyDC-plasmajetsurfacemetallurgyusingFebasedpowders.Makinguseofopticalmicroscope,X-raydiffraction,energyspectrometer,themorphologyandcompositionfeatureshavebeenstudiedindetail.Corrosionresistanceofthecoatinginseawaterwascharacterizedbyimmersionmethod.Themorphologyofmetallurgicallayerwasuniformandfine.乃撑microstructurewaseutecticstructureof7-FecontaininglotsofCrandhardprecipitatedphase.薅erewerenOdefectsinthe1ayer.Ithadbetterpittingcorrosionresistanceinseawaterthan0Crl8Ni9TistainlesssteelKeywords:DC-plasmajetsurfacemetallurgy;Febasedalloy;Eutecticstructure;Corrosioninseawater 0引畜 解决材料耐海水腐蚀的途径有多静,表面涂层防护是一种常用的方法。在该领域,除了应用广泛的有机涂层外,热喷涂锌铝合金层也是常用的海洋钢构长效防腐蚀方法。但是热嚷涂需要严格的表面前处理,在潮湿的海洋大气中施工受到一定限制。另外有机涂层和热喷涂锌铝合金防腐层的抗冲击磨损性能很差。 等离子束表面冶金技术以等离子弧为热源,采用同步送粉方式,在钢铁等材料表面熔覆一层合金层,具有成分可调范滗大、不需要严格前处理,涂层’致密均匀无孔隙、与基体呈冶金结合等优点。等离 牧稿日期:2007-05-31;修订丑期:2007-06-15 ?18?子束表面冶金涂层已大量成功应用于高耐磨和耐冲 击场合,是一种发展前景广阔的金属表面高抗冲击 抗磨损保护薪技术曩’2】,徨是尾于既掰冲击磨损又 耐海水腐蚀尚无先例。 本工作利用等离子束表面冶金技术制得了Fe 基合金涂层,并对其形貌、缀织及其在海水孛的耐腐 蚀性熊进行了初步探讨。 1实验材料与方法,。 袭面冶金涂层试样在科大海纳等离子科技有限 公司研制的数控等离子柬表面冶金设备上制备[2]。 工艺参数调节范蕊为:工f#电流100~500A,等离 子炬扫描速度50----1000mm/min,送粉气0.2~1.2 m3/h,离子气0。6~1.0m3/h,保护气0.6~1。0 m3/h,等离子柬流长度30一--34mill。 万方数据万方数据

参数估计习题参考答案

参数估计习题参考答案 班级:姓名:学号:得分 一、单项选择题: 1、关于样本平均数和总体平均数的说法,下列正确的是( B ) (A)前者是一个确定值,后者是随机变量(B)前者是随机变量,后者是一个确定值 (C)两者都是随机变量(D)两者都是确定值 2、通常所说的大样本是指样本容量( A ) (A)大于等于30 (B)小于30 (C)大于等于10 (D)小于10 3、从服从正态分布的无限总体中分别抽取容量为4,16,36的样本,当样本容量增大时,样本均值的标准差将( B ) (A)增加(B)减小(C)不变(D)无法确定 4、某班级学生的年龄是右偏的,均值为20岁,标准差

为 4.45.如果采用重复抽样的方法从该班抽取容量为100的样本,那么样本均值的分布为( A ) (A)均值为20,标准差为0.445的正态分布(B)均值为20,标准差为4.45的正态分布 (C)均值为20,标准差为0.445的右偏分布(D)均值为20,标准差为4.45的右偏分布 5. 区间估计表明的是一个( B ) (A)绝对可靠的范围(B)可能的范围(C)绝对不可靠的范围(D)不可能的范围 6. 在其他条件不变的情形下,未知参数的1-α置信区间,( A ) A. α越大长度越小 B. α越大长度越大 C. α越小长度越小 D. α与长度没有关系 7. 甲乙是两个无偏估计量,如果甲估计量的方差小于乙估计量的方差,则称( D ) (A)甲是充分估计量(B)甲乙一样有效(C)乙比甲有效(D)甲比乙有效 8. 设总体服从正态分布,方差未知,在样本容量和置信度保持不变的情形下,根据不同的样本值得到总体均

最小二乘法综述及算例 一最小二乘法的历史简介 1801年,意大利天文学家朱赛普·皮亚齐发现了第一颗小行星谷神星。经过40天的跟踪观测后,由于谷神星运行至太阳背后,使得皮亚齐失去了谷神星的位置。随后全世界的科学家利用皮亚齐的观测数据开始寻找谷神星,但是根据大多数人计算的结果来寻找谷神星都没有结果。时年24岁的高斯也计算了谷神星的轨道。奥地利天文学家海因里希·奥尔伯斯根据高斯计算出来的轨道重新发现了谷神星。 高斯使用的最小二乘法的方法发表于1809年他的著作《天体运动论》中。 经过两百余年后,最小二乘法已广泛应用与科学实验和工程技术中,随着现代电子计算机的普及与发展,这个方法更加显示出其强大的生命力。 二最小二乘法原理 最小二乘法的基本原理是:成对等精度测得的一组数据),...,2,1(,n i y x i i =,是找出一条最佳的拟合曲线,似的这条曲线上的个点的值与测量值的差的平方和在所有拟合曲线中最小。 设物理量y 与1个变量l x x x ,...,2,1间的依赖关系式为:)(,...,1,0;,...,2,1n l a a a x x x f y =。 其中n a a a ,...,1,0是n +l 个待定参数,记()2 1 ∑=- = m i i i y v s 其中 是测量值, 是由己求 得的n a a a ,...,1,0以及实验点),...,2,1)(,...,(;,2,1m i v x x x i il i i =得出的函数值 )(,...,1,0;,...,2,1n il i i a a a x x x f y =。 在设计实验时, 为了减小误差, 常进行多点测量, 使方程式个数大于待定参数的个数, 此时构成的方程组称为矛盾方程组。通过最小二乘法转化后的方程组称为正规方程组(此时方程式的个数与待定参数的个数相等) 。我们可以通过正规方程组求出a 最小二乘法又称曲线拟合, 所谓“ 拟合” 即不要求所作的曲线完全通过所有的数据点, 只要求所得的曲线能反映数据的基本趋势。 三曲线拟合 曲线拟合的几何解释: 求一条曲线, 使数据点均在离此曲线的上方或下方不远处。 (1)一元线性拟合 设变量y 与x 成线性关系x a a y 10+=,先已知m 个实验点),...,2,1(,m i v x i i =,求两个未知参数1,0a a 。 令()2 1 10∑ =--=m i i i x a a y s ,则1,0a a 应满足1,0,0==??i a s i 。 即 i v i v

等离子体增强化学气相沉积(P E C V D)综述

等离子体增强化学气相沉积(PECVD)综述 摘要:本文综述了现今利用等离子体技术增强化学气相沉积(CVD)制备薄膜的原理、工艺设备现状和发展。 关键词:等离子体;化学气相沉积;薄膜; 一、等离子体概论——基本概念、性质和产生 物质存在的状态都是与一定数值的结合能相对应。通常把固态称为第一态,当分子的平均动能超过分子在晶体中的结合能时,晶体结构就被破坏而转化成液体(第二态)或直接转化为气体(第三态);当液体中分子平均动能超过范德华力键结合能时,第二态就转化为第三态;气体在一定条件下受到高能激发,发生电离,部分外层电子脱离原子核,形成电子、正离子和中性粒子混合组成的一种集合体形态,从而形成了物质第四态——等离子体。 只要绝对温度不为零,任何气体中总存在有少量的分子和原子电离,并非任何的电离气体都是等离子体。严格地说,只有当带电粒子密度足够大,能够达到其建立的空间电荷足以限制其自身运动时,带电粒子才会对体系性质产生显著的影响,换言之,这样密度的电离气体才能够转变成等离子体。此外,等离子体的存在还有空间和时间限度,如果电离气体的空间尺度L下限不满足等离子体存在的L>>l D(德拜长度l D)的条件,或者电离气体的存在的时间下限不满足t>>t p(等离子体的振荡周期t p)条件,这样的电离气体都不能算作等离子体。

在组成上等离子体是带电粒子和中性粒子(原子、分子、微粒等)的集合 体,是一种导电流体,等离子体的运动会受到电磁场的影响和支配。其性质宏观上呈现准中性(quasineutrality ),即其正负粒子数目基本相当,系统宏观呈中性,但是在小尺度上则体现电磁性;其次,具有集体效应,即等离子体中的带电粒子之间存在库仑力。体内运动的粒子产生磁场,会对系统内的其他粒子产生影响。 描述等离子体的参量有粒子数密度n 和温度T 。 通常用n e 、n i 和n g 来表示等离子体内的电子密度、粒子密度和中性粒子密度。当n e =n i 时,可用n 来表示二者中任一带电粒子的密度,简称等离子体密度。但等离子体中一般含有不同价态的离子,也可能含有不同种类的中性粒子,因此电子密度与粒子密度不一定总是相等。对于主要是一阶电离和含有同一类中性粒子的等离子体,可以认为n e ≈ n i ,对此,定义:a =n e /( n e + n g )为电离度。在热力学平衡条件下,电离度仅取决于粒子种类、粒子密度及温度。用T e 、T i 和T g 来表示等离子体的电子温度、离子温度和中性粒子温度,考虑到“热容”,等离子体的宏观温度取决于重粒子的温度。在热力学平衡态下,粒子能量服从麦克斯韦分布,单个粒子平均平动能KE 与热平衡温度T 关系为: 21322 kT KE mv == 等离子体的分类按照存在分为天然和人工等离子体。按照电离度a 分为: a<<0.1称为弱电离等离子体,当a > 0.1时,称为为强电离等离子体;a =1 时,则叫完全等离子体。按照粒子密度划分为致密等离子体n >1518310cm -,若n<1214310cm -为稀薄等离子体。按照热力学平衡划分为完全热力学平衡等离子体,即

等离子熔覆耐磨处理技术

青岛海纳等离子科技有限公司 一、等离子熔覆技术简介 等离子金属表面熔覆处理技术(等离子束金属表面原位冶金技术)是我公司在堆焊以及激光熔覆的基础上自主研发的提高金属表面性能的一项新技术,利用该技术可在金属零部件的表面获得一层具有特殊性能的合金熔覆层,以提高金属零部件的耐磨损、耐冲击和耐腐蚀性能,熔覆层与基体为冶金结合,结合强度高。该项技术获得了多项国家专利,并且在2008年荣获“国家级科技进步奖二等奖”,以及“中国煤炭工业科学技术奖二等奖”和“中国机械工业科学技术奖二等奖”等多项奖励。 等离子熔覆技术的基本原理是在柔性高温等离子束流作用下,将合金粉末与基体表面迅速加热并一起熔化、混合、扩散、反应、凝固,等离子束离开后自激冷却,从而实现表面的强化与硬化。 2002年12月份通过了对负压等离子束熔覆金属陶瓷涂层技术和数控等离子熔覆强化机床的鉴定,鉴定结论认为该项技术与设备是等离子表面冶金领域的一项重大创新,填补了一项空白,总体技术达到了国际领先水平。等离子熔覆已在抗冲击耐磨损防腐蚀方面显示其很大的优越性和强大的生命力。 等离子熔覆的特点是: (1)等离子束能量密度高,熔覆耐磨层与基体为冶金结合,结合强度高,不脱落。 (2)无需喷砂等前处理过程,生产工艺简单,降低了生产成本,提高了生产效率。 (3)整个熔覆过程在数控系统控制下实现,自动化程度高,适合进行批量工业化生产。. (4)对使用环境要求低,无需设备降温、除尘等辅助要求,操作简单,设备维

修维护容易。 (5)电热转换效率高,能效比高。 (6)熔覆过程稀释率低,熔覆层性能容易控制。 (7)粉末适用范围广,可使用铁基、镍基、钴基等粉末熔覆。 (8)根据使用要求不同,熔覆层单层厚度可调(0.5mm~6.0mm)。 二、等离子熔覆耐磨处理系列设备 DRF-2型数控等离子熔覆耐磨处理设备 1、可对中部槽的中板进行耐磨处理。 2、可对平面零部件进行耐磨处理。 3、采用先进的数控技术,根据需要编好程序后自动完成熔覆过程,自动化程度高。 4、熔覆层平整度高,材质均匀,稀释率低。 5、程序控制自动送粉,送粉速度可调。 DRF-5B型数控等离子熔覆耐磨处理设备

种子磁化处理技术种子处 理技术 The following text is amended on 12 November 2020.

种子磁化处理技术是一项新的物理农业技术,也是一项很有价值的农业增产技术,国外早已广泛应用。在外加磁场的作用下,增强了种子中的酶的功能和活力,促进植物根系生长和吸收养分。根据几年的对比 ...种子磁化处理技术是一项新的物理农业技术,也是一项很有价值的农业增产技术,国外早已广泛应用。在外加磁场的作用下,增强了种子中的酶的功能和活力,促进植物根系生长和吸收养分。根据几年的对比试验表明,凡是经过磁化处理的粮食、蔬菜及经济作物的种子,都比没有磁化的发芽早、长势旺、苗粗根壮。据测算,玉米平均增产%左右,小麦平均增产%,蔬菜平均增产15~20%。 种子磁化机主要由料仓、流量调节阀、磁化通道、磁块、出料口、底板、行走轮、轨道、调整丝杠、手柄、固定座、螺栓、螺母,箱体组装而成。料仓和出料口联为一体,形成一个磁化通道。流量调节阀安装在磁化通道入口处。可根据不同籽种的不同最佳磁化场强来设定各不相同的磁场,以达到最佳磁化效果目的。可提高发芽率5~8%,出苗期可提前1~2天,并能提高种子抗病能力。在播种前24小时内用磁场对农作物种子进行直接磁化处理,增强种子酶的功能和活力,促进植物根系生长和吸收水分,提高种子的发芽率和作物的新陈代谢,使其稳健生长。 1、磁化处理与丰产原理 磁场种子处理机是依据磁场种子处理正向效应和种子微磁性互作机理开发的新型种子磁化机。采用多级交变磁极处理结构进行落种处理,种子在磁场处理正向效应规定的磁场强度范围内受到微磁化和物质结构扭变处理,处理后的种子播种于土壤中会与土壤形成生化、物理性质的互作,带有微磁性的种子会将其周边的微量元素铁磁性物质、顺磁性物质吸引至种子表皮-土壤固液混合相组成的活性界面中,扭变的膜结构会在土壤环境中慢慢得到修复,之后种子萌发并加以利用丰富的微量元素构建丰产的物质基础。另一方面,微磁性种子还能够解析土壤吸附性磷元素,增加土壤有效磷的含量。