Enhancing Performance in Latent Semantic Indexing(LSI)Retrieval

Susan T.Dumais

Bellcore,21236

ABSTRACT

We have previously described an extension of the vector retrieval method called"Latent Semantic Indexing"(LSI)(Deerwester,et al.,1990;Dumais,et al.,1988;Furnas,et al.,1988). The LSI approach partially overcomes the problem of variability in human word choice by automatically organizing objects into a"semantic"structure more appropriate for information retrieval.This is done by modeling the implicit higher-order structure in the association of terms with objects.Initial tests?nd this completely automatic method to be a promising way to improve users’access to many kinds of textual materials or to objects for which textual descriptions are available.

This paper describes some enhancements to the basic LSI method,including differential term weighting and relevance feedback.Appropriate term weighting improves performance by an average of40%,and feedback based on3relevant documents improves performance by an average of67%.

1.Introduction

We have developed a method called Latent Semantic Indexing (LSI)that can improve people’s access to electronically available textual information (Deerwester,et al.,1990;Dumais,et al.,1988;Furnas,et al.,1988).Most approaches to retrieving textual materials depend on a lexical match between words in users’requests and those in database objects.Typically only text objects that contain one or more words in common with those in the users’query are returned as relevant.Word-based retrieval systems like this are,however,far from ideal -many objects relevant to a users’query are missed,and many unrelated or irrelevant materials are retrieved.We believe that fundamental characteristics of human verbal behavior underly these retrieval dif?culties (Bates,1986;Fidel,1985;Furnas et al.,1987).Because of the tremendous diversity in the words people use to describe the same object or concept (synonymy ),requesters will often use different words from the author or indexer of the information,and relevant materials will be missed.Conversely,since the same word often has more than one meaning (polysemy ),irrelevant materials will be retrieved.

The LSI approach tries to overcome these problems by automatically organizing objects into a semantic structure more appropriate for information retrieval.This is done by modeling the implicit higher-order structure in the association of terms with objects.We assume that there is some underlying or "latent"structure in the pattern of word usage that is partially obscured by the variability of word choice.We use statistical techniques to estimate this latent structure and get rid of the obscuring "noise".A description of terms,objects and user queries based on the underlying latent semantic structure (rather than surface level word choice)is used for representing and retrieving information.LSI has several appealing properties for retrieval,both in a standard retrieval scenario,where a set of text objects is retrieved in response to a user’s query,and in more sophisticated retrieval operations.

2.Overview of Latent Semantic Indexing

2.1Theory.

The particular latent semantic indexing analysis that we have tried uses singular-value decomposition (SVD),a technique closely related to eigenvector decomposition and factor analysis (Forsythe,Malcolm and Moler,1977;Cullum and Willoughby,1985).We take a large matrix of term to text-object association data and decompose it into a set of,typically 50to 150,orthogonal factors from which the original matrix can be approximated by linear combination.More formally,any rectangular matrix,X ,for example a t ×o matrix of terms and objects,can be decomposed into the product of three other matrices:

t ×o X =t ×r T 0.r ×r S 0.r ×o

O 0′,such that T 0and O 0have orthonormal columns,S 0is diagonal,and r is the rank of X .This is so-called singular value decomposition of X and it is unique up to certain row,column and sign permutations.

If only the k largest singular values of S 0are kept along with their corresponding columns in the

T 0and O 0matrices,and the rest deleted (yielding matrices S ,T and O ),the resulting matrix,X

?,is

the unique matrix of rank k that is closest in the least squares sense to X :

t ×o X ~~t ×o X ?=t ×k T .k ×k S .k ×o

O ′.The idea is that the X

?matrix,by containing only the ?rst k independent linear components of X ,captures the major associational structure in the matrix and throws out noise.It is this reduced model,usually with k =100,that we use to approximate the term to text-object association data in X .Since the number of dimensions in the reduced model (k )is much smaller than the number of unique terms (t ),minor differences in terminology are ignored.In this reduced model,the closeness of objects is determined by the overall pattern of term usage,so objects can be near each other regardless of the precise words that are used to describe them,and their description depends on a kind of consensus of their term meanings,thus dampening the effects of polysemy.In particular,this means that text objects which share no words with a user’s query may still be near it if that is consistent with the major patterns of word usage.We use the term "semantic"indexing to describe our method because the reduced SVD representation captures the major associative relationships between terms and text objects.

One can also interpret the analysis performed by SVD geometrically.The location of the terms and objects in k -space is given by the row vectors from the T and O matrices,respectively.In this space the cosine or dot product between vectors corresponds to their estimated similarity.Since both term and object vectors are represented in the same space,similarities between any combination of terms and objects can be easily obtained.Retrieval proceeds by using the terms in a query to identify a point in the space,and all text objects are then ranked by their similarity to the query.We make no attempt to interpret the underlying dimensions or factors,nor to rotate them to some intuitively meaningful orientation.Our analysis does not require us to be able to describe the factors verbally but merely to be able to represent terms,text objects and queries in a way that escapes the unreliability,ambiguity and redundancy of individual terms as descriptors.

This geometric analysis makes the relationship between LSI and the standard vector retrieval methods clear.In the standard vector model,terms are assumed to be independent,and form the orthogonal basis vectors of the vector space (Salton and McGill,1983;van Rijsbergen,1979).The independence assumption is quite unreasonable,but makes parameter estimation more tractable.Several researchers have suggested ways in which term dependencies might be added to the vector model (e.g.van Rijsbergen,1977;Yu et al.,1983),but parameter estimation remains a severe dif?culty especially when higher-order associations are included.In LSI,the reduced dimensional SVD gives us the orthogonal basis vectors,and term vectors are positioned in this space so as to re?ect the correlations in their usage across documents.Since both terms and objects are represented in the same space,queries can be formed using any combination of terms and objects,and any combination of terms and objects can be returned in response to a query.Like the standard vector method,the LSI representation has many desirable properties:it does not require Boolean queries;it ranks retrieval output in decreasing order of document to query similarity;it easily accommodates term weighting;and it is easy to modify the location of individual vectors for use in dynamic retrieval environments.

The idea of aiding information retrieval by discovering latent proximity structure has several

lines of precedence in the information science literature.Hierarchical classi?cation analyses have sometimes been used for term and document clustering(Sparck Jones,1971;Jardin and van Rijsbergen,1971).Factor analysis has also been explored previously for automatic indexing and retrieval(Baker,1962;Atherton and Borko,1965;Borko and Bernick,1963;Ossorio,1966). Koll(1979)has discussed many of the same ideas we describe above regarding concept-based information retrieval,but his system lacks the formal mathematical underpinnings provided by the singular value decomposition model.Our latent structure method differs from these approaches in several important ways:(1)we use a high-dimensional representation which allows us to better represent a wide range of semantic relations;(2)both terms and text objects are explicitly represented in the same space;and(3)objects can be retrieved directly from query terms.

2.2Practice.

We have applied LSI to several standard information science test collections,for which queries and relevance assessments were available.The text objects in these collections are bibliographic citations(consisting of the full text of document titles,authors and abstracts),or the full text of short articles.For each user query,all documents have been ordered by their estimated relevance to the query,making systematic comparison of alternative retrieval systems straightforward.Results were obtained for LSI and compared against published or computed results for other retrieval techniques,notably the vector method in SMART(Salton,1968).Each document is indexed completely automatically,and each word occurring in more than one document and not on SMART’s stop list is included in the LSI analysis.The LSI analysis begins with a large term by document matrix in which cell entries are a function of the frequency with which a given term occurred in a given document.A singular value decomposition(SVD) is performed on this matrix,and the k largest singular values and their corresponding left and right singular vectors are used for retrieval.Queries are automatically indexed using the same preprocessing as was used for indexing the original documents,and the query vector is placed at the weighted sum of its constituent term vectors.The cosine between the resulting query vector and each document vector is calculated.Documents are returned in decreasing order of cosine, and performance evaluated on the basis of this list.

Performance of information retrieval systems is often summarized in terms of two parameters-precision and recall.Recall is the proportion of all relevant documents in the collection that are retrieved by the system;and precision is the proportion of relevant documents in the set returned to the user.Average precision across several levels of recall can then be used as a summary measure of performance.For several information science test collections,we found that average precision using LSI ranged from comparable to to30%better than that obtained using standard vector methods.(See Deerwester,et al.,1990;Dumais,et al.,1988;for details of these evaluations.)The LSI method performs best relative to standard vector methods when the queries and relevant documents do not share many words,and at high levels of recall.

Another test of the LSI method was done in connection with the Bellcore Advisor,an on-line service which provides information about Bellcore experts on topics entered by a user (Lochbaum and Streeter,1988;Streeter and Lochbaum,1987).The objects in this case were research groups which were characterized by abstracts of technical memoranda and descriptions

of research projects written for administrative purposes.A singular value decomposition of a 728(work group descriptions)by7100(term)matrix was performed and a100-dimensional representation was used for retrieval.Evaluations were based on a new set of263technical abstracts not used in the original scaling,and descriptions of research interests solicited from40 people.Each new abstract or research description served as a query and its similarity to each organization in the space was calculated.The result of interest is the rank of the correct organization in the returned list.For the research descriptions,LSI outperformed standard vector methods(median rank2vs.4);for technical abstracts,performance was the same with the two methods(median rank2).Interestingly,a combination of the two methods(maximum of normalized values on each)provided the best performance,returning the appropriate organization with a median rank of1for both kinds of test queries.

3.Improving Performance

There are several well-known techniques for improving performance in standard vector retrieval systems.One of the most important and robust methods involves differential term weighting (Sparck Jones,1972).Another method of improvement involves an iterative retrieval process based on users’judgments of relevant items-often referred to as relevance feedback(Salton, 1971).The LSI approach also involves choosing the number of dimensions for the reduced space.We do not present detailed results of other methods that sometimes improve retrieval performance like stemming and phrases since preliminary investigations showed at most modest (1%-5%)improvements with these methods.The effects of stemming were especially variable, sometimes resulting in small improvements,sometimes in small decrements in performance. Table1gives a brief description and summarizes some characteristics of the datasets and queries used in our experiments.As noted above,the"documents"in these collections consisted of the full text of document abstracts or short articles.

MED:document abstracts in biomedicine received from the National Library

of Medicine

CISI:document abstracts in library science and related areas published

between1969and1977and extracted from Social Science Citation

Index by the Institute for Scienti?c Information

CRAN:document abstracts in aeronautics and related areas originally used

for tests at the Cran?eld Institute of Technology in Bedford,England TIME:articles from Time magazine’s world news section in1963

ADI:small test collection of document abstracts from library science

and related areas

MED CISI CRAN TIME ADI number of documents10331460140042582 number of terms58315743448610337374 (occurring in more than one document)

number of queries30352258335 average number of documents

relevant to a query2350845 average number of terms per doc50455619016 average number of docs per term9131684 average number of terms per query108985 percent non-zero entries0.860.88 1.10 1.80 4.38

Table1.Characteristics of Datasets

3.1Choosing the number of dimensions.

Choosing the number of dimensions for the reduced dimensional representation is an interesting problem.Our choice thus far has been determined simply by what works best.We believe that the dimension reduction analysis removes much of the noise,but that keeping too few dimensions would loose important information.We suspect that the use of too few dimensions has been a de?ciency of previous experiments that have employed techniques similar to SVD (Atherton and Borko,1965;Borko and Bernick,1963;Ossorio,1966;Koll,1979).Koll,for example,used only seven dimensions.We have evaluated retrieval performance using a range of dimensions.We expect the performance to increase only while the added dimensions continue to account for meaningful,as opposed to chance,co-occurrence.That LSI works well with a relatively small(compared to the number of unique terms)number of dimensions shows that these dimensions are,in fact,capturing a major portion of the meaningful structure.

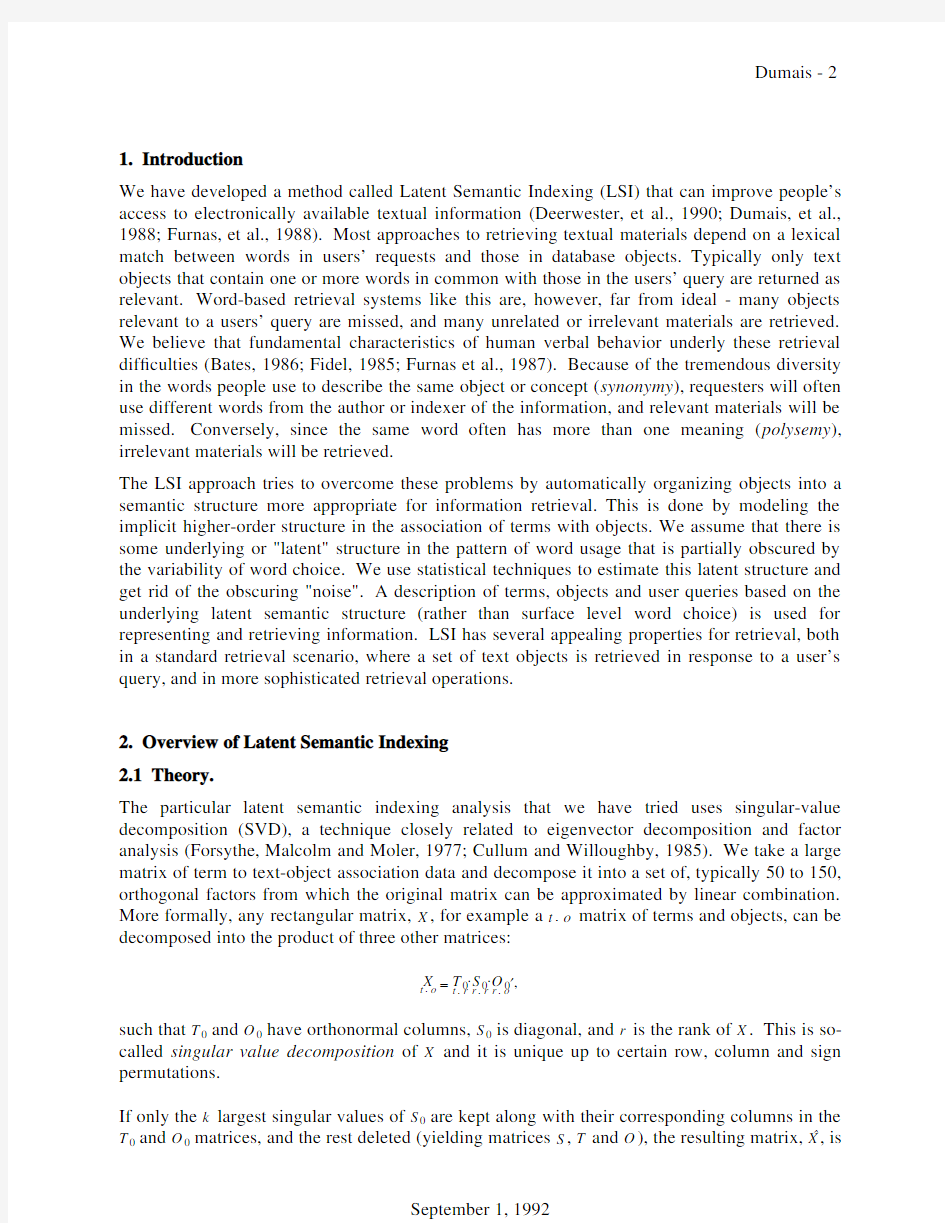

Figure1shows performance for the MED database using different numbers of dimensions in the reduced LSI representation.Performance is average precision over recall levels of0.25,0.50and 0.75.

00.20.40.60.81Mean

Precision Dimensions

MED-LogEntropy

Performance vs.number of dimensions

1003005007009001100

....................Figure 1.MED number of dimensions

It is clear from this ?gure that performance improves considerably after 10or 20dimensions,peaks between 70and 100dimensions,and then begins to diminish slowly.This pattern of performance (initial large increase and slow decrease to word-based performance)is observed with other datasets as well.As noted above,eventually performance must approach (typically by decreasing)the level of performance attained by standard vector methods,because with suf?cient parameters SVD will exactly reconstruct the original term by document matrix.

We have found that 100-dimensional representations work well for these test collections.However,the number of dimensions needed to adequately capture the structure in other collections will probably depend on their breadth.Most of the test collections are relatively homogeneous,and 100dimensions appears to be adequate to capture the major patterns of word usage across documents.In choosing the number of dimensions,we have been guided by the operational criterion of "what works best".That is,we examine performance for several different dimensions,and select the dimensionality that maximizes performance.In practice,the use of statistical heuristics for determining the dimensionality of an optimal representation will be important.

3.2Term weighting.

One of the common and usually effective methods for improving retrieval performance in vector methods is to transform the raw frequency of occurrence of a term in a document (i.e.the value of a cell in the raw term-document matrix)by some function.Such transformations normally have two components.Each term is assigned a global weight ,indicating its overall importance in the collection as an indexing term.The same global weighting is applied to an entire row (term)of the term-document matrix.It is also possible to transform the term’s frequency in the document;such a transformation is called a local weighting ,and is applied to each cell in the matrix.In the general case,the value for a term t in a document d is L (t ,d )×G (t ),where L (t ,d )is the local weighting for term t in document d and G (t )is the term’s global weighting.

Some popular local weightings include:

Term Frequency

Binary log(Term Frequency +1)

Term Frequency is simply the frequency with which a given term appears in a given document.Binary weighting replaces any term frequency which is greater than or equal to 1with 1.Log (Term Frequency +1)takes the log of the raw term frequency,thus dampening effects of large differences in frequencies.

Four well-known global weightings are:Normal,GfIdf,Idf,and Entropy.Each is de?ned in terms of the term frequency (tf ij ),which is the frequency of term i in document j ,the document frequency (df i ),which is the number of documents in which term i occurs,and the global frequency (gf i ),which is the total number of times term i occurs in the whole collection.

Normal:√ j Σtf ij 21

GfIdf:df i gf i

Idf:log 2 df i ndocs +1,where ndocs is the number of documents in the collection 1-Entropy or Noise:1?j

Σlog (ndocs )p ij log (p ij ) where p ij =gf i tf ij ,and ndocs is the number of documents in the collection

All of the global weighting schemes basically give less weight to terms that occur frequently or in many documents.The ways in which this is done involve interesting variations in the relative importance of local frequency,global frequency,and document frequency.Normal normalizes the length of each row (term)to 1.This has the effect of giving high weight to infrequent terms.Normal,however,depends only on the sum of the squared frequencies and not the distribution of those frequencies per se -e.g.a term that occurs once in each of 100different documents is given the same weight as a term that occurs 10times in only one document.GfIdf and Idf are closely related,both weighting terms inversely by the number of different documents in which

they appear.GfIdf also increases the weight of frequently occurring terms.Neither method depends on the distribution of terms in documents,only the number of different documents in which a term occurs -e.g.a term occurring 10times in each of two documents is given the same weight as a term occurring 1time in one document and 19times in a second document.Entropy is based on information theoretic ideas and is the most sophisticated weighting scheme.The

average uncertainty or entropy of a term is given by j Σlog (ndocs )

p ij log (p ij ) where p ij =gf i tf ij .Subtracting this quantity from a constant assigns minimum weight to terms that are equally distributed over documents (i.e.where p ij =1/ndocs ),and maximum weight to terms which are concentrated in a few documents.Entropy takes into account the distribution of terms over documents.

We explored the effects of six different term weighting schemes in each of the test collections.We performed analyses using:no global weighting (i.e.raw term frequency,tf ij ),combinations of the local weight tf ij and each of the four global weights discussed above (GfIdf,Idf,Entropy,and Normal),and one combination of a local log weight (log(tf ij +1))and a global entropy weight (LogEntropy).The original term by document matrix was transformed according to the relevant weighting scheme,and a reduced dimensional SVD was calculated and used for the analysis.Sixty dimensions were used for the ADI collection,and 100dimensions were used for the remaining collections.In all cases,query vectors were composed using the same weight used to transform the original matrix.

Average precision (over the queries)at 10levels of recall is shown in Figure 2for the Cran?eld test collection (CRAN).For this evaluation,we used a subset of CRAN,made up of the 924documents that were relevant to at least one query,and a set of 100test queries.

00.20.40.60.81

00.20.40.60.8

1Precision

Recall

Cran?eld subset

Global weightings -

LSI

LogEntropy

Entropy Idf

Raw TF

GfIdf Normal Figure 2.CRAN global term weightings

As can be seen,Normal and GfIdf are worse than no global weighting,and Idf,Entropy and LogEntropy result in substantial performance improvements.These results are generally con?rmed on the other data sets as well.Table 2presents a summary of term weighting experiments for ?ve different datasets.The entries in the table are average precision over three levels of recall (.25,.50and .75)and over all queries.The numbers in parenthesis indicate the percentage improvement over performance in the Raw Term Frequency case for that dataset.

TF TF TF TF TF Log(TF)

Raw Normal GfIdf Idf Entropy Entropy

ADI.28.30(+7%).24(-14%).34(+22%).36(+30%).36(+30%) MED.52.48(-6%).55(+6%).67(+30%).66(+27%).72(+39%) CISI.11.10(-6%).10(-7%).15(+36%).16(+46%).17(+53%) CRAN.29.25(-15%).28(-5%).40(+37%).40(+38%).46(+57%) TIME.49.32(-35%).42(-14%).55(+12%).54(+10%).59(+20%)

mean-11%-7%+27%+30%+40%

Table2.Summary term weighting

Normalization and GfIdf have mixed effects,depending on the dataset,but generally result in decreases in performance compared with raw term frequency.Idf,Entropy and LogEntropy all result in consistently large improvements in performance,with LogEntropy being somewhat better than the other two weightings.

These results are quite consistent with those reported by Harman(1986)using a standard vector retrieval method.In her study using the IRX(Information Retrieval Experiment)at the National Library of Medicine,Harman found that both Idf and Entropy weightings produced large performance advantages.Harmon found the following advantages over her baseline term overlap measure:Idf20%;Entropy24%;LogEntropy35%;LogEntropy/normalized-document-lengths44%.Given the differences in test document sets and the baseline method,the agreements in our results indicate that positive effects of the Idf and Entropy global weightings, and the local Log weighting are quite robust.We did not run any tests that involved normalizations of document length.We hope to encorporate this into our tools soon,but suspect that the differences will be relatively small for our test sets since documents within a set are roughly comparable in length(except for the TIME collection).

Somewhat surprisingly,term weighting experiments with the Bellcore Advisor which used an LSI representation give different results(Lochbaum and Streeter,1988).When both the initial matrix transformation and query processing used the same weighting scheme,Lochbaum and Streeter found the best results for a Normalization weighting(median rank3,75th percentile rank19),compared with(median rank4,75th percentile rank23)for the raw matrix.Best performance was found when Normalization was used for the initial matrix transformation,and Entropy was used for query processing(median rank2,75th percentile rank14).It is not clear how these differences can be explained.The Lochbaum and Streeter study differs from the others in an number of ways(e.g.performance measure,length of queries,and variations in document length,use of phrases)and some combination of these factors may be responsible.

3.3Relevance feedback.

The idea behind relevance feedback is quite https://www.doczj.com/doc/1d12437793.html,ers are very unlikely to be able to specify their information needs adequately,especially given only one chance.With increases in computer speed,interactive or iterative searches are common,and users can reformulate queries in light of the system’s response to previous queries(e.g.Oddy,1977;Williams,1984).Users

claim to use information about the number of documents retrieved,related terms and information in relevant citations to reformulate their search requests.There is,however,little experimental evidence to back up this claim or to assess the success of such reformulations. Another approach to query reformulation is to have the system automatically alter the query based on user feedback about which documents are relevant to the initial request(Salton,1971). This automatic system adaptation is what is usually meant by relevance feedback,and can be summarized as follows:

1.initial user query->ranked list of documents

https://www.doczj.com/doc/1d12437793.html,er selects some documents as relevant

system automatically reformulates query using these docs->

new ranked list of docs

iterate step2.

Simulations have shown that relevance feedback can be very effective(Ide and Salton,1971; Rocchio,1971;Ide,1971;Salton,1971;Stanfel,1971;Stan?ll and Kahle,1986;Salton and Buckley,1990).Systems can use information about which documents are relevant in many ways.Typically what is done is to increase the weight given to terms occurring in relevant documents and to decrease the weight of terms occurring in non-relevant documents.Most of our tests using LSI have involved a method in which the initial query is replaced with the vector sum of the documents the users has selected as relevant.We do not currently make use of negative information;for example,by moving the query away from documents which the user has indicated are irrelevant.

Table3on the next page shows an example of the feedback process using Query34from the CISI dataset.The top half of the table shows the system’s initial response to the query.The?rst column indicates whether the document is relevant to the query or not;the second column is the document number;the third column is the cosine between the query and the document(in the 100-dimensional LSI solution);the fourth column is the rank of the document;and the?fth column is an abbreviated document title.We see that4of the top15documents are relevant-there are38documents relevant to this query.The precision for this query(averaged over recall levels.25,.50and.75)is.09.

Next we show what happens when the?rst relevant document,document673in this case, replaces the initial query.This can be viewed as a simulation of a retrieval scenario in which a user looks down a list of candidate documents and stops when the?rst relevant document is encountered.The full text of this document abstract is then used as the query.Now7of the top 15documents,including the?rst4,are relevant.The precision for this query improves to.14;far from1.00,but a noticeable improvement none the less.(Note that document673has similarity 1.00with itself.When more than one document is used to form the new query,the similarities will not be1.00,and there is no guarantee that the documents selected as relevant will be at the top of the revised list.)

Original Query:CISI,Query#34

Methods of coding used in computerized index systems.

Original Ranked List:

rel docn cos rank title

5310.5981Index Term Weighting

1460.5922Research on Users’Needs:Where is it Getting Us?

R6730.5683Rapid Structure Searches via Permuted Chemical...

R340.5584Keyword-In-Context Index for Technical Literature(KWIC Index) R530.5545The KWIC Index Concept:A Retrospective View 6880.5496The Multiterm Index:A New Concept in Info Retrieval

1490.5237Current Awareness Searches on CT,CBAS and ASCA

R440.5018The Distribution of Term Usage in Manipulative Indexes 860.4949A Core Nursing Library for Practitioners

12830.48110Science Citation Index-A New Dimension in Indexing

3900.47511Factors Determining the Performance of Indexing Systems

8270.46012The Evaluation of Information Retrieval Systems

1940.45113World Biomedical Journals,1951-60:

7900.44714Computer Indexing of Medical Articles-Project Medico

1800.44615Medical School Library Statistics

NEW Ranked List-Using Doc673as Query

rel docn cos rank title

R673 1.0001Rapid Structure Searches via Permuted Chemical...

R6690.6232Rapid Structure Searches via Permuted Chemical Line-Notations R530.6013The KWIC Index Concept:A Retrospective View

R6870.5954Index Chemicus Registry System:Pragmatic Approach to 10260.5845Line-Formula Chemical Notation

6750.5756Atom-by-Atom Typewriter Input for Computerized

6880.5687The Multiterm Index:A New Concept in Info Retrieval

7150.5328Articulation in the Generation of Subject Indexes by computer

14520.5289The Wiswesser Line-Formula Chemical Notation(WLN)

R440.52710The Distribution of Term Usage in Manipulative Indexes

R340.51911Keyword-In-Context Index for Technical Literature(KWIC Index) 7380.51812Substructure Searching of Computer-Readable Chemical Abstracts 6840.51713Operation of Du Pont’s Central Patent Index

R7040.50914Use of the IUPAC Notation in Computer Processing 860.50915A Core Nursing Library for Practitioners

Table3.Relevance Feedback example

We have conducted several more systematic studies of relevance feedback.The document sets and user queries described in Table1were used in these experiments.We compared performance with the original query against two simulated cases of"feedback"and against a "centroid"query.Retrieval is?rst performed using the original query.Then this query is replaced by:the?rst relevant document,the weighted average or centroid of the?rst three relevant documents,or the centroid of all relevant documents.The"centroid"condition represents the best performance that can be realized with a single-point query.While this cannot

be achieved in practice(except through many iterations),it serves as a useful upper bound on the performance that can be expected given the LSI representation.

Table4presents a summary of the relevance feedback simulation experiments.In all cases performance is the mean precision averaged over three levels of recall(.25,.50and.75)and over

all queries.

Orig:performance with original query

Nrel:average number of relevant documents for queries

Feedbk n=1:?rst relevant document is new query

Feedbk n=3:average of?rst three relevance documents is new query

Nview n:median number of documents viewed to?nd?rst n relevant ones

i.e.median rank of n th relevant document

Centroid:=average or centroid of all relevant documents is query

Dataset Orig Nrel Feedbk n=1Nview1Feedbk n=3Nview3Centroid ADI.365.59(63%)3.87(141%)13.98(172%) CISI.1650.21(31%)2.26(62%)9.47(193%) MED.6723.68(1%)1.72(7%)3.80(19%) CRAN.428.51(20%)1.74(76%)6.86(95%) TIME.544.80(48%)1.85(53%)4.85(57%) mean(33%)1(67%)7(107%)

Table4.Relevance Feedback summary

Large performance improvements are found for all but the MED dataset,where initial performance was already quite high.Performance improvements average67%when the?rst three relevant documents are used as a query and33%when the?rst document is used.These substantial performance improvements can be obtained with little cost to the user.A median of

7documents have to be viewed in order to?nd the?rst three relevant documents,and a median

of1document has to be seen in order to?nd the?rst relevant document.Relevance feedback using documents as queries imposes no added costs in terms of system computations.Since both terms and documents are represented as vectors in the same k-dimensional space,forming queries as combinations of terms(normal query),documents(relevance feedback)or both is straightforward.

The"centroid"query results in performance that is quite good in all but one collection.This suggests that the LSI representation is generally adequate to describe the interrelations among

terms and documents,but that users have dif?culty in stating their requests in a way that leads to appropriate query placement.In the case of the CISI collection(where there are an average of50 relevant documents)a single query does not seem to capture the appropriate regions of relevance.We are now exploring a method for representing queries in a way that allows for multiple disjoint regions to be equally relevant to a query(Kane-Esrig et al.,1989).A related method for"query splitting"has also been described by(Borodin,Kerr and Lewis,1968).

The LSI relevance feedback results are comparable to those reported by Salton and his colleagues using the vector method(Rocchio,1971;Ide,1971;Salton,1971).Even though they used somewhat different datasets and the details of their feedback method was quite different, they too found large(average60%)and reliable improvements in performance with feedback. Their feedback method involved?nding all relevant documents in the top15,and modifying the query by adding terms from relevant documents and subtracting terms from non-relevant documents.If there are on average3relevant documents in the top15then these results are very similar to ours.Stan?ll and Kahle(1986)also report large improvements with feedback,but their results involve only two queries and a small subset of documents.We have also performed a few more direct comparisons using a standard word-based vector method on our datasets. Performance increases were comparable to those found with the reduced dimensional LSI representation.

The simulation experiments described above examined performance improvements obtained using a single feedback cycle.We are currently exploring what happens when several retrieval iterations are used.For example,how do three sequential one-document feedback queries compare with the three-document feedback query described above?

It should be noted that the extent of the effectiveness of relevance feedback is tricky to gauge. There will be some improvement in performance simply because the documents used as the feedback query will typically move higher in the ranked retrieval list.It is dif?cult to separate the contribution to increased retrieval effectiveness produced when these documents move to higher ranks from the contributions produced when the rank of previous unselected relevant documents increases.(It is probably the latter which users care about.)Methods for dealing with this include:freezing documents identi?ed as relevant in their original ranks,or splitting the document collection into halves with similar relevance properties,but we have not encorporated such corrections into our calculations.However,even if the magnitude of the improvement is somewhat smaller than noted above,there is room for signi?cant improvement as can be seen by the"centroid"results and the relevance feedback methods are a step in this direction.

How well relevance feedback(or other iterative methods)will work in practice is an empirical issue.We are now in the process of conducting an experiment in which users modify initial queries by:rephrasing the original query;relevance feedback based on user-selected relevant documents;or a combination of both methods.(See Dumais,Littman and Arrowsmith,1989for a description of the interface and evaluation method.)

4.Conclusions

LSI is a modi?cation of the vector retrieval method that explicitly models the correlation of term usage across documents using a reduced dimensional SVD.The technique’s tested performance ranged from roughly comparable to30%better than standard vector methods,apparently depending on the associative properties of the document set and the quality of the queries. These results demonstrate that there is useful information in the correlation of terms across of documents,contrary to the assumptions behind many vector-model information retrieval approaches which treat terms as uncorrelated.

Performance in LSI-based retrieval can be improved by many of the same techniques that have been useful in standard vector retrieval methods.In addition,varying the number of dimensions in the reduced space in?uences performance.Performance increases dramatically over the?rst 100dimensions,reaching a maximum and falling off slowly to reach the typically lower word-based level of performance.Idf and Entropy global term weighting improved performance by an average of30%,and improvements with the combination of a local Log and a global Entropy weighting(LogEntropy)were40%.In simulation experiments,relevance feedback using the?rst 3relevant documents improved performance by an average of67%,and feedback using only the ?rst relevant document improved performance by an average of33%.Since the?rst three relevant document are found after searching through only a median of7documents,this method offers the possibility of dramatic performance improvements with relatively little user effort.An experiment is now underway to evaluate the feedback method in practice,and to compare it with other methods of query reformulation.

REFERENCES

1.Atherton,P.and Borko,H.A test of factor-analytically derived automated classi?cation

methods.AIP rept AIP-DRP65-1,Jan.1965.

2.Baker,https://www.doczj.com/doc/1d12437793.html,rmation retrieval based on latent class analysis.Journal of the ACM,1962,

9,512-521.

3.Bates,M.J.Subject access in online catalogs:A design model.JASIS,1986,37(6),357-376.

4.Borko,H and Bernick,M.D.Automatic document classi?cation.Journal of the ACM,April

1963,10(3),151-162.

5.Borodin, A.,Kerr,L.,and Lewis, F.Query splitting in relevance feedback systems.

Scienti?c Report No.ISR-14,Department of Computer Science,Cornell University,Oct.

1968.

6.Cullum,J.K.and Willoughby,https://www.doczj.com/doc/1d12437793.html,nczos algorithms for large symmetric eigenvalue

computations-Vol1Theory(Chapter5:Real rectangular matrices).Brikhauser,Boston, 1985.

7.Deerwester,S.,Dumais,S.T.,Furnas,G.W.,Landauer,T.K.,and Harshman R.A.

Indexing by latent semantic analysis.JASIS,1990,41(6),391-407.

8.Dumais,S.T.,Furnas,G.W.,Landauer,T.K.,and Deerwester,https://www.doczj.com/doc/1d12437793.html,ing latent semantic

analysis to improve information retrieval.In CHI’88Proceedings,pp.281-285.

9.Dumais,S.T.,Littman,M.L.and Arrowsmith,https://www.doczj.com/doc/1d12437793.html,Search:A program for iterative

information retrieval using LSI.Bellcore TM,1989.

10.Fidel,R.Individual variability in online searching behavior.In C.A.Parkhurst(Ed.).

ASIS’85:Proceedings of the ASIS48th Annual Meeting,Vol.22,October20-24,1985,69-

72.

11.Forsythe,G.E.,Malcolm,M.A.,and Moler,https://www.doczj.com/doc/1d12437793.html,puter Methods for Mathematical

Computations(Chapter9:Least squares and the singular value decomposition).Englewood Cliffs,NJ:Prentice Hall,1977.

12.Furnas,G.W.,Deerwester,S.,Dumais,S.T.,Landauer,T.K.,and Harshman,R.A.

Information retrieval using a singular value decomposition model of latent semantic structure.In Proceedings of SIGIR,1988,36-40.

13.Furnas,G.W.,Landauer,T.K.,Gomez,L.M.,and Dumais,S.T.The vocabulary problem in

human-system https://www.doczj.com/doc/1d12437793.html,munications of the ACM,1987,30(11),964-971.

15.Harman, D.An experimental study of factors important in document ranking.In

Proceedings of ACM SIGIR,Pisa,Italy,1986.

15.Ide,E.New experiments in relevance feedback.Chapter16.In G.Salton(Ed.),The SMART

retrieval system:Experiments in automatic document processing,Prentice Hall,1971.

15.Ide, E.and Salton,G.Interactive search strategies and dynamic?le organization in

information retrieval.In G.Salton(Ed.),The SMART retrieval system:Experiments in automatic document processing,Prentice Hall,1971.

16.Jardin,N.and van Rijsbergen,C.J.The use of hierarchic clustering in information retrieval.

Information Storage and Retrieval,1971,7,217-240.

17.Kane-Esrig,Y.,Casella,G.,Streeter,L.A.,and Dumais,S.T.Ranking documents for

retrieval by Bayesian modeling of a relevance distribution.In Proceedings of the12th IRIS (Information System Research In Scandinavia)Conference,Aug1989.

18.Koll,M.An approach to concept-based information retrieval.ACM SIGIR Forum,XIII32-

50,1979.

20.Lochbaum,K.E.and Streeter,https://www.doczj.com/doc/1d12437793.html,paring and combining the effectiveness of Latent

Semantic Indexing and the ordinary Vector Space Model for information retrieval.Bellcore TM-ARH-012395,August1988.

21.Oddy,https://www.doczj.com/doc/1d12437793.html,rmation retrieval through man-machine dialogue.Journal of

Documentation,1977,33,1-14.

22.Ossorio,P.G.Classi?cation space:A multivariate procedure for automatic document

indexing and retrieval.Multivariate Behavioral Research,October1966,479-524.

24.Rocchio,J.J.Jr.Relevance feedback in information retrieval.Chapter14.In G.Salton(Ed.),

The SMART retrieval system:Experiments in automatic document processing.Prentice Hall,1971.

25.Salton,G.Automatic Information Organization and Retrieval.McGraw Hill,1968.

26.Salton G.(Ed.),The SMART retrieval system:Experiments in automatic document

processing.Prentice Hall,1971.

27.Salton,G.Relevance feedback and the optimization of retrieval effectiveness.Chapter15.

In G.Salton(Ed.),The SMART retrieval system:Experiments in automatic document processing.Prentice Hall,1971.

28.Salton,G.and McGill,M.J.Introduction to Modern Information Retrieval.McGraw-Hill,

1983.

29.Salton,G.and Buckley,C.Improving retrieval performance by relevance feedback.JASIS,

1990,41(4),288-297.

30.Sparck Jones,K.Automatic Keyword Classi?cation for Information Retrieval,Buttersworth,

London,1971.

31.Sparck Jones,K.A statistical interpretation of term speci?city and its applications in

retrieval.Journal of Documentation,1972,28(1),11-21.

32.Stanfel,L.E.Sequential adaptation of retrieval systems based on user https://www.doczj.com/doc/1d12437793.html,rmation

Storage and Retrieval,1971,7,69-78.

33.Stan?ll,C.and Kahle,B.Parallel free-text search on the connection machine system.

Communications of the ACM,1986,29(12),1229-1239.

34.Streeter,L.A.and Lochbaum,K.E.An expert/expert-locating system based on automatic

representation of semantic structure.In Proceedings of the fourth conference on arti?cial intelligence applications,March14-18,1987,San Diego,CA,345-350.

35.van Rijsbergen,C.J.A theoretical basis for the use of co-occurrence data in information

retrieval.Journal of Documentation,1977,33(2),106-119.

36.van Rijsbergen,https://www.doczj.com/doc/1d12437793.html,rmation retrieval.,Buttersworth,London,1979.

37.Williams,M.D.What make RABBIT run?International Journal of Man-Machine Studies,

1984,21,333-352.

38.Yu,C.T.,Buckley,C.,Lam,K.,Salton,G.A generalized term dependence model in

information https://www.doczj.com/doc/1d12437793.html,rmation Research and Technology,1983,2,129-154.

实验三键盘及LED显示实验 一、实验内容 利用8255可编程并行接口控制键盘及显示器,当有按键按下时向单片机发送外部中断请求(INT0,INT1),单片机扫描键盘,并把按键输入的键码一位LED显示器显示出来。 二、实验目的及要求 (一)实验目的 通过该综合性实验,使学生掌握8255扩展键盘和显示器的接口方法及C51语言的编程方法,进一步掌握键盘扫描和LED显示器的工作原理;培养学生一定的动手能力。 (二)实验要求 1.学生在实验课前必须认真预习教科书与指导书中的相关内容,绘制流程图,编写C51语言源程序,为实验做好充分准备。 2.该实验要求学生综合利用前期课程及本门课程中所学的相关知识点,充分发挥自己的个性及创造力,独立操作完成实验内容,并写出实验报告。 三、实验条件及要求 计算机,C51语言编辑、调试仿真软件及实验箱50台套。 四、实验相关知识点 1.C51编程、调试。 2.扩展8255芯片的原理及应用。 3.键盘扫描原理及应用。 4.LED显示器原理及应用。

5.外部中断的应用。 五、实验说明 本实验仪提供了8位8段LED 显示器,学生可选用任一位LED 显示器,只要按地址输出相应的数据,就可以显示所需数码。 显示字形 1 2 3 4 5 6 7 8 9 A b C d E F 段 码 0xfc 0x60 0xda 0xf2 0x66 0xb6 0xbe 0xe0 0xfe 0xf6 0xee 0x3e 0x9c 0x7a 0x9e 0x8e 六、实验原理图 01e 1d 2dp 3 c 4g 56 b 78 9 a b c g d dp f 10a b f c g d e dp a 11GND3a b f c g d e dp 12 GND4 a b f c g d e dp GND1GND2DS29 LG4041AH 234 567 89A B C D E F e 1d 2dp 3 c 4g 56 b 78 9 a b c g d dp f 10a b f c g d e dp a 11GND3a b f c g d e dp 12 GND4 a b f c g d e dp GND1 GND2DS30 LG4041AH 1 2 3 4 5 6 7 8 JP4112345678 JP4712345678JP42 SEGA SEGB SEGC SEGD SEGE SEGG SEGF SEGH SEGA SEGB SEGC SEGD SEGE SEGG SEGF SEGH A C B 12345678 JP92D 5.1K R162 5.1K R163VCC VCC D034D133D232D331D430D529D628D727PA04PA13PA22PA31PA440PA539PA638PA737PB018PB119PB220PB321PB422PB523PB624PB725PC014PC115PC216PC317PC413PC512PC611PC7 10 RD 5WR 36A09A18RESET 35CS 6 U36 8255 D0D1D2D3D4D5D6D7WR RD RST A0A1PC5PC6PC7 PC2PC3PC4PC0PC1CS 12345678JP56 12345678JP53 12345678 JP52 PA0PA1PA2PA3PA4PA5PA6PA7PB0PB1PB2PB3PB4PB5PB6PB7 (8255 PB7)(8255 PB6)(8255 PB5)(8255 PB4)(8255 PB3)(8255 PB2)(8255 PB1)(8255 PB0) (8255 PC7)(8255 PC6)(8255 PC5)(8255 PC4)(8255 PC3)(8255 PC2)(8255 PC1)(8255 PC0) (8255 PA0) (8255 PA1) (8255 PA2) (8255 PA3) (8255 PA4) (8255 PA5) (8255 PA6) (PA7) I N T 0(P 3.2) I N T 0(P 3.3) 七、连线说明

信息工程学院实验报告 课程名称:微机原理与接口技术 实验项目名称:键盘扫描及显示实验 实验时间: 班级: 姓名: 学号: 一、实 验 目 的 1. 掌握 8254 的工作方式及应用编程。 2. 掌握 8254 典型应用电路的接法。 二、实 验 设 备 了解键盘扫描及数码显示的基本原理,熟悉 8255 的编程。 三、实 验 原 理 将 8255 单元与键盘及数码管显示单元连接,编写实验程序,扫描键盘输入,并将扫描结果送数码管显示。键盘采用 4×4 键盘,每个数码管显示值可为 0~F 共 16 个数。实验具体内容如下:将键盘进行编号,记作 0~F ,当按下其中一个按键时,将该按键对应的编号在一个数码管上显示出来,当再按下一个按键时,便将这个按键的编号在下一个数码管上显示出来,数码管上可以显示最近 6 次按下的按键编号。 键盘及数码管显示单元电路图如图 7-1 和 7-2 所示。8255 键盘及显示实验参考接线图如图 7-3 所示。 图 7-1 键盘及数码管显示单元 4×4 键盘矩阵电路图 成 绩: 指导老师(签名):

图 7-2 键盘及数码管显示单元 6 组数码管电路图 图 7-3 8255 键盘扫描及数码管显示实验线路图 四、实验内容与步骤 1. 实验接线图如图 7-3 所示,按图连接实验线路图。

图 7-4 8255 键盘扫描及数码管显示实验实物连接图 2.运行 Tdpit 集成操作软件,根据实验内容,编写实验程序,编译、链接。 图 7-5 8255 键盘扫描及数码管显示实验程序编辑界面 3. 运行程序,按下按键,观察数码管的显示,验证程序功能。 五、实验结果及分析: 1. 运行程序,按下按键,观察数码管的显示。

实验矩阵键盘扫描实验 一、实验要求 利用4X4 16位键盘和一个7段LED构成简单的输入显示系统,实现键盘输入和LED 显示实验。 二、实验目的 1、理解矩阵键盘扫描的原理; 2、掌握矩阵键盘与51单片机接口的编程方法。 三、实验电路及连线 Proteus实验电路

1、主要知识点概述: 本实验阐述了键盘扫描原理,过程如下:首先扫描键盘,判断是否有键按下,再确定是哪一个键,计算键值,输出显示。 2、效果说明: 以数码管显示键盘的作用。点击相应按键显示相应的键值。 五、实验流程图

1、Proteus仿真 a、在Proteus中搭建和认识电路; b、建立实验程序并编译,加载hex文件,仿真; c、如不能正常工作,打开调试窗口进行调试 参考程序: ORG 0000H AJMP MAIN ORG 0030H MAIN: MOV DPTR,#TABLE ;将表头放入DPTR LCALL KEY ;调用键盘扫描程序 MOVC A,@A+DPTR ;查表后将键值送入ACC MOV P2,A ;将ACC值送入P0口 LJMP MAIN ;返回反复循环显示 KEY: LCALL KS ;调用检测按键子程序 JNZ K1 ;有键按下继续 LCALL DELAY2 ;无键按调用延时去抖 AJMP KEY ;返回继续检测按键 K1: LCALL DELAY2 LCALL DELAY2 ;有键按下延时去抖动 LCALL KS ;再调用检测按键程序 JNZ K2 ;确认有按下进行下一步 AJMP KEY ;无键按下返回继续检测 K2: MOV R2,#0EFH ;将扫描值送入R2暂存MOV R4,#00H ;将第一列值送入R4暂存 K3: MOV P1,R2 ;将R2的值送入P1口 L6: JB P1.0,L1 ;P1.0等于1跳转到L1 MOV A,#00H ;将第一行值送入ACC AJMP LK ;跳转到键值处理程序 L1: JB P1.1,L2 ;P1.1等于1跳转到L2 MOV A,#04H ;将第二行的行值送入ACC AJMP LK ;跳转到键值理程序进行键值处理 L2: JB P1.2,L3 ;P1.2等于1跳转到L3

9.3.1 矩阵键盘的工作原理和扫描确认方式 来源:《AVR单片机嵌入式系统原理与应用实践》M16华东师范大学电子系马潮 当键盘中按键数量较多时,为了减少对I/O 口的占用,通常将按键排列成矩阵形式,也称为行列键盘,这是一种常见的连接方式。矩阵式键盘接口见图9-7 所示,它由行线和列线组成,按键位于行、列的交叉点上。当键被按下时,其交点的行线和列线接通,相应的行线或列线上的电平发生变化,MCU 通过检测行或列线上的电平变化可以确定哪个按键被按下。 图9-7 为一个 4 x 3 的行列结构,可以构成12 个键的键盘。如果使用 4 x 4 的行列结构,就能组成一个16 键的键盘。很明显,在按键数量多的场合,矩阵键盘与独立式按键键盘相比可以节省很多的I/O 口线。 矩阵键盘不仅在连接上比单独式按键复杂,它的按键识别方法也比单独式按键复杂。在矩阵键盘的软件接口程序中,常使用的按键识别方法有行扫描法和线反转法。这两种方法的基本思路是采用循环查循的方法,反复查询按键的状态,因此会大量占用MCU 的时间,所以较好的方式也是采用状态机的方法来设计,尽量减少键盘查询过程对MCU 的占用时间。 下面以图9-7 为例,介绍采用行扫描法对矩阵键盘进行判别的思路。图9-7 中,PD0、PD1、PD2 为3 根列线,作为键盘的输入口(工作于输入方式)。PD3、PD4、PD5、PD6 为4根行线,工作于输出方式,由MCU(扫描)控制其输出的电平值。行扫描法也称为逐行扫描查询法,其按键识别的过程如下。 √将全部行线PD3-PD6 置低电平输出,然后读PD0-PD2 三根输入列线中有无低电平出现。只要有低电平出现,则说明有键按下(实际编程时,还要考虑按键的消抖)。如读到的都是高电平,则表示无键按下。 √在确认有键按下后,需要进入确定具体哪一个键闭合的过程。其思路是:依

键盘扫描显示实验原理及分析报告 一、实验目的-------------------------------------------------------------1 二、实验要求-------------------------------------------------------------1 三、实验器材-------------------------------------------------------------1 四、实验电路-------------------------------------------------------------2 五、实验说明-------------------------------------------------------------2 六、实验框图-------------------------------------------------------------2 七、实验程序-------------------------------------------------------------3 八、键盘及LED显示电路---------------------------------------------14 九、心得体会------------------------------------------------------------- 15 十、参考文献--------------------------------------------------------------15

实验三4*4键盘扫描显示控制 一、实验目的 实现一4×4键盘的接口,并在两个数码管上显示键盘所在的行与列。即将8255单元与键盘及数码管显示单元连接,编写实验程序扫描键盘输入,并将扫描结果送数码显示,键盘采用4×4键盘,每个数码管值可以为0到F,16个数。将键盘进行编号记作0—F当按下其中一个按键时将该按键对应的编号在一个数码管上显示出来,当按下下一个按键时便将这个按键的编号在下一个数码管上显示出来,且数码管上可以显示最近6次按下按键的编号。 二、实验要求 1、接口电路设计:根据所选题目和所用的接口电路芯片设计出完整的接口电路,并进行电路连接和调试。 2、程序设计:要求画出程序框图,设计出全部程序并给出程序设计说明。 三、实验电路

四、实验原理说明 图2 数码管引脚图 图1为AT89C51引脚图,说明如下: VCC:供电电压。 GND:接地。 P0口:P0口为一个8位漏级开路双向I/O口,每脚可吸收8TTL门电流。当P1口的管脚第一次写1时,被定义为高阻输入。P0能够用于外部程序数据存储器,它可以被定义为数据/地址的第八位。在FIASH编程时,P0 口作为原码输入口,当FIASH进行校验时,P0输出原码,此时P0外部必须被拉高。 P1口:P1口是一个内部提供上拉电阻的8位双向I/O口,P1口缓冲器能接收输出4TTL门电流。P1口管脚写入1后,被内部上拉为高,可用作输入,P1口被外部下拉为低电平时,将输出电流,这是由于内部上拉的缘故。在FLASH 编程和校验时,P1口作为第八位地址接收。 P2口:P2口为一个内部上拉电阻的8位双向I/O口,P2口缓冲器可接收,输出4个TTL门电流,当P2口被写“1”时,其管脚被内部上拉电阻拉高,且作为输入。并因此作为输入时,P2口的管脚被外部拉低,将输出电流。这是由于内部上拉的缘故。P2口当用于外部程序存储器或16位地址外部数据存储器进行存取时,P2口输出地址的高八位。在给出地址“1”时,它利用内部上拉优势,当对外部八位地址数据存储器进行读写时,P2口输出其特殊功能寄存器的内容。P2口在FLASH编程和校验时接收高八位地址信号和控制信号。 P3口:P3口管脚是8个带内部上拉电阻的双向I/O口,可接收输出4个TTL门电流。当P3口写入“1”后,它们被内部上拉为高电平,并用作输入。作为输入,由于外部下拉为低电平,P3口将输出电流(ILL)这是由于上拉的缘故。P3口同时为闪烁编程和编程校验接收一些控制信号。 ALE/PROG:当访问外部存储器时,地址锁存允许的输出电平用于锁存地址

实验四键盘扫描及显示设计实验报告 一、实验要求 1. 复习行列矩阵式键盘的工作原理及编程方法。 2. 复习七段数码管的显示原理。 3. 复习单片机控制数码管显示的方法。 二、实验设备 1.PC 机一台 2.TD-NMC+教学实验系统 三、实验目的 1. 进一步熟悉单片机仿真实验软件 Keil C51 调试硬件的方法。 2. 了解行列矩阵式键盘扫描与数码管显示的基本原理。 3. 熟悉获取行列矩阵式键盘按键值的算法。 4. 掌握数码管显示的编码方法。 5. 掌握数码管动态显示的编程方法。 四、实验内容 根据TD-NMC+实验平台的单元电路,构建一个硬件系统,并编写实验程序实现如下功能: 1.扫描键盘输入,并将扫描结果送数码管显示。 2.键盘采用 4×4 键盘,每个数码管显示值可为 0~F 共 16 个数。 实验具体内容如下: 将键盘进行编号,记作 0~F,当按下其中一个按键时,将该按键对应的编号在一个数码 管上显示出来,当再按下一个按键时,便将这个按键的编号在下一个数码管上显示出来,数 码管上可以显示最近 4 次按下的按键编号。 五、实验单元电路及连线 矩阵键盘及数码管显示单元

图1 键盘及数码管单元电路 实验连线 图2实验连线图 六、实验说明 1. 由于机械触点的弹性作用,一个按键开关在闭合时不会马上稳定地接通,在断开时也不会一下子断开。因而在闭合及断开的瞬间均伴随有一连串的抖动。抖动时间的长短由按键的机械特性决定,一般为 5~10ms。这是一个很重要的时间参数,在很多场合都要用到。 键抖动会引起一次按键被误读多次。为了确保 CPU 对键的一次闭合仅做一次处理,必须去除键抖动。在键闭合稳定时,读取键的状态,并且必须判别;在键释放稳定后,再作处理。按

二、设计内容 1、本设计利用各种器件设计,并利用原理图将8255单元与键盘及数码管显示单元连接,扫描键盘输入,最后将扫描结果送入数码管显示。键盘采用4*4键盘,每个数码管可以显示0-F共16个数。将键盘编号,记作0-F,当没按下其中一个键时,将该按键对应的编号在一个数码管上显示出来,当在按下一个 键时,便将这个按键的编号在下一个数码管上显示,数码管上 可以显示最近6次按下的按键编号。 设计并实现一4×4键盘的接口,并在两个数码管上显示键盘所在的行与列。 三、问题分析及方案的提出 4×4键盘的每个按键均和单片机的P1口的两条相连。若没有按键按下时,单片机P1口读得的引脚电平为“1”;若某一按键被按下,则该键所对应的端口线变为地电平。单片机定时对P1口进行程序查询,即可发现键盘上是否有按键按下以及哪个按键被按下。 实现4×4键盘的接口需要用到单片机并编写相应的程序来识别键盘的十六个按键中哪个按键被按下。因为此题目还要求将被按下的按键显示出来,因此可以用两个数码管来分别显示被按下的按键的行与列

表示任意一个十六进制数)分别表示键盘的第二行、第三行、第四行;0xXE、0xXD、0xXB、0xX7(X表示任意一个十六进制数)则分别表示键盘的第一列、第二列、第三列和第四列。例如0xD7是键盘的第二行第四列的按键 对于数码管的连接,采用了共阳极的接法,其下拉电阻应保证芯片不会因为电流过大而烧坏。 五、电路设计及功能说明 4×4键盘的十六个按键分成四行四列分别于P1端口的八条I/O 数据线相连;两个七段数码管分别与单片机的P0口和P2口的低七 位I/O数据线相连。数码管采用共阳极的接法,所以需要下拉电阻 来分流。结合软件程序,即可实现4×4键盘的接口及显示的设计。 当按下键盘其中的一个按键时,数码管上会显示出该按键在4×4键 盘上的行值和列值。所以实现了数码管显示按键位置的功能 四、设计思路及原因 对于4×4键盘,共有十六个按键。如果每个按键与单片机的一个引脚相连,就会占用16个引脚,这样会使的单片机的接口不够用(即使够用,也是对单片机端口的极大浪费)。因此我们应该行列式的接法。行列式非编码键盘是一种把所有按键排列成行列矩阵的键盘。在这种键若没有按键按下时,单片机从P1口读得的引脚电平为“1”;若某一按键被按下,则该键所对应的端口线变为地电平。因此0xEX(X表示任意4×4键盘的第一行中的某个按键被按下,相应的0xDX、0xBX、0x7X(X 二、实验内容

4.1键盘扫描输入实验 4.1.1 实验目的 1.学习复杂数字系统的设计方法; 2.掌握矩阵式键盘输入列阵的设计方法。 4.1.2 实验设备 PC微机一台,TD-EDA试验箱一台,SOPC开发板一块。 4.103 实验内容 在电子,控制,信息处理等各种系统中,操作人员经常需要想系统输入数据和命令,以实现人机通信。实现人机通信最常用的输入设备是键盘。在EDA技术的综合应用设计中,常用的键盘输入电路独立式键盘输入电路、矩阵式键盘输入电路和“虚拟式”键盘输入电路。 所谓矩阵是键盘输入电路,就是将水平键盘扫描线和垂直输入译码线信号的不同组合编码转换成一个特定的输入信号值或输入信号编码,利用这种行列矩阵结构的键盘,只需N 个行线和M个列线即可组成NXM按键,矩阵式键盘输入电路的优点是需要键数太多时,可以节省I/O口线;缺点是编程相对困难。 本实验使用TD-EDA实验系统的键盘单元设计一个4x4的矩阵键盘的扫描译码电路。此设计包括键盘扫描模块和扫描码锁存模块,原理如图4-1-1。每按下键盘列阵的一个按键立即在七段数码管上显示相应的数据。 4.1.1 实验步骤 1. 运行Quartus II 软件,分别建立新工程,选择File->New菜单,创建VHDL描述语言设计文件,分别编写JPSCAN.VHD、REG.VHD. 2.扫描码锁存模块REG的VHDL源程序如下; --输入锁存器VHDL源程序:REGVHDL LIBRARY IEEE; USB IEEE.STD-LOGIC-1164.ALL; ENTITY REG IS PORT ( RCLK : IN STD-LOGIC; --扫描时钟YXD : IN STD-LOGIC-VECTOR(3 DOWNTO 0); --Y 列消抖输入 DATA : IN STD-LOGIC-VECTOR(7 DOWNTO 0); --输入数据 LED : OUT STD-LOGIC- VECTOR(7 DOWNTO 0)); --锁存数据输出END ENTITY REG; ARCHITECTURE BEHV OF REG IS SIGNAL RST : STD-LOGIC; --锁存器复位清零 SIGNAL OLDDATA : STD-LOGIC- VECTOR(7 DOWNTO 0); --锁存器旧数据 SIGNAL NEWDATA : STD-LOGIC- VECTOR(7 DOWNTO 0); --锁存器新数据

实验一键盘显示系统实验 1.实验目的: (1)了解8155芯片的工作原理以及应用 (2)了解键盘、LED显示器的接口原理以及硬件电路结构 (3)掌握非编码键盘的编程方法以及程序设计 2.实验内容: 将程序输入实验系统后,在运行状态下,按下数字0~9之一,将在数码管上显示相应数字,按下A、B或C之一,将在数码管上显示“0”、“1”或“2”循环 3.程序框图: 4. 实验程序 下面程序有四部分组成,程序的地址码、机器码、程序所在行号(中间)和源程序。字型码表和关键字表需要同学们自己根据硬件连接填在相应位置。 地址码机器码 1 源程序 0000 2 org 0000h 0000 90FF20 3 mov dptr,#0ff20h 0003 7403 4 mov a,#03h ;方式字 0005 F0 5 movx @dptr,a ;A和B口为输出口,C口为输入口 0006 753012 6 mov 30h,#12h ;LED共阴极,开始显示“H”,地址偏移量 送30h

0009 1155 7 dsp: acall disp1 ;调显示子程序 000B 11FE 8 acall ds30ms 000D 1179 9 acall scan ;调用键盘扫描子程序 000F 60F8 10 jz dsp ;若无键按下,则dsp 0011 11B7 11 acall kcode ;若有键按下,则kcode 0013 B40A00 12 cjne a,#0ah,cont ;是否数字键,若是0-9则是,a-c 则否 0016 400F 13 cont: jc num ;若是,则num 0018 90001F 14 mov dptr,#jtab ;若否,则命令转移表始址送dptr 001B 9409 15 subb a,#09h; 形成jtab表地址偏移量 001D 23 16 rl a ;地址偏移量*2 001E 73 17 jmp @a+dptr ;转入相应功能键分支程序 001F 00 18 jtab: nop 0020 00 19 nop 0021 8008 20 sjmp k1 ;转入k1子程序 0023 800B 21 sjmp k2 ; 转入k2子程序 0025 800E 22 sjmp k3 ; 转入k3子程序 0027 F530 23 num: mov 30h,a 0029 80DE 24 sjmp dsp ; 返回dsp 002B 7531C0 25 k1: mov 31h,#0c0h ; "0" 循环显示 002E 800A 26 sjmp k4 0030 7531F9 27 k2: mov 31h,#0f9h ; "1" 循环显示 0033 8005 28 sjmp k4 0035 7531A4 29 k3: mov 31h,#0a4h ; "2" 循环显示 0038 8000 30 sjmp k4 003A 7B01 31 k4: mov r3,#01h ;显示最末一位,注意共阴极 003C EB 32 k5: mov a,r3 003D 90FF21 33 mov dptr,#0ff21h 0040 F0 34 movx @dptr,a ;字位送8155 0041 E531 35 mov a,31h 0043 90FF22 36 mov dptr,#0ff22h; 字型口 0046 F0 37 movx @dptr,a ;字型送8155的B口 0047 11EC 38 acall delay ;延时1ms*** 0049 74FF 39 mov a,#0ffh 004B F0 40 movx @dptr,a ;关显示,在此使LED各位显示块都灭004C EB 41 mov a,r3 004D 23 42 rl a 004E FB 43 mov r3,a 004F BB40EA 44 cjne r3,#40h,k5 ;还没有循环玩一遍,则循环继续0052 80E6 45 sjmp k4 ;若循环完一遍则返回k4;又开始新一轮的循环 0054 22 46 ret 0055 90FF21 47 disp1: mov dptr,#0ff21h; 字位口A,注意led是共阴极接法0058 7401 48 mov a,#01h 005A F0 49 movx @dptr,a 005B 90FF22 50 mov dptr,#0ff22h;字型口 005E E530 51 mov a,30h 0060 2402 52 add a,#02h 0062 83 53 movc a,@a+pc 0063 F0 54 movx @dptr,a ;字型码输入,N1点亮 0064 22 55 ret ;下面是0到c的字型码 0065 ? 56 db ???? 0066 ? 0067 ? 0068 ? 0069 ? 006A ? 57 db ????

【实验内容】 将8255单元与键盘及数码管显示单元连接,编写实验程序,扫描键盘输入,并将扫描结果送数码管显示。键盘采用4×4键盘,每个数码管显示值可为0~F 共16个数。实验具体内容如下:将键盘进行编号,记作0~F,当按下其中一个按键时,将该按键对应的编号在一个数码管上显示出来,当再按下一个按键时,便将这个按键的编号在下一个数码管上显示出来,数码管上可以显示本次按键的按键编号。8255键盘及显示实验参考接线图如图1所示。 【实验步骤】 1. 按图1连接线路图; 2. 编写实验程序,检查无误后编译、连接并装入系统; 3. 运行程序,按下按键,观察数码管的显示,验证程序功能。 【程序代码】 MY8255_A EQU 0600H MY8255_B EQU 0602H MY8255_C EQU 0604H MY8255_CON EQU 0606H SSTACK SEGMENT STACK DW 16 DUP(?) SSTACK ENDS DA TA SEGMENT DTABLE DB 3FH,06H,5BH,4FH DB 66H,6DH,7DH,07H DB 7FH,6FH,77H,7CH DB 39H,5EH,79H,71H table1 db 0dfh,0efh,0f7h,0fbh,0fdh,0feh count db 0h DA TA END ODE SEGMENT ASSUME CS:CODE,DS:DA TA START: MOV AX,DA TA

MOV DS,AX MOV SI,3000H MOV AL,03H MOV [SI],AL ;清显示缓冲 MOV [SI+1],AL MOV [SI+2],AL MOV [SI+3],AL MOV [SI+4],AL MOV [SI+5],AL MOV DI,3005H MOV DX,MY8255_CON ;写8255控制字 MOV AL,81H OUT DX,AL BEGIN: CALL DIS ;调用显示子程序 CALL CLEAR ;清屏 CALL CCSCAN ;扫描 JNZ INK1 JMP BEGIN INK1: CALL DIS CALL DALL Y CALL DALL Y CALL CLEAR CALL CCSCAN JNZ INK2 ;有键按下,转到INK2 JMP BEGIN ;======================================== ;确定按下键的位置 ;======================================== INK2: MOV CH,0FEH MOV CL,00H COLUM: MOV AL,CH MOV DX,MY8255_A OUT DX,AL MOV DX,MY8255_C IN AL,DX L1: TEST AL,01H ;is L1? JNZ L2 MOV AL,00H ;L1 JMP KCODE L2: TEST AL,02H ;is L2? JNZ L3 MOV AL,04H ;L2 JMP KCODE L3: TEST AL,04H ;is L3?

一、实验名称: 键盘扫描及显示设计实验 二、实验目的 1.学习按键扫描的原理及电路接法; 2.掌握利用8255完成按键扫描及显示。 三、实验内容及步骤 1. 实验内容 编写程序完成按键扫描功能,并将读到的按键值依次显示在数码管上。实验机的按键及显示模块电路如图1所示。按图2连线。 图1 键盘及显示电路

图2 实验连线 2. 实验步骤 (1)按图1接线; (2)键入:check命令,记录分配的I/O空间; (3)利用查出的地址编写程序,然后编译链接; (4)运行程序,观察数码管显示是否正确。 四、流程图

五、源程序 ;Keyscan.asm ;键盘扫描及数码管显示实验 ;***************根据CHECK配置信息修改下列符号值******************* IOY0 EQU 9800H ;片选IOY0对应的端口始地址 ;***************************************************************** MY8255_A EQU IOY0+00H*4 ;8255的A口地址 MY8255_B EQU IOY0+01H*4 ;8255的B口地址 MY8255_C EQU IOY0+02H*4 ;8255的C口地址 MY8255_MODE EQU IOY0+03H*4 ;8255的控制寄存器地址 STACK1 SEGMENT STACK DW 256 DUP(?) STACK1 ENDS DATA SEGMENT DTABLE DB 3FH,06H,5BH,4FH,66H,6DH,7DH,07H,7FH,6FH,77H,7CH,39H,5EH,79H,71H DATA ENDS ;键值表,0~F对应的7段数码管的段位值 CODE SEGMENT ASSUME CS:CODE,DS:DATA START: MOV AX,DATA MOV DS,AX MOV SI,3000H ;建立缓冲区,存放要显示的键值 MOV AL,00H ;先初始化键值为0 MOV [SI],AL MOV [SI+1],AL MOV [SI+2],AL MOV [SI+3],AL MOV DI,3003H MOV DX,MY8255_MODE ;初始化8255工作方式 MOV AL,81H ;方式0,A口、B口输出,C口低4位输入

4*4矩阵键盘扫描汇编程序(基于51单片机) // 程序名称:4-4keyscan.asm ;// 程序用途:4*4矩阵键盘扫描检测 ;// 功能描述:扫描键盘,确定按键值。程序不支持双键同时按下, ;// 如果发生双键同时按下时,程序将只识别其中先扫描的按键;// 程序入口:void ;// 程序出口:KEYNAME,包含按键信息、按键有效信息、当前按键状态;//================================================================== ==== PROC KEYCHK KEYNAME DATA 40H ;按键名称存储单元 ;(b7-b5纪录按键状态,b4位为有效位, ;b3-b0纪录按键) KEYRTIME DATA 43H ;重复按键时间间隔 SIGNAL DATA 50H ;提示信号时间存储单元 KEY EQU P3 ;键盘接口(必须完整I/O口) KEYPL EQU P0.6 ;指示灯接口 RTIME EQU 30 ;重复按键输入等待时间 KEYCHK: ;//=============按键检测程序========================================= ==== MOV KEY,#0FH ;送扫描信号 MOV A,KEY ;读按键状态 CJNE A,#0FH,NEXT1 ;ACC<=0FH ; CLR C ;Acc等于0FH,则CY为0,无须置0 NEXT1: ; SETB C ;Acc不等于0FH,则ACC必小于0 FH, ;CY为1,无须置1 MOV A,KEYNAME ANL KEYNAME,#1FH ;按键名称屏蔽高三位 RRC A ;ACC带CY右移一位,纪录当前按键状态 ANL A,#0E0H ;屏蔽低五位

DSP实验报告 实验名称:矩阵键盘扫描实验系部:物理与机电工程学院专业班级: 学号: 学生姓名: 指导教师: 完成时间:2014-5-8 报告成绩:

矩阵键盘扫描实验 一、实验目的 1.掌握键盘信号的输入,DSP I/O的使用; 2.掌握键盘信号之间的时序的正确识别和引入。 二、实验设备 1. 一台装有CCS软件的计算机; 2. DSP试验箱的TMS320F2812主控板; 3. DSP硬件仿真器。 三、实验原理 实验箱上提供一个 4 * 4的行列式键盘。TMS320F2812的8个I / O口与之相连,这里按键的识别方法是扫描法。 当有键被按下时,与此键相连的行线电平将由此键相连的列线电平决定,而行线的电平在无法按键按下时处于高电平状态。如果让所有的列线也处于高电平,那么键按下与否不会引起行线电平的状态变化,始终为高电平。所以,在让所有的列线处于高电平是无法识别出按键的。现在反过来,让所有的列线处于低电平,很明显,按键所在的行电平将被拉成低电平。根据此行电平的变化,便能判断此行一定有按键被按下,但还不能确定是哪个键被按下。假如是5键按下,为了进一步判定是哪一列的按键被按下,可在某一时刻只让一条列线处于低电平,而其余列线处于高电平。那么按下键的那列电平就会拉成低电平,判断出哪列为低电平就可以判断出按键号码。

模块说明: 此模块共有两种按键,KEY1—KEY4是轻触按键,在按键未按下时为高电平输入FPGA,当按键按下后对FPGA输入低电平,松开按键后恢复高电平输入,KEY5—KEY8是带自锁的双刀双掷开关,在按键未按下时是低电平,按键按下时为高电平并且保持高电平不变,只有再次按下此按键时才恢复低电平输入。每当按下一个按键时就对FPGA就会对此按键进行编码,KEY1—KEY8分别对应的是01H、02H、03H、04H、05H、06H、07H、08H。在编码的同时对DSP产生中断INT1,这个时候DSP就会读取按键的值,具体使用方法可以参考光盘例程 key,prj。

《单片机》实验报告 一.实验题目 实验4.7 7279 键盘扫描及动态LED 显示实验 二.实验要求 本实验利用7279 进行键盘扫描及动态LED 数码管显示控制。 三.实验源程序 #include

一、 实验名称: 键盘扫描及显示设计实验 二、 实验目的 1. 学习按键扫描的原理及电路接法; 2 .掌握利用8255完成按键扫描及显示。 三、 实验内容及步骤 1.实验内容 编写程序完成按键扫描功能,并将读到的按键值依次显示在数码管上。实验机的按 键及显示模块电路如图 1所示。按图2连线。 Γ≡≡ If * —I 〔01S 冥 P π图1键盘及显示电路

2. 实验步骤 (1) 按图1接线; (2) 键入:CheCk 命令,记录分配的I/O 空间; (3) 利用查出的地址编写程序,然后编译链接; (4) 运行程序,观察数码管显示是否正确。 四、流程图 22LZj XD2汽 XDrX XDir d √ I I WirI ?IOR A .■ [QYO Λ : 07 PBfl D? PBl 般 唯 * C4 PB3 PBl ?皿 PBi 71 PB6 DO E55 PB7 FA.Q Al PAJ AO 吨 ! PA5 I WR PCo 7 RD PCI ?÷ CS PΩ I PCI 图2实验连线 >Cχ ? 7 H *J J XXXX t- ?r√ *JJ

开始

五、源程序 是 KeySCa n. asm ;键盘扫描及数码管显示实验 根据CHECKE 置信息修改下列符号值 ******************* 的A 口地址 的B 口地址 的C 口地址 的控制寄存器地址 STACKI SEGMENT STACK DW 256 DUP(?) STACKI ENDS DATA SEGMENT DTABLE 3FH,06H,5BH,4FH,66H,6DH,7DH,07H,7FH,6FH,77H,7CH,39H,5EH,79H,71H DATA ENDS ; 键值表,0?F 对应的7段数码管的段位值 CODE SEGMENT ASSUME CS:CODE,DS:DATA START: MOV AX,DATA MOV DS,AX MOV SI,3000H ; 建立缓冲区,存放要显示的键值 MOV AL,00H ; 先初始化键值为 0 MOV [SI],AL MOV [SI+1],AL MOV [SI+2],AL MOV [SI+3],AL MOV DI,3003H 初始化8255工作方式 方式0, A 口、B 口输出,C 口低4位输入? *************** IoYo EQU 9800H 片选IOYO 对应的端口始地址 MY8255_ _A EQU IOY0+00H*4 ;8255 MY8255 _ _B EQU IOY0+01H*4 ;8255 MY8255_ _C EQU IOY0+02H*4 ;8255 DB MOV DX,MY8255_MODE MOV AL,81H ; ?***************************************************************** MY8255_MODE EQU IOY0+03H*4 ;8255

ET6218R Etek Microelectronics LED Controller/driver General Description ET6218R is an LED Controller driver on a 1/7 to 1/8 duty factor. 7 segment output lines, 4 grid output lines, 1 segment/grid output lines, one display memory, control circuit, key scan circuit are all incorporated into a single chip to build a highly reliable peripheral device for a single chip microcomputer. Serial data is fed to ET6218R via a three-line serial interface, ET6218R pin assignments and application circuit are optimized for easy PCB Layout and cost saving advantages. Features z CMOS Technology z Low Power Consumption z Multiple Display Modes(4~5 Grid, 7~8 segment) z Key scaning(7×1 Matrix) z 8-step Dimming Circuitry z Serial Interface for Clock, Data Input/Output, Strobe Pins z Available in 18-pin, DIP Package Pin Configurations

实验三LED数码管动态显示及4 X4 键盘控制实验 一、实验目的 1.巩固多位数码管动态显示方法。 2.掌握行扫描法矩阵式按键的处理方法。 3.熟练应用AT89S52学习板实验装置,进一步掌握keil C51的使用方法。二、实验内容 使用AT89S52学习板上的4位LED数码管和4 X 4矩阵键盘阵列做多位数码管动态显示及行扫描法键盘处理功能实验。用P0口做数据输出,利用P1做锁存器74HC573的锁存允许控制,编写程序使4位LED数码管按照动态显示方式显示一定的数字;按照行扫描法编写程序对4 X 4矩阵键盘阵列进行定期扫描,计算键值并在数码管上显示。 三、实验系统组成及工作原理 1.4位LED数码管和4 X 4矩阵键盘阵列电路原理图

2.多位数码管动态显示方式 a b c d e f g dp com a b c d e f g dp com a b c d e f g dp com a b c d e f g dp com D0 IO(2) IO(1) 说明4位共阴极LED动态显示3456数字的工作过程 首先由I/O口(1)送出数字3的段选码4FH即数据01001111到4个LED共同的段选线上, 接着由I/O口(2)送出位选码××××0111到位选线上,其中数据的高4位为无效的×,唯有送入左边第一个LED的COM端D3为低电平“0”,因此只有该LED的发光管因阳极接受到高电平“1”的g、d、c、b、a段有电流流过而被点亮,

也就是显示出数字3,而其余3个LED因其COM端均为高电平“1”而无法点亮;显示一定时间后, 再由I/O口(1)送出数字4的段选码66H即01100110到段选线上,接着由I/O 口(2)送出点亮左边第二个LED的位选码××××1011到位选线上,此时只有该LED的发光管因阳极接受到高电平“1”的g、f、c、b段有电流流过因而被点亮,也就是显示出数字4,而其余3位LED不亮; 如此再依次送出第三个LED、第四个LED的段选与位选的扫描代码,就能一一分别点亮各个LED,使4个LED从左至右依次显示3、4、5、6。 3.4 X 4 矩阵式按键扫描处理程序 行扫描法又称逐行零扫描查询法,即逐行输出行扫描信号“0”,使各行依次为低电平,然后分别读入列数据,检查此(低电平)行中是否有键按下。如果读得某列线为低电平,则表示此(低电平)行线与此列线的交叉处有键按下,再对该键进行译码计算出键值,然后转入该键的功能子程序入口地址;如果没有任何一根列线为低电平,则说明此(低电平)行没有键按下。接着进行下一行的“0”行扫描与列读入,直到8行全部查完为止,若无键按下则返回。 有时为了快速判断键盘中是否有键按下,也可先将全部行线同时置为低电平,然后检测列线的电平状态,若所有列线均为高电平,则说明键盘中无键按下,立即返回;若要有一列的电平为低,则表示键盘中有键被控下,然后再如上那样进行逐行扫描。 四、实验设备和仪器 PC机一台 AT89S52单片机学习板、下载线一套 五、实验步骤 1.按时实验要求编写源程序(实验前写)进行软件模拟调试。 2.软件调试好,连接硬件电路。